diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000..965d14b

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,3 @@

+*.[0-9]*.toe

+Backup/*

+.DS_Store

diff --git a/README.md b/README.md

index 2859b45..65df6cb 100644

--- a/README.md

+++ b/README.md

@@ -1,117 +1,150 @@

-# Final Project!

-

-This is it! The culmination of your procedural graphics experience this semester. For your final project, we'd like to give you the time and space to explore a topic of your choosing. You may choose any topic you please, so long as you vet the topic and scope with an instructor or TA. We've provided some suggestions below. The scope of your project should be roughly 1.5 homework assignments). To help structure your time, we're breaking down the project into 4 milestones:

-

-## Project planning: Design Doc (due 11/9)

-Before submitting your first milestone, _you must get your project idea and scope approved by Rachel, Adam or a TA._

-

-### Design Doc

-Start off by forking this repository. In your README, write a design doc to outline your project goals and implementation plan. It must include the following sections:

-

-#### Introduction

-- What motivates your project?

-

-#### Goal

-- What do you intend to achieve with this project?

-

-#### Inspiration/reference:

-- You must have some form of reference material for your final project. Your reference may be a research paper, a blog post, some artwork, a video, another class at Penn, etc.

-- Include in your design doc links to and images of your reference material.

-

-#### Specification:

-- Outline the main features of your project.

-

-#### Techniques:

-- What are the main technical/algorithmic tools you’ll be using? Give an overview, citing specific papers/articles.

-

-#### Design:

-- How will your program fit together? Make a simple free-body diagram illustrating the pieces.

-

-#### Timeline:

-- Create a week-by-week set of milestones for each person in your group. Make sure you explicitly outline what each group member's duties will be.

-

-Submit your Design doc as usual via pull request against this repository.

-## Milestone 1: Implementation part 1 (due 11/16)

-Begin implementing your engine! Don't worry too much about polish or parameter tuning -- this week is about getting together the bulk of your generator implemented. By the end of the week, even if your visuals are crude, the majority of your generator's functionality should be done.

-

-Put all your code in your forked repository.

-

-Submission: Add a new section to your README titled: Milestone #1, which should include

-- written description of progress on your project goals. If you haven't hit all your goals, what's giving you trouble?

-- Examples of your generators output so far

-We'll check your repository for updates. No need to create a new pull request.

-## Milestone 3: Implementation part 2 (due 11/28)

-We're over halfway there! This week should be about fixing bugs and extending the core of your generator. Make sure by the end of this week _your generator works and is feature complete._ Any core engine features that don't make it in this week should be cut! Don't worry if you haven't managed to exactly hit your goals. We're more interested in seeing proof of your development effort than knowing your planned everything perfectly.

-

-Put all your code in your forked repository.

-

-Submission: Add a new section to your README titled: Milestone #3, which should include

-- written description of progress on your project goals. If you haven't hit all your goals, what did you have to cut and why?

-- Detailed output from your generator, images, video, etc.

-We'll check your repository for updates. No need to create a new pull request.

-

-Come to class on the due date with a WORKING COPY of your project. We'll be spending time in class critiquing and reviewing your work so far.

-

-## Final submission (due 12/5)

-Time to polish! Spen this last week of your project using your generator to produce beautiful output. Add textures, tune parameters, play with colors, play with camera animation. Take the feedback from class critques and use it to take your project to the next level.

-

-Submission:

-- Push all your code / files to your repository

-- Come to class ready to present your finished project

-- Update your README with two sections

- - final results with images and a live demo if possible

- - post mortem: how did your project go overall? Did you accomplish your goals? Did you have to pivot?

-

-## Topic Suggestions

-

-### Create a generator in Houdini

-

-### A CLASSIC 4K DEMO

-- In the spirit of the demo scene, create an animation that fits into a 4k executable that runs in real-time. Feel free to take inspiration from the many existing demos. Focus on efficiency and elegance in your implementation.

-- Example:

- - [cdak by Quite & orange](https://www.youtube.com/watch?v=RCh3Q08HMfs&list=PLA5E2FF8E143DA58C)

-

-### A RE-IMPLEMENTATION

-- Take an academic paper or other pre-existing project and implement it, or a portion of it.

-- Examples:

- - [2D Wavefunction Collapse Pokémon Town](https://gurtd.github.io/566-final-project/)

- - [3D Wavefunction Collapse Dungeon Generator](https://github.com/whaoran0718/3dDungeonGeneration)

- - [Reaction Diffusion](https://github.com/charlesliwang/Reaction-Diffusion)

- - [WebGL Erosion](https://github.com/LanLou123/Webgl-Erosion)

- - [Particle Waterfall](https://github.com/chloele33/particle-waterfall)

- - [Voxelized Bread](https://github.com/ChiantiYZY/566-final)

-

-### A FORGERY

-Taking inspiration from a particular natural phenomenon or distinctive set of visuals, implement a detailed, procedural recreation of that aesthetic. This includes modeling, texturing and object placement within your scene. Does not need to be real-time. Focus on detail and visual accuracy in your implementation.

-- Examples:

- - [The Shrines](https://github.com/byumjin/The-Shrines)

- - [Watercolor Shader](https://github.com/gracelgilbert/watercolor-stylization)

- - [Sunset Beach](https://github.com/HanmingZhang/homework-final)

- - [Sky Whales](https://github.com/WanruZhao/CIS566FinalProject)

- - [Snail](https://www.shadertoy.com/view/ld3Gz2)

- - [Journey](https://www.shadertoy.com/view/ldlcRf)

- - [Big Hero 6 Wormhole](https://2.bp.blogspot.com/-R-6AN2cWjwg/VTyIzIQSQfI/AAAAAAAABLA/GC0yzzz4wHw/s1600/big-hero-6-disneyscreencaps.com-10092.jpg)

-

-### A GAME LEVEL

-- Like generations of game makers before us, create a game which generates an navigable environment (eg. a roguelike dungeon, platforms) and some sort of goal or conflict (eg. enemy agents to avoid or items to collect). Aim to create an experience that will challenge players and vary noticeably in different playthroughs, whether that means procedural dungeon generation, careful resource management or an interesting AI model. Focus on designing a system that is capable of generating complex challenges and goals.

-- Examples:

- - [Rhythm-based Mario Platformer](https://github.com/sgalban/platformer-gen-2D)

- - [Pokémon Ice Puzzle Generator](https://github.com/jwang5675/Ice-Puzzle-Generator)

- - [Abstract Exploratory Game](https://github.com/MauKMu/procedural-final-project)

- - [Tiny Wings](https://github.com/irovira/TinyWings)

- - Spore

- - Dwarf Fortress

- - Minecraft

- - Rogue

-

-### AN ANIMATED ENVIRONMENT / MUSIC VISUALIZER

-- Create an environment full of interactive procedural animation. The goal of this project is to create an environment that feels responsive and alive. Whether or not animations are musically-driven, sound should be an important component. Focus on user interactions, motion design and experimental interfaces.

-- Examples:

- - [The Darkside](https://github.com/morganherrmann/thedarkside)

- - [Music Visualizer](https://yuruwang.github.io/MusicVisualizer/)

- - [Abstract Mesh Animation](https://github.com/mgriley/cis566_finalproj)

- - [Panoramical](https://www.youtube.com/watch?v=gBTTMNFXHTk)

- - [Bound](https://www.youtube.com/watch?v=aE37l6RvF-c)

-

-### YOUR OWN PROPOSAL

-- You are of course welcome to propose your own topic . Regardless of what you choose, you and your team must research your topic and relevant techniques and come up with a detailed plan of execution. You will meet with some subset of the procedural staff before starting implementation for approval.

+# Interactive Dance Projection with TouchDesigner

+

+## Introduction

+

+Interactive generative art is something I have always loved, especially since visiting large-scale exhibitions by studios like [teamLab](https://www.teamlab.art/) and [Moment Factory](https://momentfactory.com/home). While the main purpose of computer graphics is to generate beautiful images, these visual experiences can be greatly elevated by integrating sensors and input systems to involve the audience in the art form. Recently, I have been playing around with [TouchDesigner](https://derivative.ca/) and the LeapMotion controller to create interactive art using hand motions. However, these experiences have so far been limited in physical scale. It is now time for me to take them to the next level, both in terms of display output and the input system.

+

+The theme of this project focuses on two important interests of mine: **dancing** and **fashion**. I recently discovered **Deep House**, a music genre that is tied to both a style of [dance](https://www.youtube.com/watch?v=PbSv9doE9IY&ab_channel=MOVEDanceStudio) and the fashion industry, where it is heavily used for runway shows and in stores like ZARA. For example, [check this song out](https://www.youtube.com/watch?v=KD3sOUxKp9g&ab_channel=MelomaniacRDV) to get a sense of the vibe. My plan is to integrate these two elements into my work as I design the overall audience experience and the visuals.

+

+## Goal

+

+The goal of this project is to create an audio-and-motion-reactive visualization of Deep House music. Specifically, there are a few core objectives that I would like to meet:

+

+* The visuals react to a playlist of Deep House music coherently. The overall mood established should follow the beats and pace of the music.

+* The project will be rendered in real-time at an interactive rate (minimum 30 fps).

+* The audience can interact with the visualizer by moving their bodies and dancing to the music.

+* The project can be scaled to a larger environment, ideally displayed using a projector with multiple people interacting with it at the same time.

+* The visuals must be polished with fine-tuned color schemes that feel in place with the overall theme of the project.

+* BONUS: Install the project somewhere on campus for people to actually interact with, and record a video showing this.

+

+*The above goals depend on my ability to source the hardware.*

+

+## Inspiration & References

+

+- [TouchDesigner Artist: Bileam Tschepe](https://www.instagram.com/elekktronaut/) - he has lots of cool patterns created in TouchDesigner that would go well with Deep House.

+- [Universe of Water Particles - teamLab](https://www.teamlab.art/ew/waterparticles-transcending_superblue/superbluemiami/) - one of my favorite pieces from teamLab. Love the use of lines as waterfall. The color contrast between the waterfall and the flowers also works very well.

+- [TouchDesigner: Popping Dance w/ Particles](https://www.youtube.com/watch?v=oSPbZISVjRM) - an example of the type of interactivity that's possible with TouchDesigner.

+- [TouchDesigner: Audio-Reactive Voronoi](https://www.youtube.com/watch?v=tQp2osjgfYE&ab_channel=VJHellstoneLiveVisuals) - the type of background visuals that would be good for this project. Nothing too complex.

+- [Taipei Fashion Week SS22 - Ultra Combos (Only available in Chinese)](https://ultracombos.com/SS22-Taipei-Fashion-Week-SS22) - love the aesthetics of the background for this fashion show.

+

+## Specification

+

+This project will be implemented using **TouchDesigner**, which simplifies the technical implementation. The inputs to the system will be a depth sensor camera, such as a ZED Mini or Kinect, as well as audio signals from the music. These signals will be used to drive the various parameters of the visualization.

+

+The visualizer can be broken down into multiple visual layers that are composited together. Specifically, the project includes four layers:

+

+1. **Background Layer**: this layer will be the background for the final render. It will include procedurally generated patterns that are relatively simple, such that they do not overpower the foreground elements. These patterns will be **audio-reactive**.

+

+2. **Interaction Layer**: this layer will contain procedural elements that are **motion-reactive**, which makes it interactive. For example, it can include a particle system that reacts to the motion of the audience. This should be the primary focus of the art for the audience.

+

+3. **Reprojection Layer (Stretch Goal)**: this layer is optional and will only be implemented if time permits. It will contain a stylized reprojection of the actor. This layer allows a clearer indication of where the actors are. This reprojection layer can be **audio-reactive**.

+

+4. **Post-processing Layer**: this layer is for enhancing the visuals by applying post-processing effects to the previous layers. This layer is crucial in achieving the desired look, feel and mood.

+

+Finally, the composited render will be displayed using a projector.

+

+## Techniques

+

+The project will explore many common procedural techniques, including but not limited to the following:

+

+* **Particle Simulation** - particle systems will be used to add interactivity to the scene and/or as a decorative element. These systems will be driven by custom forces that are guided by noise functions and input signals. TouchDesigner's GPU-based particle systems will be used in order to meet the real-time requirement.

+* **Procedural Patterns** - procedural patterns will be generated using noise and toolbox functions along with basic geometric shapes. The idea is to generate a simple audio-reactive background that complements the main interactive layer.

+* **Optical Flow** - optical flow is a common technique used in TouchDesigner with a camera to affect the image output. The motion between frames captured from the camera will be converted to velocity signals that can drive other parameters in the scene.

+* **Noise and Toolbox Functions will be used everywhere!**

+* **Post-processing Techniques** - bloom effect, blur, feedback, distortion and edge detection will be experimented with to enhance the visuals.

+* **Coloring** - a specific color palette will be selected that best describes the theme of the project. This is all about making it look pretty!

+
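The particle technique listed above can be sketched outside TouchDesigner in plain Python. This is only an illustrative CPU sketch under stated assumptions, not the GPU particle network used in the project: `Particle`, `pseudo_noise`, `step`, and the `flow` callback are hypothetical names, and the sine-based `pseudo_noise` merely stands in for a real noise function.

```python
import math
from dataclasses import dataclass


@dataclass
class Particle:
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0


def pseudo_noise(x, y, t):
    """Cheap smooth stand-in for a noise function; returns a value in [-1, 1]."""
    return math.sin(1.7 * x + t) * math.cos(2.3 * y - 0.5 * t)


def step(particles, flow, t, dt=1.0 / 30.0, noise_gain=0.2, drag=0.9):
    """Advance all particles by one frame.

    flow(x, y) -> (fx, fy) is a hypothetical callback that returns the input
    signal (for example, optical flow) sampled at a position in the unit square.
    """
    for p in particles:
        fx, fy = flow(p.x, p.y)
        # Force = input signal plus a small noise-driven drift.
        ax = fx + noise_gain * pseudo_noise(p.x, p.y, t)
        ay = fy + noise_gain * pseudo_noise(p.y, p.x, t + 10.0)
        # Update velocity first, then apply drag so particles settle
        # once the input motion stops.
        p.vx = (p.vx + ax * dt) * drag
        p.vy = (p.vy + ay * dt) * drag
        # Integrate position and wrap it to stay inside the unit square.
        p.x = (p.x + p.vx * dt) % 1.0
        p.y = (p.y + p.vy * dt) % 1.0
    return particles
```

Calling `step(particles, flow, t)` once per frame (here assumed to be 30 fps, matching the minimum frame rate in the goals) pushes the particles along whatever motion the `flow` callback reports, with the noise term adding some organic drift.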

+## Design

+

+

+

+## Timeline

+

+### Week 1 (11/09-11/16)

+* Establish the color tone

+* Curate the playlist to go with the visualizer

+* Source the required hardware

+* Create a simple audio-reactive pattern for the background layer

+* Create a simple motion-reactive visual using the webcam for the interaction layer

+

+### Week 2 (11/16-11/23)

+* Fine tune the shape for the background layer

+* Connect the depth sensor to the interaction layer and finalize the experience

+* Add in post-processing layer

+

+*(Will not be working from 11/23-11/28)*

+

+### Week 3 (11/28-12/05)

+* If time permits, implement a simple reprojection layer

+* Color grading and parameter tuning

+* Install the project somewhere on campus

+

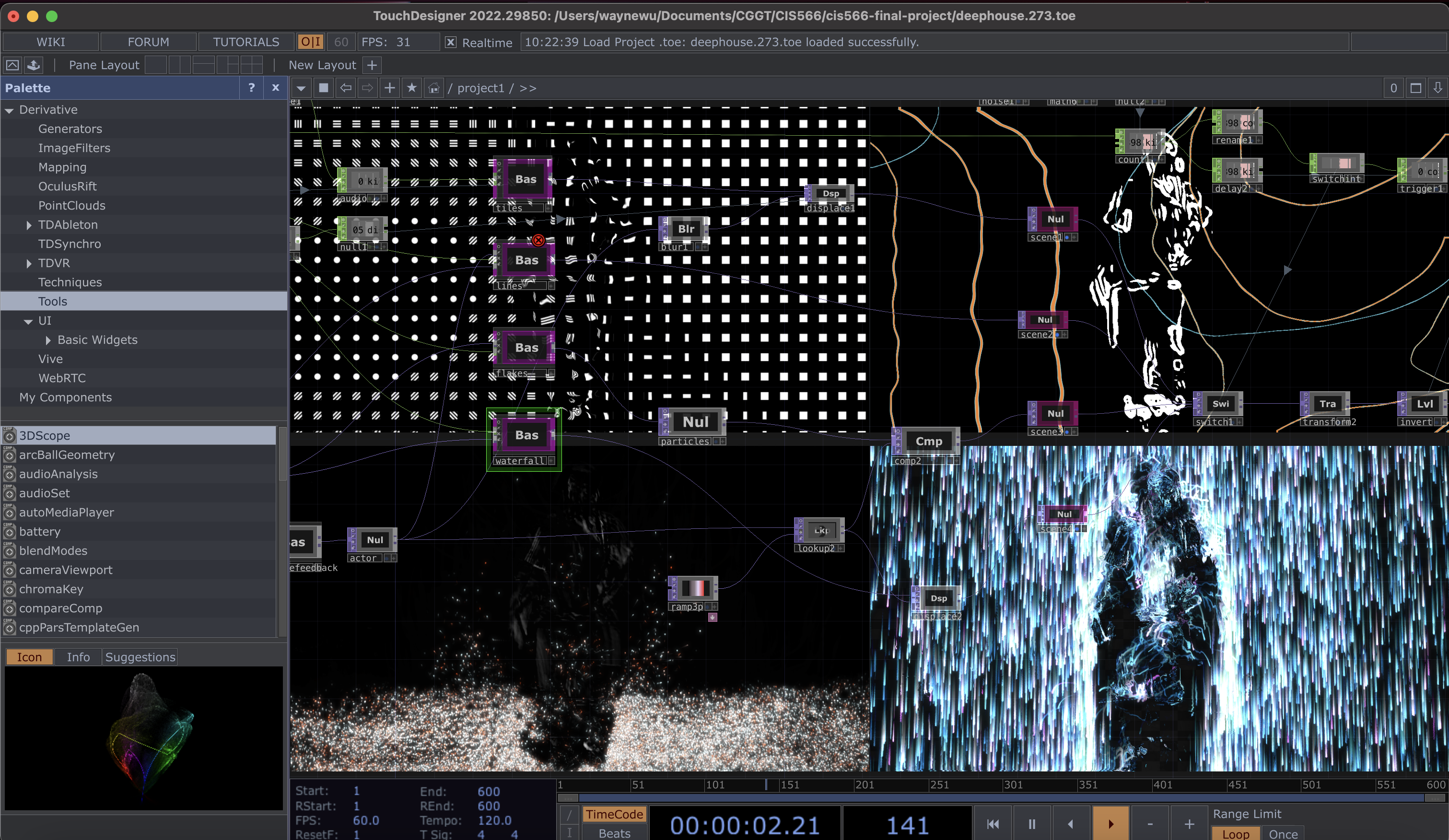

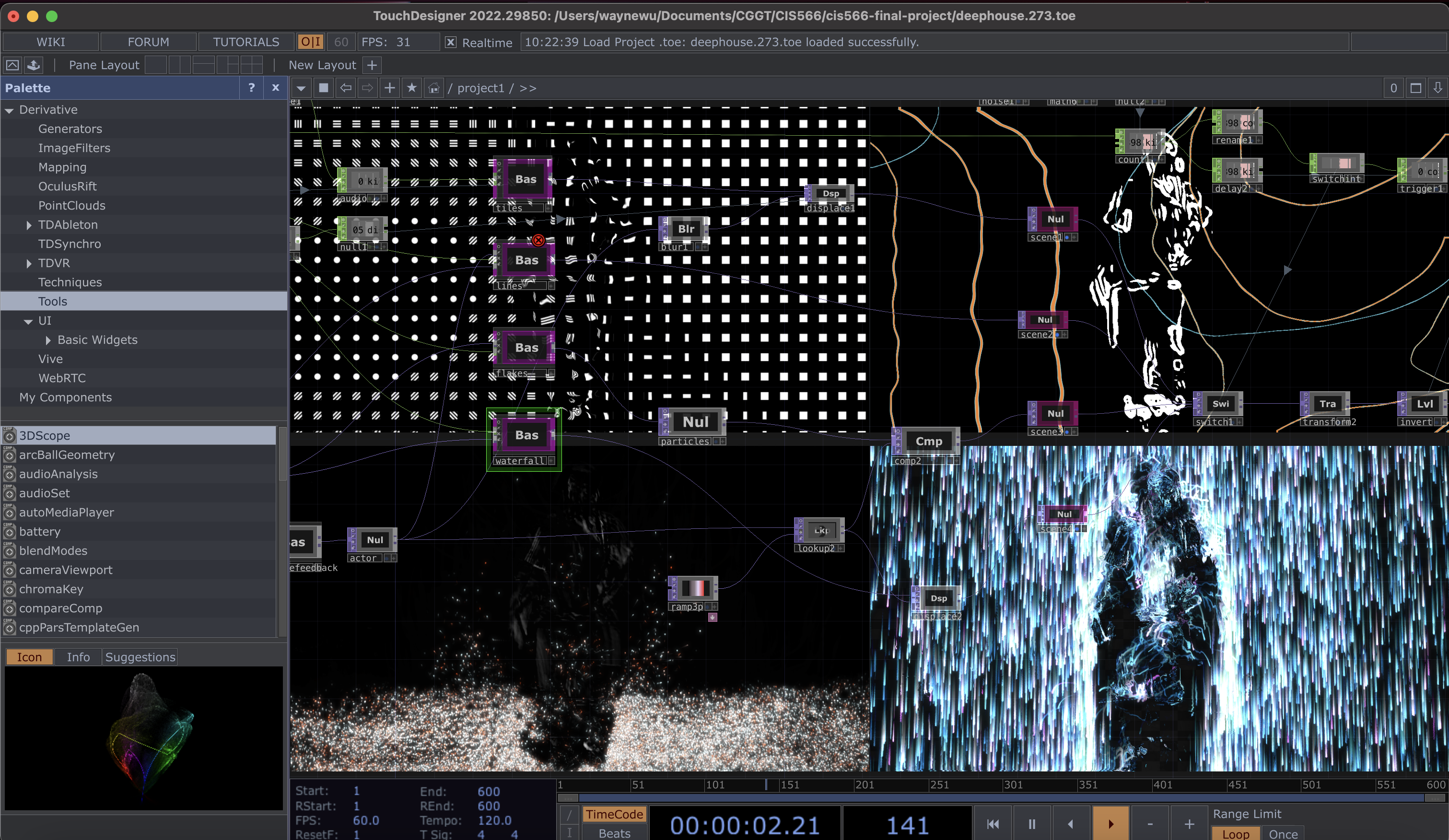

+# Milestone 1 Update

+

+For Milestone 1, I experimented with various procedural patterns in order to find one that works best with the vibe of the music as the background layer. Below are some of the patterns that I was able to create following Bileam Tschepe's awesome tutorials. These patterns are currently all just black and white. The color and composition mode will be determined in the next milestone.

+

+| *[Tile Pattern](https://www.youtube.com/watch?v=gXUWcYZ8hqQ&ab_channel=bileamtschepe%28elekktronaut%29)* | *[Texture Instancing](https://www.youtube.com/watch?v=uFFXUPP0cyg&ab_channel=bileamtschepe%28elekktronaut%29)* |

+| ------------- | ------------- |

+|  |  |

+

+| *[Texture Feedback](https://www.youtube.com/watch?v=NMvx_icZUhY&t=266s&ab_channel=bileamtschepe%28elekktronaut%29)* | *[Line Feedback](https://www.youtube.com/watch?v=zCNREVDLVo8&ab_channel=bileamtschepe%28elekktronaut%29)* |

+| ------------- | ------------- |

+|  |  |

+

+Additionally, I tested the optical flow tool inside TouchDesigner by feeding in a [house dance video](https://www.youtube.com/watch?v=VEE5qqDPVGY&ab_channel=JardySantiago). This particular video works well because the camera is static, and the framing of the actor is similar to how I would set up my camera sensor. In theory, I should be able to swap the footage for a live camera input later on and everything should keep working.

+

+By processing the optical flow data using a feedback loop, we can get a smoothly motion-blurred silhouette of the actor. The optical flow is then used to drive a simple particle system, as shown below. The result is very promising, since I may not need a depth sensor camera after all and could drive everything using just a webcam.

+

+

+
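The feedback idea can be illustrated with a small standalone Python sketch. It is an approximation under assumptions rather than the TouchDesigner network itself: frames are tiny grayscale grids, motion is estimated by plain frame differencing instead of true optical flow, and `frame_difference`, `feedback`, and `motion_trail` are hypothetical helper names.

```python
def frame_difference(prev, curr):
    """Per-pixel absolute difference: a crude motion magnitude, not true optical flow."""
    return [
        [abs(c - p) for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def feedback(accum, motion, decay=0.9):
    """Keep the brighter of the new motion and the decayed previous buffer."""
    return [
        [max(m, a * decay) for a, m in zip(accum_row, motion_row)]
        for accum_row, motion_row in zip(accum, motion)
    ]


def motion_trail(frames, decay=0.9):
    """Run the feedback loop over a sequence of frames and return the final buffer."""
    height, width = len(frames[0]), len(frames[0][0])
    accum = [[0.0] * width for _ in range(height)]
    for prev, curr in zip(frames, frames[1:]):
        accum = feedback(accum, frame_difference(prev, curr), decay)
    return accum


# A bright pixel moving left to right across a 1x4 frame.
frames = [
    [[1.0, 0.0, 0.0, 0.0]],
    [[0.0, 1.0, 0.0, 0.0]],
    [[0.0, 0.0, 1.0, 0.0]],
]
trail = motion_trail(frames)
```

Pixels that moved recently stay bright while older motion fades by `decay` each frame, which is what produces the smeared, motion-blurred silhouette. A lower `decay` gives a shorter trail.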

+Finally, if we composite one of the audio-reactive patterns with the interaction layer, we can get something interesting like this:

+

+

+

+However, I'm still not happy with the overall look. I do have patterns that I can work with, but none of them really speaks to the music. Therefore, I may take or discard elements from each pattern to create something more original and interesting. Moreover, I'm also not satisfied with the color tone. There are some interesting color palettes [here](https://www.shutterstock.com/blog/10-color-palettes-and-patterns-inspired-by-new-york-fashion-week) based on NY fashion week that I will be exploring.

+

+# Milestone 2 Update

+

+For Milestone 2, I did not get as far as I would have liked, but I was able to tweak some of the parameters of the procedural elements and make more of the parameters audio-reactive. I changed the texture instancing pattern to use a larger texture size so that more of the particle system remains visible once the procedural pattern is overlaid. The particles are now spawned at the bottom, closer to the feet, since House dance focuses a lot on lower-body movement. I have brought the reprojection of the actor forward as a foreground element so it can be seen more clearly now. Finally, I have also changed the color tone to be cooler, although I'm still not 100% satisfied with it.

+
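One common way to make a parameter audio-reactive is an envelope follower: smooth the audio level so it rises quickly on a beat and falls slowly afterwards, then map it into the parameter's range. The sketch below is a generic plain-Python illustration, not taken from the project file. `envelope` and `remap` are hypothetical names, and the `attack` and `release` values are arbitrary.

```python
def envelope(levels, attack=0.6, release=0.1):
    """Smooth a sequence of audio levels with separate rise and fall rates."""
    env = 0.0
    out = []
    for level in levels:
        # Move quickly toward louder input, slowly toward quieter input.
        rate = attack if level > env else release
        env += rate * (level - env)
        out.append(env)
    return out


def remap(value, lo, hi):
    """Clamp value to 0..1, then map it into the parameter range [lo, hi]."""
    value = min(1.0, max(0.0, value))
    return lo + (hi - lo) * value


# One loud frame followed by silence: the envelope jumps up, then decays.
smoothed = envelope([1.0, 0.0, 0.0])
# Drive a hypothetical pattern scale between 1.0 and 3.0 from the envelope.
scales = [remap(e, 1.0, 3.0) for e in smoothed]
```

The slow release keeps the visuals from flickering between beats, while the fast attack keeps them locked to the kick.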

+Here's a video of the current state:

+

+https://user-images.githubusercontent.com/77313916/204447443-830f3ccc-7921-4b64-8074-61e143c864df.mov

+

+I was not able to test it with a depth sensor or projector because I could not acquire them in time. However, I will be getting the ZED mini this week and will rent a projector from the library to test the setup.

+

+# Final Version Update

+

+For the final version of the project, I have separated out the procedural patterns as individual scenes instead of compositing them all together. This allows the audience interactions to be more visible, while reducing the overall complexity of the scene, which was previously quite overwhelming. The four scenes are: flakes, lines, tiles, and waterfall.

+

+# Live Installation

+

+For the live installation, I've set up the projector and ZED mini on a desk and projected the visuals on a plain wall in our lab. Since the space is limited, the projection is roughly 3.2 m x 1.8 m. Ideally, it could be scaled up even further.

+

+

+

+## Flakes

+

+

+

+## Lines

+

+

+

+## Tiles

+

+

+

+## Waterfall

+

+

+

+# [Check out the final live demo here!](https://vimeo.com/manage/videos/778235844)

+

+

+

+

diff --git a/deephouse.toe b/deephouse.toe

new file mode 100644

index 0000000..c498031

Binary files /dev/null and b/deephouse.toe differ

diff --git a/imgs/compose.gif b/imgs/compose.gif

new file mode 100644

index 0000000..79e6f7a

Binary files /dev/null and b/imgs/compose.gif differ

diff --git a/imgs/diagram.png b/imgs/diagram.png

new file mode 100644

index 0000000..1478cf8

Binary files /dev/null and b/imgs/diagram.png differ

diff --git a/imgs/opticalflow.gif b/imgs/opticalflow.gif

new file mode 100644

index 0000000..c8d2319

Binary files /dev/null and b/imgs/opticalflow.gif differ

diff --git a/imgs/pattern1.gif b/imgs/pattern1.gif

new file mode 100644

index 0000000..ecea1c2

Binary files /dev/null and b/imgs/pattern1.gif differ

diff --git a/imgs/pattern2.gif b/imgs/pattern2.gif

new file mode 100644

index 0000000..18954d9

Binary files /dev/null and b/imgs/pattern2.gif differ

diff --git a/imgs/pattern3.gif b/imgs/pattern3.gif

new file mode 100644

index 0000000..6956e29

Binary files /dev/null and b/imgs/pattern3.gif differ

diff --git a/imgs/pattern4.gif b/imgs/pattern4.gif

new file mode 100644

index 0000000..c46328b

Binary files /dev/null and b/imgs/pattern4.gif differ

diff --git a/videos/housedancevideo.mp4 b/videos/housedancevideo.mp4

new file mode 100644

index 0000000..7c09ee4

Binary files /dev/null and b/videos/housedancevideo.mp4 differ
