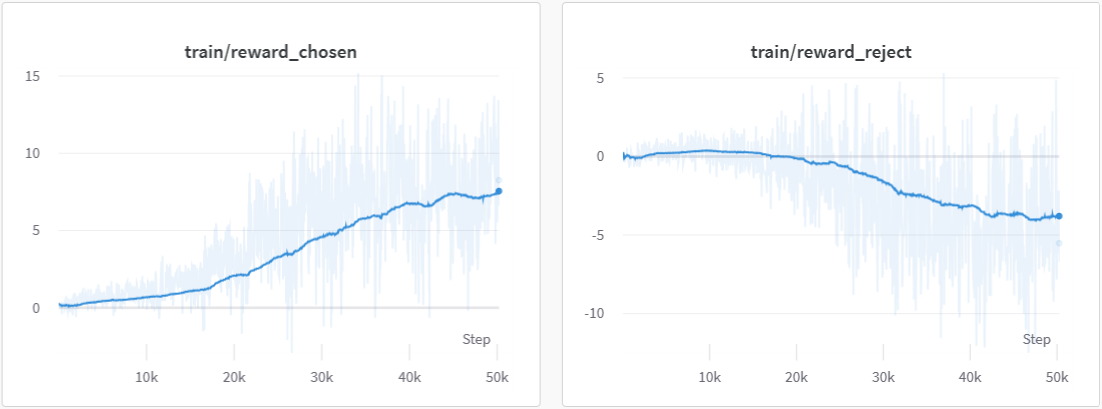

We also train the reward model based on LLaMA-7B, which reaches the ACC of 72.06% after 1 epoch, performing almost the same as Anthropic's best RM.

-

-### Arg List

-

-- `--strategy`: the strategy using for training, choices=['ddp', 'colossalai_gemini', 'colossalai_zero2'], default='colossalai_zero2'

-- `--model`: model type, choices=['gpt2', 'bloom', 'opt', 'llama'], default='bloom'

-- `--pretrain`: pretrain model, type=str, default=None

-- `--model_path`: the path of rm model(if continue to train), type=str, default=None

-- `--save_path`: path to save the model, type=str, default='output'

-- `--need_optim_ckpt`: whether to save optim ckpt, type=bool, default=False

-- `--max_epochs`: max epochs for training, type=int, default=3

-- `--dataset`: dataset name, type=str, choices=['Anthropic/hh-rlhf', 'Dahoas/rm-static']

-- `--subset`: subset of the dataset, type=str, default=None

-- `--batch_size`: batch size while training, type=int, default=4

-- `--lora_rank`: low-rank adaptation matrices rank, type=int, default=0

-- `--loss_func`: which kind of loss function, choices=['log_sig', 'log_exp']

-- `--max_len`: max sentence length for generation, type=int, default=512

-

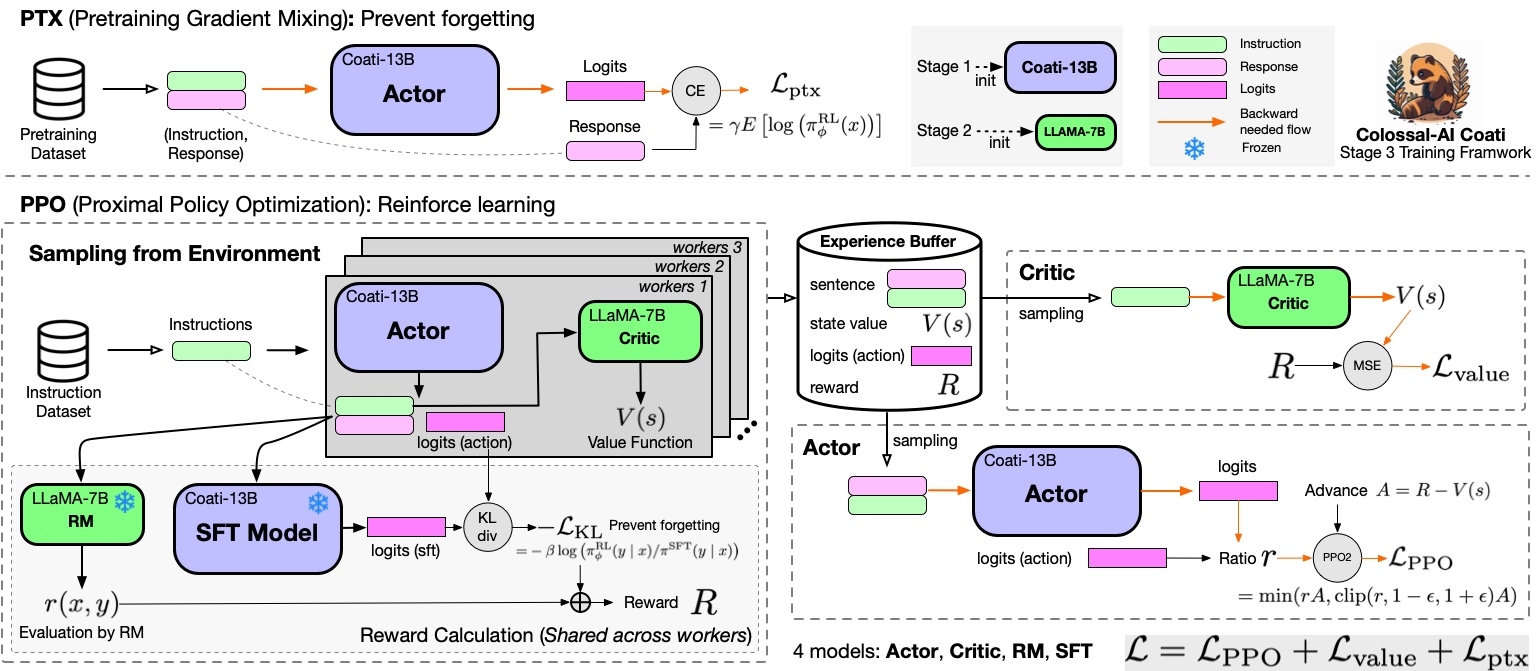

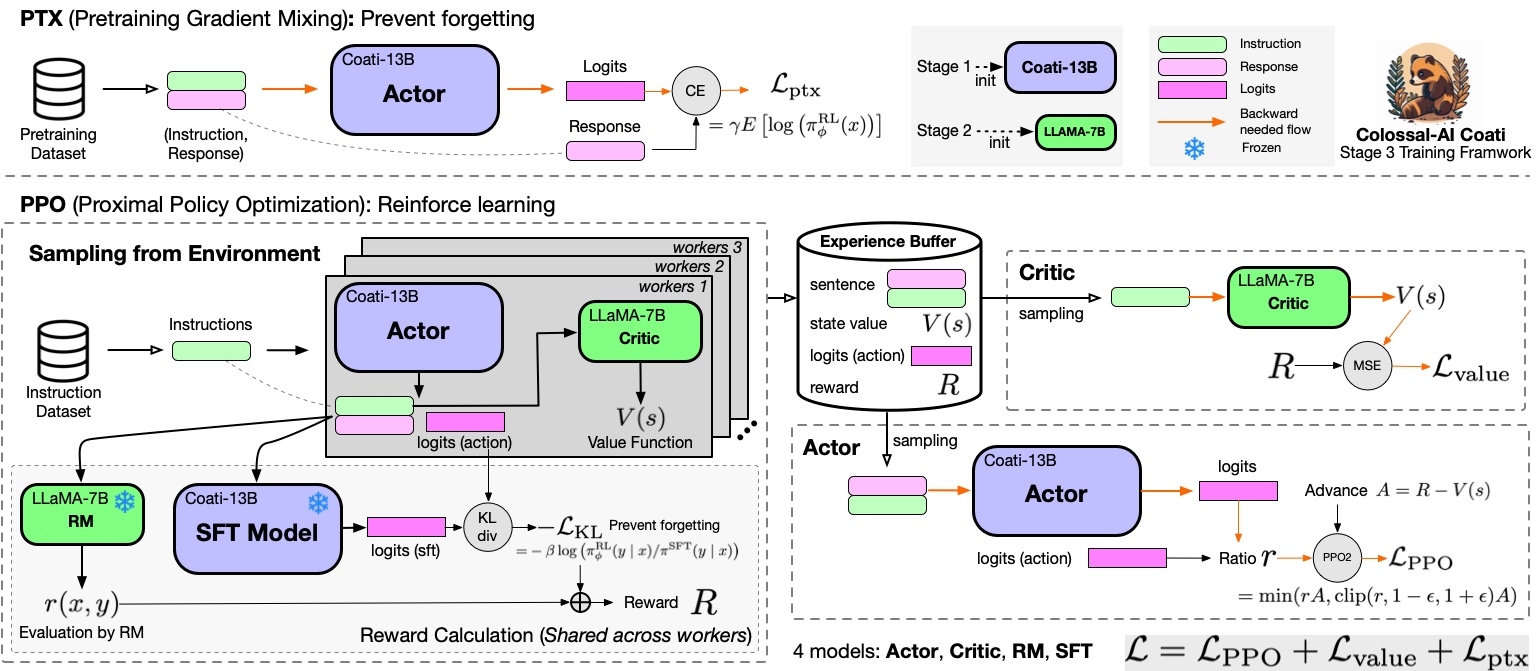

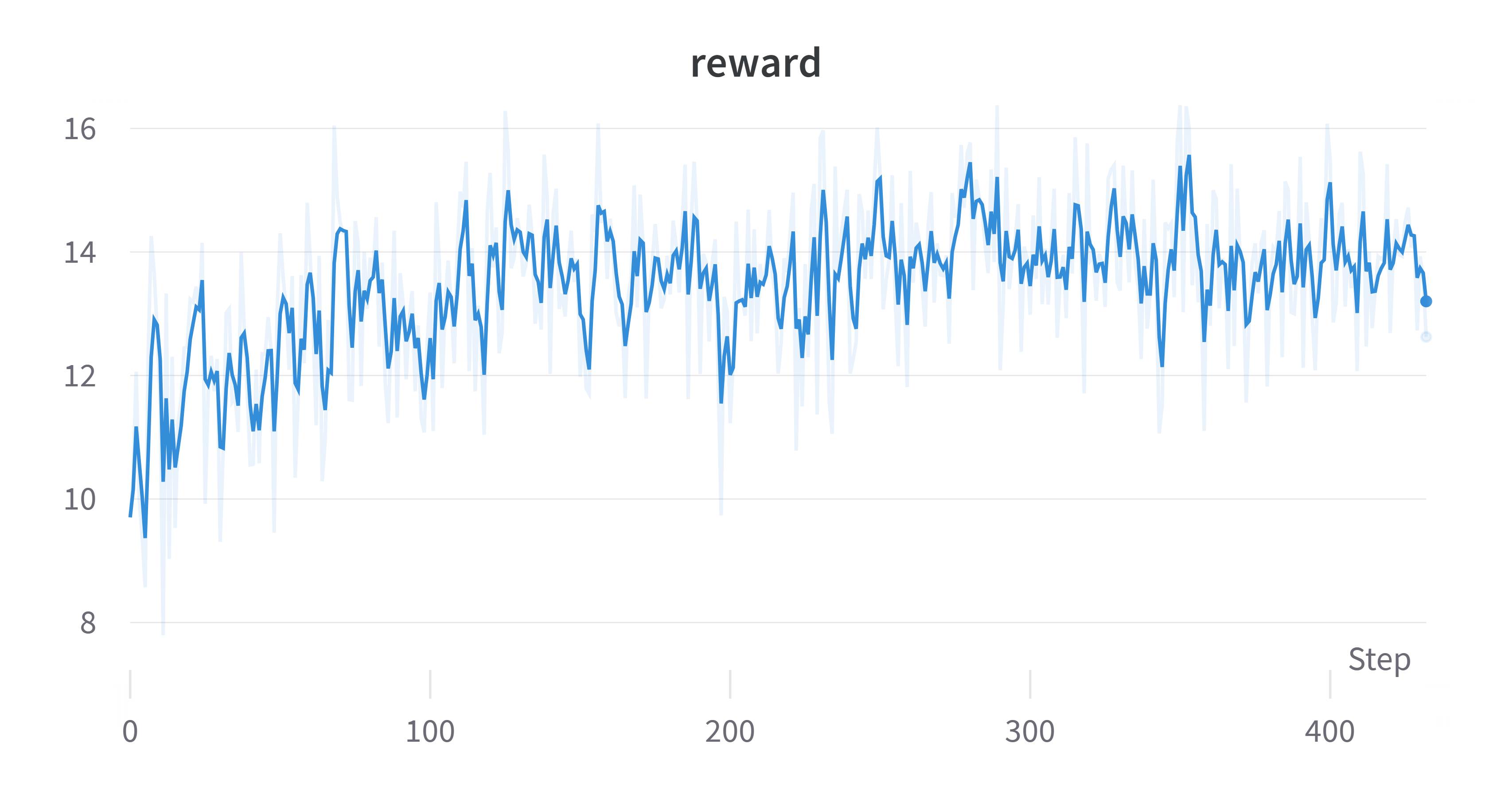

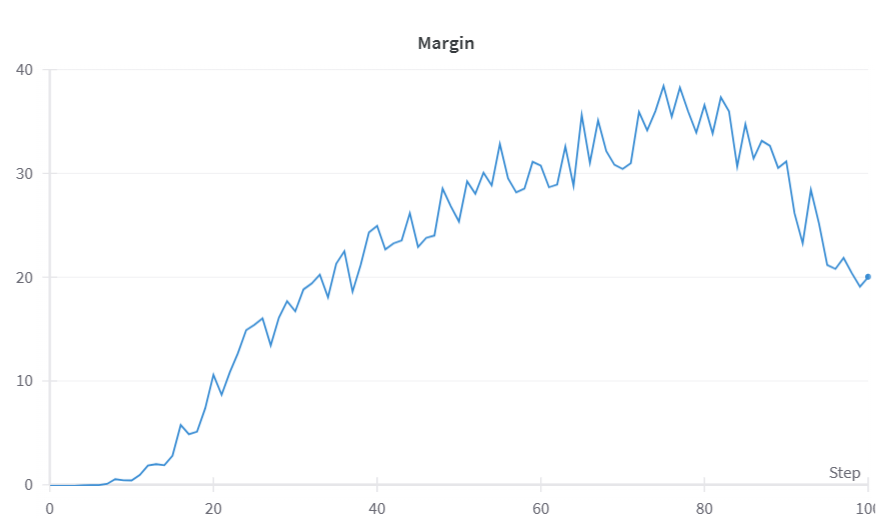

-## Stage3 - Training model using prompts with RL

-

-Stage3 uses reinforcement learning algorithm, which is the most complex part of the training process, as shown below:

-

-

- -

-

-

-You can run the `examples/train_prompts.sh` to start PPO training.

-

-You can also use the cmd following to start PPO training.

-[[Stage3 tutorial video]](https://www.youtube.com/watch?v=Z8wwSHxPL9g)

-

-```bash

-torchrun --standalone --nproc_per_node=4 train_prompts.py \

- --pretrain "/path/to/LLaMa-7B/" \

- --model 'llama' \

- --strategy colossalai_zero2 \

- --prompt_dataset /path/to/your/prompt_dataset \

- --pretrain_dataset /path/to/your/pretrain_dataset \

- --rm_pretrain /your/pretrain/rm/definition \

- --rm_path /your/rm/model/path

-```

-

-Prompt dataset: the instruction dataset mentioned in the above figure which includes the instructions, e.g. you can use the [script](https://github.com/hpcaitech/ColossalAI/tree/main/applications/Chat/examples/generate_prompt_dataset.py) which samples `instinwild_en.json` or `instinwild_ch.json` in [InstructionWild](https://github.com/XueFuzhao/InstructionWild/tree/main/data#instructwild-data) to generate the prompt dataset.

-Pretrain dataset: the pretrain dataset including the instruction and corresponding response, e.g. you can use the [InstructWild Data](https://github.com/XueFuzhao/InstructionWild/tree/main/data) in stage 1 supervised instructs tuning.

-

-**Note**: the required datasets follow the following format,

-

-- `pretrain dataset`

-

- ```json

- [

- {

- "instruction": "Provide a list of the top 10 most popular mobile games in Asia",

- "input": "",

- "output": "The top 10 most popular mobile games in Asia are:\n1) PUBG Mobile\n2) Pokemon Go\n3) Candy Crush Saga\n4) Free Fire\n5) Clash of Clans\n6) Mario Kart Tour\n7) Arena of Valor\n8) Fantasy Westward Journey\n9) Subway Surfers\n10) ARK Survival Evolved",

- "id": 0

- },

- ...

- ]

- ```

-

-- `prompt dataset`

-

- ```json

- [

- {

- "instruction": "Edit this paragraph to make it more concise: \"Yesterday, I went to the store and bought some things. Then, I came home and put them away. After that, I went for a walk and met some friends.\"",

- "id": 0

- },

- {

- "instruction": "Write a descriptive paragraph about a memorable vacation you went on",

- "id": 1

- },

- ...

- ]

- ```

-

-### Arg List

-

-- `--strategy`: the strategy using for training, choices=['ddp', 'colossalai_gemini', 'colossalai_zero2'], default='colossalai_zero2'

-- `--model`: model type of actor, choices=['gpt2', 'bloom', 'opt', 'llama'], default='bloom'

-- `--pretrain`: pretrain model, type=str, default=None

-- `--rm_model`: reward model type, type=str, choices=['gpt2', 'bloom', 'opt', 'llama'], default=None

-- `--rm_pretrain`: pretrain model for reward model, type=str, default=None

-- `--rm_path`: the path of rm model, type=str, default=None

-- `--save_path`: path to save the model, type=str, default='output'

-- `--prompt_dataset`: path of the prompt dataset, type=str, default=None

-- `--pretrain_dataset`: path of the ptx dataset, type=str, default=None

-- `--need_optim_ckpt`: whether to save optim ckpt, type=bool, default=False

-- `--num_episodes`: num of episodes for training, type=int, default=10

-- `--num_update_steps`: number of steps to update policy per episode, type=int

-- `--num_collect_steps`: number of steps to collect experience per episode, type=int

-- `--train_batch_size`: batch size while training, type=int, default=8

-- `--ptx_batch_size`: batch size to compute ptx loss, type=int, default=1

-- `--experience_batch_size`: batch size to make experience, type=int, default=8

-- `--lora_rank`: low-rank adaptation matrices rank, type=int, default=0

-- `--kl_coef`: kl_coef using for computing reward, type=float, default=0.1

-- `--ptx_coef`: ptx_coef using for computing policy loss, type=float, default=0.9

-

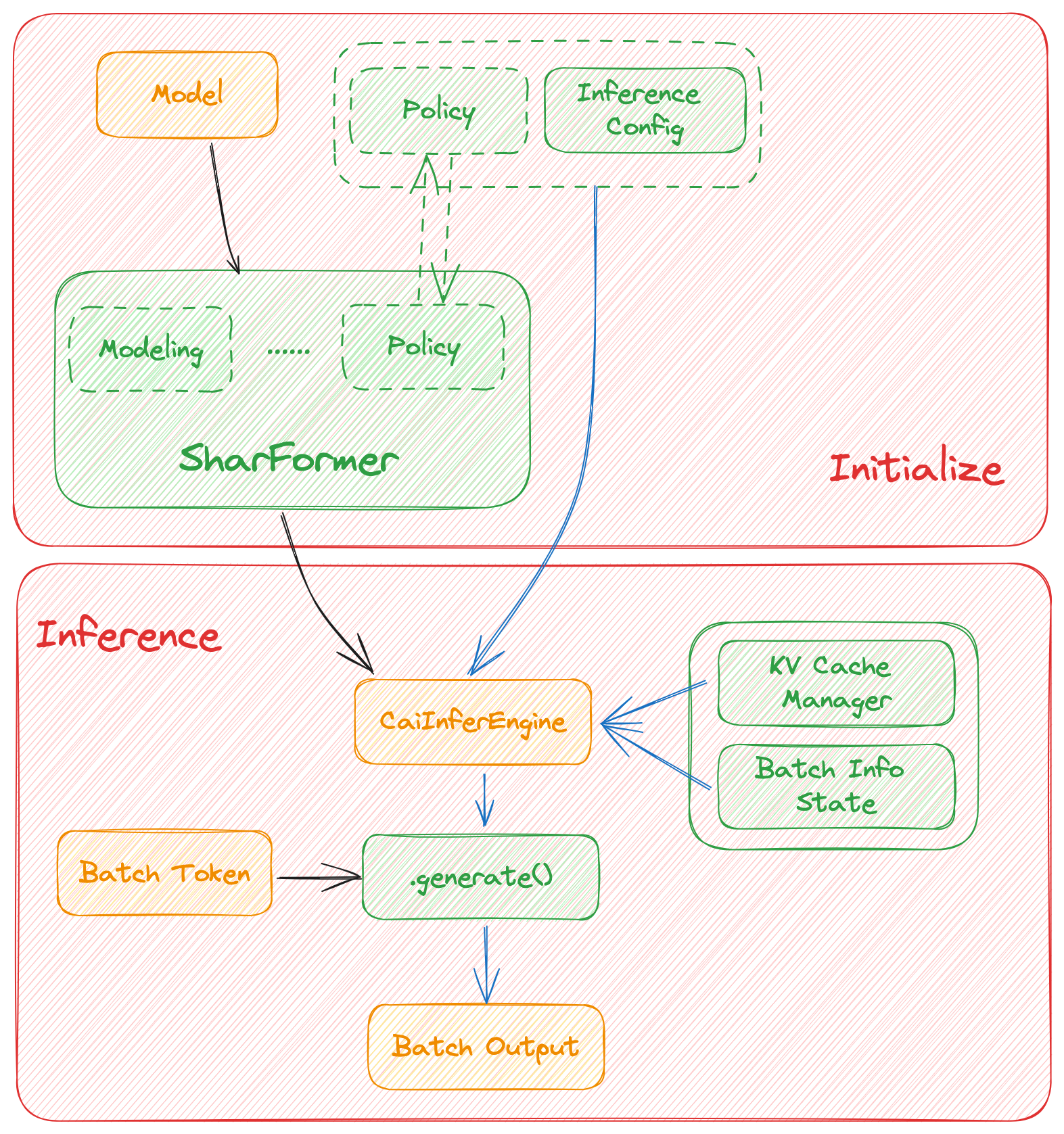

-## Inference example - After Stage3

-

-We support different inference options, including int8 and int4 quantization.

-For details, see [`inference/`](https://github.com/hpcaitech/ColossalAI/tree/main/applications/Chat/inference).

-

-## Attention

-

-The examples are demos for the whole training process.You need to change the hyper-parameters to reach great performance.

-

-#### data

-

-- [x] [rm-static](https://huggingface.co/datasets/Dahoas/rm-static)

-- [x] [hh-rlhf](https://huggingface.co/datasets/Anthropic/hh-rlhf)

-- [ ] [openai/summarize_from_feedback](https://huggingface.co/datasets/openai/summarize_from_feedback)

-- [ ] [openai/webgpt_comparisons](https://huggingface.co/datasets/openai/webgpt_comparisons)

-- [ ] [Dahoas/instruct-synthetic-prompt-responses](https://huggingface.co/datasets/Dahoas/instruct-synthetic-prompt-responses)

-

-## Support Model

-

-### GPT

-

-- [x] GPT2-S (s)

-- [x] GPT2-M (m)

-- [x] GPT2-L (l)

-- [x] GPT2-XL (xl)

-- [x] GPT2-4B (4b)

-- [ ] GPT2-6B (6b)

-

-### BLOOM

-

-- [x] [BLOOM-560m](https://huggingface.co/bigscience/bloom-560m)

-- [x] [BLOOM-1b1](https://huggingface.co/bigscience/bloom-1b1)

-- [x] [BLOOM-3b](https://huggingface.co/bigscience/bloom-3b)

-- [x] [BLOOM-7b](https://huggingface.co/bigscience/bloom-7b1)

-- [ ] [BLOOM-175b](https://huggingface.co/bigscience/bloom)

-

-### OPT

-

-- [x] [OPT-125M](https://huggingface.co/facebook/opt-125m)

-- [x] [OPT-350M](https://huggingface.co/facebook/opt-350m)

-- [x] [OPT-1.3B](https://huggingface.co/facebook/opt-1.3b)

-- [x] [OPT-2.7B](https://huggingface.co/facebook/opt-2.7b)

-- [x] [OPT-6.7B](https://huggingface.co/facebook/opt-6.7b)

-- [ ] [OPT-13B](https://huggingface.co/facebook/opt-13b)

-- [ ] [OPT-30B](https://huggingface.co/facebook/opt-30b)

-

-### [LLaMA](https://github.com/facebookresearch/llama/blob/main/MODEL_CARD.md)

-

-- [x] LLaMA-7B

-- [x] LLaMA-13B

-- [ ] LLaMA-33B

-- [ ] LLaMA-65B

-

-## Add your own models

-

-If you want to support your own model in Coati, please refer the pull request for RoBERTa support as an example --[[chatgpt] add pre-trained model RoBERTa for RLHF stage 2 & 3](https://github.com/hpcaitech/ColossalAI/pull/3223), and submit a PR to us.

-

-You should complete the implementation of four model classes, including Reward model, Critic model, LM model, Actor model

-

-here are some example code for a NewModel named `Coati`.

-if it is supported in huggingface [transformers](https://github.com/huggingface/transformers), you can load it by `from_pretrained`, o

-r you can build your own model by yourself.

-

-### Actor model

-

-```python

-from ..base import Actor

-from transformers.models.coati import CoatiModel

-

-class CoatiActor(Actor):

- def __init__(self,

- pretrained: Optional[str] = None,

- checkpoint: bool = False,

- lora_rank: int = 0,

- lora_train_bias: str = 'none') -> None:

- if pretrained is not None:

- model = CoatiModel.from_pretrained(pretrained)

- else:

- model = build_model() # load your own model if it is not support in transformers

-

- super().__init__(model, lora_rank, lora_train_bias)

-```

-

-### Reward model

-

-```python

-from ..base import RewardModel

-from transformers.models.coati import CoatiModel

-

-class CoatiRM(RewardModel):

-

- def __init__(self,

- pretrained: Optional[str] = None,

- checkpoint: bool = False,

- lora_rank: int = 0,

- lora_train_bias: str = 'none') -> None:

- if pretrained is not None:

- model = CoatiModel.from_pretrained(pretrained)

- else:

- model = build_model() # load your own model if it is not support in transformers

-

- value_head = nn.Linear(model.config.n_embd, 1)

- value_head.weight.data.normal_(mean=0.0, std=1 / (model.config.n_embd + 1))

- super().__init__(model, value_head, lora_rank, lora_train_bias)

-```

-

-### Critic model

-

-```python

-from ..base import Critic

-from transformers.models.coati import CoatiModel

-

-class CoatiCritic(Critic):

- def __init__(self,

- pretrained: Optional[str] = None,

- checkpoint: bool = False,

- lora_rank: int = 0,

- lora_train_bias: str = 'none') -> None:

- if pretrained is not None:

- model = CoatiModel.from_pretrained(pretrained)

- else:

- model = build_model() # load your own model if it is not support in transformers

-

- value_head = nn.Linear(model.config.n_embd, 1)

- value_head.weight.data.normal_(mean=0.0, std=1 / (model.config.n_embd + 1))

- super().__init__(model, value_head, lora_rank, lora_train_bias)

-```

diff --git a/applications/Chat/examples/download_model.py b/applications/Chat/examples/download_model.py

deleted file mode 100644

index ec3482b5f789..000000000000

--- a/applications/Chat/examples/download_model.py

+++ /dev/null

@@ -1,79 +0,0 @@

-import argparse

-import dataclasses

-import os

-import parser

-from typing import List

-

-import tqdm

-from coati.models.bloom import BLOOMRM, BLOOMActor, BLOOMCritic

-from coati.models.gpt import GPTRM, GPTActor, GPTCritic

-from coati.models.opt import OPTRM, OPTActor, OPTCritic

-from huggingface_hub import hf_hub_download, snapshot_download

-from transformers import AutoConfig, AutoTokenizer, BloomConfig, BloomTokenizerFast, GPT2Config, GPT2Tokenizer

-

-

-@dataclasses.dataclass

-class HFRepoFiles:

- repo_id: str

- files: List[str]

-

- def download(self, dir_path: str):

- for file in self.files:

- file_path = hf_hub_download(self.repo_id, file, local_dir=dir_path)

-

- def download_all(self):

- snapshot_download(self.repo_id)

-

-

-def test_init(model: str, dir_path: str):

- if model == "gpt2":

- config = GPT2Config.from_pretrained(dir_path)

- actor = GPTActor(config=config)

- critic = GPTCritic(config=config)

- reward_model = GPTRM(config=config)

- GPT2Tokenizer.from_pretrained(dir_path)

- elif model == "bloom":

- config = BloomConfig.from_pretrained(dir_path)

- actor = BLOOMActor(config=config)

- critic = BLOOMCritic(config=config)

- reward_model = BLOOMRM(config=config)

- BloomTokenizerFast.from_pretrained(dir_path)

- elif model == "opt":

- config = AutoConfig.from_pretrained(dir_path)

- actor = OPTActor(config=config)

- critic = OPTCritic(config=config)

- reward_model = OPTRM(config=config)

- AutoTokenizer.from_pretrained(dir_path)

- else:

- raise NotImplementedError(f"Model {model} not implemented")

-

-

-if __name__ == "__main__":

- parser = argparse.ArgumentParser()

- parser.add_argument("--model-dir", type=str, default="test_models")

- parser.add_argument("--config-only", default=False, action="store_true")

- args = parser.parse_args()

-

- if os.path.exists(args.model_dir):

- print(f"[INFO]: {args.model_dir} already exists")

- exit(0)

-

- repo_list = {

- "gpt2": HFRepoFiles(repo_id="gpt2", files=["config.json", "tokenizer.json", "vocab.json", "merges.txt"]),

- "bloom": HFRepoFiles(

- repo_id="bigscience/bloom-560m", files=["config.json", "tokenizer.json", "tokenizer_config.json"]

- ),

- "opt": HFRepoFiles(

- repo_id="facebook/opt-350m", files=["config.json", "tokenizer_config.json", "vocab.json", "merges.txt"]

- ),

- }

-

- os.mkdir(args.model_dir)

- for model_name in tqdm.tqdm(repo_list):

- dir_path = os.path.join(args.model_dir, model_name)

- if args.config_only:

- os.mkdir(dir_path)

- repo_list[model_name].download(dir_path)

- else:

- repo_list[model_name].download_all()

- test_init(model_name, dir_path)

diff --git a/applications/Chat/examples/generate_conversation_dataset.py b/applications/Chat/examples/generate_conversation_dataset.py

deleted file mode 100644

index 7e03b2d54260..000000000000

--- a/applications/Chat/examples/generate_conversation_dataset.py

+++ /dev/null

@@ -1,82 +0,0 @@

-import argparse

-import json

-

-from datasets import load_dataset

-

-

-def generate_alpaca():

- # We can convert dataset with the same format("instruction", "input", "output") as Alpaca into a one-round conversation.

- conversation_dataset = []

- dataset = load_dataset("tatsu-lab/alpaca", split="train")

-

- instructions = dataset["instruction"]

- inputs = dataset["input"]

- outputs = dataset["output"]

-

- assert len(instructions) == len(inputs) == len(outputs)

-

- for idx in range(len(instructions)):

- human_utterance = instructions[idx] + "\n\n" + inputs[idx] if inputs[idx] else instructions[idx]

- human = {"from": "human", "value": human_utterance}

-

- gpt_utterance = outputs[idx]

- gpt = {"from": "gpt", "value": gpt_utterance}

-

- conversation = dict(type="instruction", language="English", dataset="Alpaca", conversations=[human, gpt])

- conversation_dataset.append(conversation)

-

- return conversation_dataset

-

-

-def generate_sharegpt():

- # ShareGPT data requires less processing.

- conversation_dataset = []

- dataset = load_dataset(

- "anon8231489123/ShareGPT_Vicuna_unfiltered",

- data_files="ShareGPT_V3_unfiltered_cleaned_split_no_imsorry.json",

- split="train",

- )

-

- conversations = dataset["conversations"]

-

- for idx in range(len(conversations)):

- for conv in conversations[idx]:

- # We don't need markdown and text value.

- del conv["markdown"]

- del conv["text"]

-

- conversation = dict(

- type="conversation", language="Multilingual", dataset="ShareGPT", conversations=conversations[idx]

- )

- conversation_dataset.append(conversation)

-

- return conversation_dataset

-

-

-if __name__ == "__main__":

- parser = argparse.ArgumentParser()

- parser.add_argument(

- "--dataset",

- type=str,

- default="All",

- choices=["Alpaca", "ShareGPT", "All"],

- help="which dataset to convert, All will combine Alpaca and ShareGPT",

- )

- parser.add_argument("--save_path", type=str, default="dataset.json", help="path to save the converted dataset")

- args = parser.parse_args()

-

- conversation_dataset = []

-

- if args.dataset == "Alpaca":

- conversation_dataset.extend(generate_alpaca())

- elif args.dataset == "ShareGPT":

- conversation_dataset.extend(generate_sharegpt())

- else:

- conversation_dataset.extend(generate_alpaca())

- conversation_dataset.extend(generate_sharegpt())

-

- for idx, sample in enumerate(conversation_dataset):

- sample["id"] = idx + 1

-

- with open(args.save_path, mode="w") as f:

- json.dump(conversation_dataset, f, indent=4, default=str, ensure_ascii=False)

diff --git a/applications/Chat/examples/generate_prompt_dataset.py b/applications/Chat/examples/generate_prompt_dataset.py

deleted file mode 100644

index 4eec6feae505..000000000000

--- a/applications/Chat/examples/generate_prompt_dataset.py

+++ /dev/null

@@ -1,27 +0,0 @@

-import argparse

-import json

-import random

-

-random.seed(42)

-

-

-def sample(args):

- with open(args.dataset_path, mode="r") as f:

- dataset_list = json.load(f)

-

- sampled_dataset = [

- {"instruction": sample["instruction"], "id": idx}

- for idx, sample in enumerate(random.sample(dataset_list, args.sample_size))

- ]

-

- with open(args.save_path, mode="w") as f:

- json.dump(sampled_dataset, f, indent=4, default=str, ensure_ascii=False)

-

-

-if __name__ == "__main__":

- parser = argparse.ArgumentParser()

- parser.add_argument("--dataset_path", type=str, default=None, required=True, help="path to the pretrain dataset")

- parser.add_argument("--save_path", type=str, default="prompt.json", help="path to save the prompt dataset")

- parser.add_argument("--sample_size", type=int, default=16384, help="size of the prompt dataset")

- args = parser.parse_args()

- sample(args)

diff --git a/applications/Chat/examples/inference.py b/applications/Chat/examples/inference.py

deleted file mode 100644

index 62e06bf7b3bb..000000000000

--- a/applications/Chat/examples/inference.py

+++ /dev/null

@@ -1,73 +0,0 @@

-import argparse

-

-import torch

-from coati.models.bloom import BLOOMActor

-from coati.models.generation import generate

-from coati.models.gpt import GPTActor

-from coati.models.llama import LlamaActor

-from coati.models.opt import OPTActor

-from transformers import AutoTokenizer, BloomTokenizerFast, GPT2Tokenizer, LlamaTokenizer

-

-

-def eval(args):

- # configure model

- if args.model == "gpt2":

- actor = GPTActor(pretrained=args.pretrain)

- elif args.model == "bloom":

- actor = BLOOMActor(pretrained=args.pretrain)

- elif args.model == "opt":

- actor = OPTActor(pretrained=args.pretrain)

- elif args.model == "llama":

- actor = LlamaActor(pretrained=args.pretrain)

- else:

- raise ValueError(f'Unsupported model "{args.model}"')

-

- actor.to(torch.cuda.current_device())

- if args.model_path is not None:

- state_dict = torch.load(args.model_path)

- actor.load_state_dict(state_dict)

-

- # configure tokenizer

- if args.model == "gpt2":

- tokenizer = GPT2Tokenizer.from_pretrained("gpt2")

- tokenizer.pad_token = tokenizer.eos_token

- elif args.model == "bloom":

- tokenizer = BloomTokenizerFast.from_pretrained("bigscience/bloom-560m")

- tokenizer.pad_token = tokenizer.eos_token

- elif args.model == "opt":

- tokenizer = AutoTokenizer.from_pretrained("facebook/opt-350m")

- tokenizer.pad_token = tokenizer.eos_token

- elif args.model == "llama":

- tokenizer = LlamaTokenizer.from_pretrained("hf-internal-testing/llama-tokenizer")

- tokenizer.eos_token = "<\s>"

- tokenizer.pad_token = tokenizer.unk_token

- else:

- raise ValueError(f'Unsupported model "{args.model}"')

-

- actor.eval()

- tokenizer.padding_side = "left"

- input_ids = tokenizer.encode(args.input, return_tensors="pt").to(torch.cuda.current_device())

- outputs = generate(

- actor,

- input_ids,

- tokenizer=tokenizer,

- max_length=args.max_length,

- do_sample=True,

- top_k=50,

- top_p=0.95,

- num_return_sequences=1,

- )

- output = tokenizer.batch_decode(outputs[0], skip_special_tokens=True)

- print(f"[Output]: {''.join(output)}")

-

-

-if __name__ == "__main__":

- parser = argparse.ArgumentParser()

- parser.add_argument("--model", default="gpt2", choices=["gpt2", "bloom", "opt", "llama"])

- # We suggest to use the pretrained model from HuggingFace, use pretrain to configure model

- parser.add_argument("--pretrain", type=str, default=None)

- parser.add_argument("--model_path", type=str, default=None)

- parser.add_argument("--input", type=str, default="Question: How are you ? Answer:")

- parser.add_argument("--max_length", type=int, default=100)

- args = parser.parse_args()

- eval(args)

diff --git a/applications/Chat/examples/train_prompts.py b/applications/Chat/examples/train_prompts.py

deleted file mode 100644

index 8868e278d85e..000000000000

--- a/applications/Chat/examples/train_prompts.py

+++ /dev/null

@@ -1,249 +0,0 @@

-import argparse

-import warnings

-

-import torch

-import torch.distributed as dist

-from coati.dataset import PromptDataset, SupervisedDataset

-from coati.models.bloom import BLOOMRM, BLOOMActor, BLOOMCritic

-from coati.models.gpt import GPTRM, GPTActor, GPTCritic

-from coati.models.llama import LlamaActor, LlamaCritic, LlamaRM

-from coati.models.opt import OPTRM, OPTActor, OPTCritic

-from coati.trainer import PPOTrainer

-from coati.trainer.strategies import DDPStrategy, GeminiStrategy, LowLevelZeroStrategy

-from torch.optim import Adam

-from torch.utils.data import DataLoader

-from torch.utils.data.distributed import DistributedSampler

-from transformers import AutoTokenizer, BloomTokenizerFast, GPT2Tokenizer, LlamaTokenizer

-

-from colossalai.nn.optimizer import HybridAdam

-

-

-def main(args):

- # configure strategy

- if args.strategy == "ddp":

- strategy = DDPStrategy()

- elif args.strategy == "colossalai_gemini":

- strategy = GeminiStrategy(placement_policy="static", initial_scale=2**5)

- elif args.strategy == "colossalai_zero2":

- strategy = LowLevelZeroStrategy(stage=2, placement_policy="cuda")

- else:

- raise ValueError(f'Unsupported strategy "{args.strategy}"')

-

- if args.rm_path is not None:

- warnings.warn("LoRA weights should be merged with the model weights")

- state_dict = torch.load(args.rm_path, map_location="cpu")

-

- if args.lora_rank > 0:

- warnings.warn("Lora is not supported yet.")

- args.lora_rank = 0

-

- with strategy.model_init_context():

- # configure model

- if args.model == "gpt2":

- initial_model = GPTActor(pretrained=args.pretrain)

- elif args.model == "bloom":

- initial_model = BLOOMActor(pretrained=args.pretrain)

- elif args.model == "opt":

- initial_model = OPTActor(pretrained=args.pretrain)

- elif args.model == "llama":

- initial_model = LlamaActor(pretrained=args.pretrain)

- else:

- raise ValueError(f'Unsupported actor model "{args.model}"')

-

- if args.rm_model is None:

- rm_model_name = args.model

- else:

- rm_model_name = args.rm_model

-

- if rm_model_name == "gpt2":

- reward_model = GPTRM(pretrained=args.rm_pretrain, lora_rank=args.lora_rank)

- elif rm_model_name == "bloom":

- reward_model = BLOOMRM(pretrained=args.rm_pretrain, lora_rank=args.lora_rank)

- elif rm_model_name == "opt":

- reward_model = OPTRM(pretrained=args.rm_pretrain, lora_rank=args.lora_rank)

- elif rm_model_name == "llama":

- reward_model = LlamaRM(pretrained=args.rm_pretrain, lora_rank=args.lora_rank)

- else:

- raise ValueError(f'Unsupported reward model "{rm_model_name}"')

-

- if args.rm_path is not None:

- reward_model.load_state_dict(state_dict, strict=False)

-

- initial_model.to(torch.bfloat16).to(torch.cuda.current_device())

- reward_model.to(torch.bfloat16).to(torch.cuda.current_device())

-

- if args.model == "gpt2":

- actor = GPTActor(pretrained=args.pretrain, lora_rank=args.lora_rank)

- elif args.model == "bloom":

- actor = BLOOMActor(pretrained=args.pretrain, lora_rank=args.lora_rank)

- elif args.model == "opt":

- actor = OPTActor(pretrained=args.pretrain, lora_rank=args.lora_rank)

- elif args.model == "llama":

- actor = LlamaActor(pretrained=args.pretrain, lora_rank=args.lora_rank)

- else:

- raise ValueError(f'Unsupported actor model "{args.model}"')

-

- if rm_model_name == "gpt2":

- critic = GPTCritic(pretrained=args.rm_pretrain, lora_rank=args.lora_rank)

- elif rm_model_name == "bloom":

- critic = BLOOMCritic(pretrained=args.rm_pretrain, lora_rank=args.lora_rank)

- elif rm_model_name == "opt":

- critic = OPTCritic(pretrained=args.rm_pretrain, lora_rank=args.lora_rank)

- elif rm_model_name == "llama":

- critic = LlamaCritic(pretrained=args.rm_pretrain, lora_rank=args.lora_rank)

- else:

- raise ValueError(f'Unsupported reward model "{rm_model_name}"')

-

- if args.rm_path is not None:

- critic.load_state_dict(state_dict, strict=False)

- del state_dict

-

- actor.to(torch.bfloat16).to(torch.cuda.current_device())

- critic.to(torch.bfloat16).to(torch.cuda.current_device())

-

- # configure optimizer

- if args.strategy.startswith("colossalai"):

- actor_optim = HybridAdam(actor.parameters(), lr=args.lr)

- critic_optim = HybridAdam(critic.parameters(), lr=args.lr)

- else:

- actor_optim = Adam(actor.parameters(), lr=args.lr)

- critic_optim = Adam(critic.parameters(), lr=args.lr)

-

- # configure tokenizer

- if args.model == "gpt2":

- tokenizer = GPT2Tokenizer.from_pretrained("gpt2" if args.tokenizer is None else args.tokenizer)

- tokenizer.pad_token = tokenizer.eos_token

- elif args.model == "bloom":

- tokenizer = BloomTokenizerFast.from_pretrained(

- "bigscience/bloom-560m" if args.tokenizer is None else args.tokenizer

- )

- tokenizer.pad_token = tokenizer.eos_token

- elif args.model == "opt":

- tokenizer = AutoTokenizer.from_pretrained("facebook/opt-350m" if args.tokenizer is None else args.tokenizer)

- tokenizer.pad_token = tokenizer.eos_token

- elif args.model == "llama":

- tokenizer = LlamaTokenizer.from_pretrained(

- "hf-internal-testing/llama-tokenizer" if args.tokenizer is None else args.tokenizer

- )

- tokenizer.eos_token = "<\s>"

- tokenizer.pad_token = tokenizer.unk_token

- else:

- raise ValueError(f'Unsupported model "{args.model}"')

- # NOTE: generate() requires padding_side to be "left"

- tokenizer.padding_side = "left"

-

- prompt_dataset = PromptDataset(

- tokenizer=tokenizer,

- data_path=args.prompt_dataset,

- max_datasets_size=args.max_datasets_size,

- max_length=args.max_input_len,

- )

- if dist.is_initialized() and dist.get_world_size() > 1:

- prompt_sampler = DistributedSampler(prompt_dataset, shuffle=True, seed=42, drop_last=True)

- else:

- prompt_sampler = None

- prompt_dataloader = DataLoader(

- prompt_dataset, shuffle=(prompt_sampler is None), sampler=prompt_sampler, batch_size=args.experience_batch_size

- )

-

- pretrain_dataset = SupervisedDataset(

- tokenizer=tokenizer,

- data_path=args.pretrain_dataset,

- max_datasets_size=args.max_datasets_size,

- max_length=args.max_input_len,

- )

- if dist.is_initialized() and dist.get_world_size() > 1:

- pretrain_sampler = DistributedSampler(pretrain_dataset, shuffle=True, seed=42, drop_last=True)

- else:

- pretrain_sampler = None

- pretrain_dataloader = DataLoader(

- pretrain_dataset, shuffle=(pretrain_sampler is None), sampler=pretrain_sampler, batch_size=args.ptx_batch_size

- )

-

- # NOTE: For small models like opt-1.3b, reward model and initial model are not required to be parallelized.

- (actor, actor_optim), (critic, critic_optim), reward_model, initial_model = strategy.prepare(

- (actor, actor_optim), (critic, critic_optim), reward_model, initial_model

- )

-

- # configure trainer

- trainer = PPOTrainer(

- strategy,

- actor,

- critic,

- reward_model,

- initial_model,

- actor_optim,

- critic_optim,

- tokenizer=tokenizer,

- kl_coef=args.kl_coef,

- ptx_coef=args.ptx_coef,

- train_batch_size=args.train_batch_size,

- max_length=args.max_seq_len,

- use_cache=True,

- do_sample=True,

- temperature=1.0,

- top_k=50,

- offload_inference_models=args.strategy != "colossalai_gemini",

- )

-

- trainer.fit(

- num_episodes=args.num_episodes,

- num_collect_steps=args.num_collect_steps,

- num_update_steps=args.num_update_steps,

- prompt_dataloader=prompt_dataloader,

- pretrain_dataloader=pretrain_dataloader,

- log_dir=args.log_dir,

- use_wandb=args.use_wandb,

- )

-

- if args.lora_rank > 0 and args.merge_lora_weights:

- from coati.models.lora import LORA_MANAGER

-

- # NOTE: set model to eval to merge LoRA weights

- LORA_MANAGER.merge_weights = True

- actor.eval()

- # save model checkpoint after fitting

- strategy.save_pretrained(actor, path=args.save_path)

- # save optimizer checkpoint on all ranks

- if args.need_optim_ckpt:

- strategy.save_optimizer(

- actor_optim, "actor_optim_checkpoint_prompts_%d.pt" % (torch.cuda.current_device()), only_rank0=False

- )

-

-

-if __name__ == "__main__":

- parser = argparse.ArgumentParser()

- parser.add_argument("--prompt_dataset", type=str, default=None, help="path to the prompt dataset")

- parser.add_argument("--pretrain_dataset", type=str, default=None, help="path to the pretrained dataset")

- parser.add_argument("--max_datasets_size", type=int, default=50000)

- parser.add_argument(

- "--strategy",

- choices=["ddp", "colossalai_gemini", "colossalai_zero2"],

- default="colossalai_zero2",

- help="strategy to use",

- )

- parser.add_argument("--model", default="gpt2", choices=["gpt2", "bloom", "opt", "llama"])

- parser.add_argument("--tokenizer", type=str, default=None)

- parser.add_argument("--pretrain", type=str, default=None)

- parser.add_argument("--rm_model", default=None, choices=["gpt2", "bloom", "opt", "llama"])

- parser.add_argument("--rm_path", type=str, default=None)

- parser.add_argument("--rm_pretrain", type=str, default=None)

- parser.add_argument("--save_path", type=str, default="actor_checkpoint_prompts")

- parser.add_argument("--need_optim_ckpt", type=bool, default=False)

- parser.add_argument("--num_episodes", type=int, default=10)

- parser.add_argument("--num_collect_steps", type=int, default=10)

- parser.add_argument("--num_update_steps", type=int, default=5)

- parser.add_argument("--train_batch_size", type=int, default=8)

- parser.add_argument("--ptx_batch_size", type=int, default=1)

- parser.add_argument("--experience_batch_size", type=int, default=8)

- parser.add_argument("--lora_rank", type=int, default=0, help="low-rank adaptation matrices rank")

- parser.add_argument("--merge_lora_weights", type=bool, default=True)

- parser.add_argument("--lr", type=float, default=1e-7)

- parser.add_argument("--kl_coef", type=float, default=0.1)

- parser.add_argument("--ptx_coef", type=float, default=0.9)

- parser.add_argument("--max_input_len", type=int, default=96)

- parser.add_argument("--max_seq_len", type=int, default=128)

- parser.add_argument("--log_dir", default="logs", type=str)

- parser.add_argument("--use_wandb", default=False, action="store_true")

- args = parser.parse_args()

- main(args)

diff --git a/applications/Chat/examples/train_prompts.sh b/applications/Chat/examples/train_prompts.sh

deleted file mode 100755

index d04c416015b1..000000000000

--- a/applications/Chat/examples/train_prompts.sh

+++ /dev/null

@@ -1,25 +0,0 @@

-set_n_least_used_CUDA_VISIBLE_DEVICES() {

- local n=${1:-"9999"}

- echo "GPU Memory Usage:"

- local FIRST_N_GPU_IDS=$(nvidia-smi --query-gpu=memory.used --format=csv |

- tail -n +2 |

- nl -v 0 |

- tee /dev/tty |

- sort -g -k 2 |

- awk '{print $1}' |

- head -n $n)

- export CUDA_VISIBLE_DEVICES=$(echo $FIRST_N_GPU_IDS | sed 's/ /,/g')

- echo "Now CUDA_VISIBLE_DEVICES is set to:"

- echo "CUDA_VISIBLE_DEVICES=$CUDA_VISIBLE_DEVICES"

-}

-

-set_n_least_used_CUDA_VISIBLE_DEVICES 2

-

-# torchrun --standalone --nproc_per_node=2 train_prompts.py prompts.csv --strategy colossalai_zero2

-

-torchrun --standalone --nproc_per_node=2 train_prompts.py \

- --pretrain_dataset /path/to/data.json \

- --prompt_dataset /path/to/data.json \

- --strategy colossalai_zero2 \

- --num_episodes 1 --num_collect_steps 2 --num_update_steps 1 \

- --train_batch_size 2

diff --git a/applications/Chat/examples/train_reward_model.py b/applications/Chat/examples/train_reward_model.py

deleted file mode 100644

index df6e8b6bdc26..000000000000

--- a/applications/Chat/examples/train_reward_model.py

+++ /dev/null

@@ -1,208 +0,0 @@

-import argparse

-import warnings

-

-import torch

-import torch.distributed as dist

-from coati.dataset import HhRlhfDataset, RmStaticDataset

-from coati.models import LogExpLoss, LogSigLoss

-from coati.models.bloom import BLOOMRM

-from coati.models.gpt import GPTRM

-from coati.models.llama import LlamaRM

-from coati.models.opt import OPTRM

-from coati.trainer import RewardModelTrainer

-from coati.trainer.strategies import DDPStrategy, GeminiStrategy, LowLevelZeroStrategy

-from datasets import load_dataset

-from torch.optim import Adam

-from torch.optim.lr_scheduler import CosineAnnealingLR

-from torch.utils.data import DataLoader

-from torch.utils.data.distributed import DistributedSampler

-from transformers import AutoTokenizer, BloomTokenizerFast, LlamaTokenizer

-from transformers.models.gpt2.tokenization_gpt2 import GPT2Tokenizer

-

-from colossalai.nn.optimizer import HybridAdam

-

-

-def train(args):

- # configure strategy

- if args.strategy == "ddp":

- strategy = DDPStrategy()

- elif args.strategy == "colossalai_gemini":

- strategy = GeminiStrategy(placement_policy="auto")

- elif args.strategy == "colossalai_zero2":

- strategy = LowLevelZeroStrategy(stage=2, placement_policy="cuda")

- else:

- raise ValueError(f'Unsupported strategy "{args.strategy}"')

-

- # configure model

- if args.lora_rank > 0:

- warnings.warn("Lora is not supported yet.")

- args.lora_rank = 0

-

- with strategy.model_init_context():

- if args.model == "bloom":

- model = BLOOMRM(pretrained=args.pretrain, lora_rank=args.lora_rank)

- elif args.model == "opt":

- model = OPTRM(pretrained=args.pretrain, lora_rank=args.lora_rank)

- elif args.model == "gpt2":

- model = GPTRM(pretrained=args.pretrain, lora_rank=args.lora_rank)

- elif args.model == "llama":

- model = LlamaRM(pretrained=args.pretrain, lora_rank=args.lora_rank)

- else:

- raise ValueError(f'Unsupported model "{args.model}"')

-

- model.to(torch.bfloat16).to(torch.cuda.current_device())

-

- if args.model_path is not None:

- state_dict = torch.load(args.model_path)

- model.load_state_dict(state_dict)

-

- # configure tokenizer

- if args.model == "gpt2":

- tokenizer = GPT2Tokenizer.from_pretrained("gpt2" if args.tokenizer is None else args.tokenizer)

- tokenizer.pad_token = tokenizer.eos_token

- elif args.model == "bloom":

- tokenizer = BloomTokenizerFast.from_pretrained(

- "bigscience/bloom-560m" if args.tokenizer is None else args.tokenizer

- )

- tokenizer.pad_token = tokenizer.eos_token

- elif args.model == "opt":

- tokenizer = AutoTokenizer.from_pretrained("facebook/opt-350m" if args.tokenizer is None else args.tokenizer)

- tokenizer.pad_token = tokenizer.eos_token

- elif args.model == "llama":

- tokenizer = LlamaTokenizer.from_pretrained(

- "hf-internal-testing/llama-tokenizer" if args.tokenizer is None else args.tokenizer

- )

- tokenizer.eos_token = "<\s>"

- tokenizer.pad_token = tokenizer.unk_token

- else:

- raise ValueError(f'Unsupported model "{args.model}"')

-

- # configure optimizer

- if args.strategy.startswith("colossalai"):

- optim = HybridAdam(model.parameters(), lr=args.lr)

- else:

- optim = Adam(model.parameters(), lr=args.lr)

-

- # configure loss function

- if args.loss_fn == "log_sig":

- loss_fn = LogSigLoss()

- elif args.loss_fn == "log_exp":

- loss_fn = LogExpLoss()

- else:

- raise ValueError(f'Unsupported loss function "{args.loss_fn}"')

-

- # prepare for data and dataset

- if args.subset is not None:

- data = load_dataset(args.dataset, data_dir=args.subset)

- else:

- data = load_dataset(args.dataset)

-

- train_data = data["train"].select(range(min(args.max_datasets_size, len(data["train"]))))

- eval_data = data["test"].select(range(min(args.max_datasets_size, len(data["test"]))))

-

- if args.dataset == "Dahoas/rm-static":

- train_dataset = RmStaticDataset(train_data, tokenizer, args.max_len)

- eval_dataset = RmStaticDataset(eval_data, tokenizer, args.max_len)

- elif args.dataset == "Anthropic/hh-rlhf":

- train_dataset = HhRlhfDataset(train_data, tokenizer, args.max_len)

- eval_dataset = HhRlhfDataset(eval_data, tokenizer, args.max_len)

- else:

- raise ValueError(f'Unsupported dataset "{args.dataset}"')

-

- if dist.is_initialized() and dist.get_world_size() > 1:

- train_sampler = DistributedSampler(

- train_dataset,

- shuffle=True,

- seed=42,

- drop_last=True,

- rank=dist.get_rank(),

- num_replicas=dist.get_world_size(),

- )

- eval_sampler = DistributedSampler(

- eval_dataset,

- shuffle=True,

- seed=42,

- drop_last=True,

- rank=dist.get_rank(),

- num_replicas=dist.get_world_size(),

- )

- else:

- train_sampler = None

- eval_sampler = None

-

- train_dataloader = DataLoader(

- train_dataset,

- shuffle=(train_sampler is None),

- sampler=train_sampler,

- batch_size=args.batch_size,

- pin_memory=True,

- )

-

- eval_dataloader = DataLoader(

- eval_dataset, shuffle=(eval_sampler is None), sampler=eval_sampler, batch_size=args.batch_size, pin_memory=True

- )

-

- lr_scheduler = CosineAnnealingLR(optim, train_dataloader.__len__() // 100)

- strategy_dict = strategy.prepare(dict(model=model, optimizer=optim, lr_scheduler=lr_scheduler))

- model = strategy_dict["model"]

- optim = strategy_dict["optimizer"]

- lr_scheduler = strategy_dict["lr_scheduler"]

- trainer = RewardModelTrainer(

- model=model,

- strategy=strategy,

- optim=optim,

- lr_scheduler=lr_scheduler,

- loss_fn=loss_fn,

- max_epochs=args.max_epochs,

- )

-

- trainer.fit(

- train_dataloader=train_dataloader,

- eval_dataloader=eval_dataloader,

- log_dir=args.log_dir,

- use_wandb=args.use_wandb,

- )

-

- if args.lora_rank > 0 and args.merge_lora_weights:

- from coati.models.lora import LORA_MANAGER

-

- # NOTE: set model to eval to merge LoRA weights

- LORA_MANAGER.merge_weights = True

- model.eval()

- # save model checkpoint after fitting on only rank0

- state_dict = model.state_dict()

- torch.save(state_dict, args.save_path)

- # save optimizer checkpoint on all ranks

- if args.need_optim_ckpt:

- strategy.save_optimizer(

- trainer.optimizer, "rm_optim_checkpoint_%d.pt" % (torch.cuda.current_device()), only_rank0=False

- )

-

-

-if __name__ == "__main__":

- parser = argparse.ArgumentParser()

- parser.add_argument(

- "--strategy", choices=["ddp", "colossalai_gemini", "colossalai_zero2"], default="colossalai_zero2"

- )

- parser.add_argument("--model", choices=["gpt2", "bloom", "opt", "llama"], default="bloom")

- parser.add_argument("--tokenizer", type=str, default=None)

- parser.add_argument("--pretrain", type=str, default=None)

- parser.add_argument("--model_path", type=str, default=None)

- parser.add_argument("--need_optim_ckpt", type=bool, default=False)

- parser.add_argument(

- "--dataset", type=str, choices=["Anthropic/hh-rlhf", "Dahoas/rm-static"], default="Dahoas/rm-static"

- )

- parser.add_argument("--subset", type=lambda x: None if x == "None" else x, default=None)

- parser.add_argument("--max_datasets_size", type=int, default=1000000)

- parser.add_argument("--save_path", type=str, default="rm_ckpt")

- parser.add_argument("--max_epochs", type=int, default=1)

- parser.add_argument("--batch_size", type=int, default=1)

- parser.add_argument("--max_len", type=int, default=512)

- parser.add_argument("--lora_rank", type=int, default=0, help="low-rank adaptation matrices rank")

- parser.add_argument("--merge_lora_weights", type=bool, default=True)

- parser.add_argument("--lr", type=float, default=9e-6)

- parser.add_argument("--loss_fn", type=str, default="log_sig", choices=["log_sig", "log_exp"])

- parser.add_argument("--log_dir", default="logs", type=str)

- parser.add_argument("--use_wandb", default=False, action="store_true")

- args = parser.parse_args()

- train(args)

diff --git a/applications/Chat/examples/train_rm.sh b/applications/Chat/examples/train_rm.sh

deleted file mode 100755

index c5ebaf708ddc..000000000000

--- a/applications/Chat/examples/train_rm.sh

+++ /dev/null

@@ -1,25 +0,0 @@

-set_n_least_used_CUDA_VISIBLE_DEVICES() {

- local n=${1:-"9999"}

- echo "GPU Memory Usage:"

- local FIRST_N_GPU_IDS=$(nvidia-smi --query-gpu=memory.used --format=csv |

- tail -n +2 |

- nl -v 0 |

- tee /dev/tty |

- sort -g -k 2 |

- awk '{print $1}' |

- head -n $n)

- export CUDA_VISIBLE_DEVICES=$(echo $FIRST_N_GPU_IDS | sed 's/ /,/g')

- echo "Now CUDA_VISIBLE_DEVICES is set to:"

- echo "CUDA_VISIBLE_DEVICES=$CUDA_VISIBLE_DEVICES"

-}

-

-set_n_least_used_CUDA_VISIBLE_DEVICES 2

-

-torchrun --standalone --nproc_per_node=2 train_reward_model.py \

- --pretrain 'gpt2' \

- --model 'gpt2' \

- --strategy colossalai_zero2 \

- --loss_fn 'log_exp' \

- --dataset 'Anthropic/hh-rlhf' \

- --batch_size 16 \

- --max_epochs 10

diff --git a/applications/Chat/examples/train_sft.py b/applications/Chat/examples/train_sft.py

deleted file mode 100644

index 66d08da30120..000000000000

--- a/applications/Chat/examples/train_sft.py

+++ /dev/null

@@ -1,221 +0,0 @@

-import argparse

-import math

-import warnings

-

-import torch

-import torch.distributed as dist

-from coati.dataset import SFTDataset, SupervisedDataset

-from coati.models.bloom import BLOOMActor

-from coati.models.chatglm import ChatGLMActor

-from coati.models.chatglm.chatglm_tokenizer import ChatGLMTokenizer

-from coati.models.gpt import GPTActor

-from coati.models.llama import LlamaActor

-from coati.models.opt import OPTActor

-from coati.trainer import SFTTrainer

-from coati.trainer.strategies import DDPStrategy, GeminiStrategy, LowLevelZeroStrategy

-from datasets import load_dataset

-from torch.optim import Adam

-from torch.utils.data import DataLoader

-from torch.utils.data.distributed import DistributedSampler

-from transformers import AutoTokenizer, BloomTokenizerFast, LlamaTokenizer

-from transformers.models.gpt2.tokenization_gpt2 import GPT2Tokenizer

-from transformers.trainer import get_scheduler

-

-from colossalai.logging import get_dist_logger

-from colossalai.nn.optimizer import HybridAdam

-

-

-def train(args):

- # configure strategy

- if args.strategy == "ddp":

- strategy = DDPStrategy()

- elif args.strategy == "colossalai_gemini":

- strategy = GeminiStrategy(placement_policy="auto")

- elif args.strategy == "colossalai_zero2":

- strategy = LowLevelZeroStrategy(stage=2, placement_policy="cuda")

- elif args.strategy == "colossalai_zero2_cpu":

- strategy = LowLevelZeroStrategy(stage=2, placement_policy="cpu")

- else:

- raise ValueError(f'Unsupported strategy "{args.strategy}"')

-

- # configure model

- if args.lora_rank > 0:

- warnings.warn("Lora is not supported yet.")

- args.lora_rank = 0

-

- with strategy.model_init_context():

- if args.model == "bloom":

- model = BLOOMActor(pretrained=args.pretrain, lora_rank=args.lora_rank, checkpoint=args.grad_checkpoint)

- elif args.model == "opt":

- model = OPTActor(pretrained=args.pretrain, lora_rank=args.lora_rank, checkpoint=args.grad_checkpoint)

- elif args.model == "gpt2":

- model = GPTActor(pretrained=args.pretrain, lora_rank=args.lora_rank, checkpoint=args.grad_checkpoint)

- elif args.model == "llama":

- model = LlamaActor(pretrained=args.pretrain, lora_rank=args.lora_rank, checkpoint=args.grad_checkpoint)

- elif args.model == "chatglm":

- model = ChatGLMActor(pretrained=args.pretrain)

- else:

- raise ValueError(f'Unsupported model "{args.model}"')

-

- model.to(torch.bfloat16).to(torch.cuda.current_device())

-

- # configure tokenizer

- if args.model == "gpt2":

- tokenizer = GPT2Tokenizer.from_pretrained("gpt2" if args.tokenizer is None else args.tokenizer)

- tokenizer.pad_token = tokenizer.eos_token

- elif args.model == "bloom":

- tokenizer = BloomTokenizerFast.from_pretrained(

- "bigscience/bloom-560m" if args.tokenizer is None else args.tokenizer

- )

- tokenizer.pad_token = tokenizer.eos_token

- elif args.model == "opt":

- tokenizer = AutoTokenizer.from_pretrained("facebook/opt-350m" if args.tokenizer is None else args.tokenizer)

- tokenizer.pad_token = tokenizer.eos_token

- elif args.model == "llama":

- tokenizer = LlamaTokenizer.from_pretrained(

- "hf-internal-testing/llama-tokenizer" if args.tokenizer is None else args.tokenizer

- )

- tokenizer.eos_token = "<\s>"

- tokenizer.pad_token = tokenizer.unk_token

- elif args.model == "chatglm":

- tokenizer = ChatGLMTokenizer.from_pretrained(

- "THUDM/chatglm-6b" if args.tokenizer is None else args.tokenizer, trust_remote_code=True

- )

- else:

- raise ValueError(f'Unsupported model "{args.model}"')

-

- # configure optimizer

- if args.strategy.startswith("colossalai"):

- optim = HybridAdam(model.parameters(), lr=args.lr, clipping_norm=1.0)

- else:

- optim = Adam(model.parameters(), lr=args.lr)

-

- # configure dataset

- if args.dataset == "yizhongw/self_instruct":

- train_data = load_dataset(args.dataset, "super_natural_instructions", split="train")

- eval_data = load_dataset(args.dataset, "super_natural_instructions", split="test")

-

- if args.max_datasets_size is not None:

- train_data = train_data.select(range(min(args.max_datasets_size, len(train_data))))

- eval_data = eval_data.select(range(min(args.max_datasets_size, len(eval_data))))

-

- train_dataset = SFTDataset(train_data, tokenizer, args.max_len)

- eval_dataset = SFTDataset(eval_data, tokenizer, args.max_len)

-

- else:

- train_dataset = SupervisedDataset(

- tokenizer=tokenizer,

- data_path=args.dataset,

- max_datasets_size=args.max_datasets_size,

- max_length=args.max_len,

- )

- eval_dataset = None

-

- if dist.is_initialized() and dist.get_world_size() > 1:

- train_sampler = DistributedSampler(

- train_dataset,

- shuffle=True,

- seed=42,

- drop_last=True,

- rank=dist.get_rank(),

- num_replicas=dist.get_world_size(),

- )

- if eval_dataset is not None:

- eval_sampler = DistributedSampler(

- eval_dataset,

- shuffle=False,

- seed=42,

- drop_last=False,

- rank=dist.get_rank(),

- num_replicas=dist.get_world_size(),

- )

- else:

- train_sampler = None

- eval_sampler = None

-

- train_dataloader = DataLoader(

- train_dataset,

- shuffle=(train_sampler is None),

- sampler=train_sampler,

- batch_size=args.batch_size,

- pin_memory=True,

- )

- if eval_dataset is not None:

- eval_dataloader = DataLoader(

- eval_dataset,

- shuffle=(eval_sampler is None),

- sampler=eval_sampler,

- batch_size=args.batch_size,

- pin_memory=True,

- )

- else:

- eval_dataloader = None

-

- num_update_steps_per_epoch = len(train_dataloader) // args.accumulation_steps

- max_steps = math.ceil(args.max_epochs * num_update_steps_per_epoch)

- lr_scheduler = get_scheduler(

- "cosine", optim, num_warmup_steps=math.ceil(max_steps * 0.03), num_training_steps=max_steps

- )

- strategy_dict = strategy.prepare(dict(model=model, optimizer=optim, lr_scheduler=lr_scheduler))

- model = strategy_dict["model"]

- optim = strategy_dict["optimizer"]

- lr_scheduler = strategy_dict["lr_scheduler"]

- trainer = SFTTrainer(

- model=model,

- strategy=strategy,

- optim=optim,

- lr_scheduler=lr_scheduler,

- max_epochs=args.max_epochs,

- accumulation_steps=args.accumulation_steps,

- )

-

- logger = get_dist_logger()

- trainer.fit(

- train_dataloader=train_dataloader,

- eval_dataloader=eval_dataloader,

- logger=logger,

- log_dir=args.log_dir,

- use_wandb=args.use_wandb,

- )

-

- if args.lora_rank > 0 and args.merge_lora_weights:

- from coati.models.lora import LORA_MANAGER

-

- # NOTE: set model to eval to merge LoRA weights

- LORA_MANAGER.merge_weights = True

- model.eval()

- # save model checkpoint after fitting on only rank0

- strategy.save_pretrained(model, path=args.save_path, tokenizer=tokenizer)

- # save optimizer checkpoint on all ranks

- if args.need_optim_ckpt:

- strategy.save_optimizer(

- trainer.optimizer, "rm_optim_checkpoint_%d.pt" % (torch.cuda.current_device()), only_rank0=False

- )

-

-

-if __name__ == "__main__":

- parser = argparse.ArgumentParser()

- parser.add_argument(

- "--strategy",

- choices=["ddp", "colossalai_gemini", "colossalai_zero2", "colossalai_zero2_cpu"],

- default="colossalai_zero2",

- )

- parser.add_argument("--model", choices=["gpt2", "bloom", "opt", "llama", "chatglm"], default="bloom")

- parser.add_argument("--tokenizer", type=str, default=None)

- parser.add_argument("--pretrain", type=str, default=None)

- parser.add_argument("--dataset", type=str, default=None)

- parser.add_argument("--max_datasets_size", type=int, default=None)

- parser.add_argument("--save_path", type=str, default="output")

- parser.add_argument("--need_optim_ckpt", type=bool, default=False)

- parser.add_argument("--max_epochs", type=int, default=3)

- parser.add_argument("--batch_size", type=int, default=4)

- parser.add_argument("--max_len", type=int, default=512)

- parser.add_argument("--lora_rank", type=int, default=0, help="low-rank adaptation matrices rank")

- parser.add_argument("--merge_lora_weights", type=bool, default=True)

- parser.add_argument("--lr", type=float, default=5e-6)

- parser.add_argument("--accumulation_steps", type=int, default=8)

- parser.add_argument("--log_dir", default="logs", type=str)

- parser.add_argument("--use_wandb", default=False, action="store_true")

- parser.add_argument("--grad_checkpoint", default=False, action="store_true")

- args = parser.parse_args()

- train(args)

diff --git a/applications/Chat/examples/train_sft.sh b/applications/Chat/examples/train_sft.sh

deleted file mode 100755

index 0fb4da3d3ce8..000000000000

--- a/applications/Chat/examples/train_sft.sh

+++ /dev/null

@@ -1,28 +0,0 @@

-set_n_least_used_CUDA_VISIBLE_DEVICES() {

- local n=${1:-"9999"}

- echo "GPU Memory Usage:"

- local FIRST_N_GPU_IDS=$(nvidia-smi --query-gpu=memory.used --format=csv |

- tail -n +2 |

- nl -v 0 |

- tee /dev/tty |

- sort -g -k 2 |

- awk '{print $1}' |

- head -n $n)

- export CUDA_VISIBLE_DEVICES=$(echo $FIRST_N_GPU_IDS | sed 's/ /,/g')

- echo "Now CUDA_VISIBLE_DEVICES is set to:"

- echo "CUDA_VISIBLE_DEVICES=$CUDA_VISIBLE_DEVICES"

-}

-

-set_n_least_used_CUDA_VISIBLE_DEVICES 4

-

-torchrun --standalone --nproc_per_node=4 train_sft.py \

- --pretrain "/path/to/LLaMa-7B/" \

- --model 'llama' \

- --strategy colossalai_zero2 \

- --save_path /path/to/Coati-7B \

- --dataset /path/to/data.json \

- --batch_size 4 \

- --accumulation_steps 8 \

- --lr 2e-5 \

- --max_datasets_size 512 \

- --max_epochs 1

diff --git a/applications/Chat/inference/benchmark.py b/applications/Chat/inference/benchmark.py

deleted file mode 100644

index dbb5490a63dc..000000000000

--- a/applications/Chat/inference/benchmark.py

+++ /dev/null

@@ -1,141 +0,0 @@

-# Adapted from https://github.com/tloen/alpaca-lora/blob/main/generate.py

-

-import argparse

-from time import time

-

-import torch

-from coati.quant import llama_load_quant, low_resource_init

-from transformers import AutoTokenizer, GenerationConfig, LlamaConfig, LlamaForCausalLM

-

-

-def generate_prompt(instruction, input=None):

- if input:

- return f"""Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

-

-### Instruction:

-{instruction}

-

-### Input:

-{input}

-

-### Response:"""

- else:

- return f"""Below is an instruction that describes a task. Write a response that appropriately completes the request.

-

-### Instruction:

-{instruction}

-

-### Response:"""

-

-

-@torch.no_grad()

-def evaluate(

- model,

- tokenizer,

- instruction,

- input=None,

- temperature=0.1,

- top_p=0.75,

- top_k=40,

- num_beams=4,

- max_new_tokens=128,

- **kwargs,

-):

- prompt = generate_prompt(instruction, input)

- inputs = tokenizer(prompt, return_tensors="pt")

- input_ids = inputs["input_ids"].cuda()

- generation_config = GenerationConfig(

- temperature=temperature,

- top_p=top_p,

- top_k=top_k,

- num_beams=num_beams,

- **kwargs,

- )

- generation_output = model.generate(

- input_ids=input_ids,

- generation_config=generation_config,

- return_dict_in_generate=True,

- output_scores=True,

- max_new_tokens=max_new_tokens,

- do_sample=True,

- )

- s = generation_output.sequences[0]

- output = tokenizer.decode(s)

- n_new_tokens = s.size(0) - input_ids.size(1)

- return output.split("### Response:")[1].strip(), n_new_tokens

-

-

-instructions = [

- "Tell me about alpacas.",

- "Tell me about the president of Mexico in 2019.",

- "Tell me about the king of France in 2019.",

- "List all Canadian provinces in alphabetical order.",

- "Write a Python program that prints the first 10 Fibonacci numbers.",

- "Write a program that prints the numbers from 1 to 100. But for multiples of three print 'Fizz' instead of the number and for the multiples of five print 'Buzz'. For numbers which are multiples of both three and five print 'FizzBuzz'.",

- "Tell me five words that rhyme with 'shock'.",

- "Translate the sentence 'I have no mouth but I must scream' into Spanish.",

- "Count up from 1 to 500.",

- # ===

- "How to play support in legends of league",

- "Write a Python program that calculate Fibonacci numbers.",

-]

-inst = [instructions[0]] * 4

-

-if __name__ == "__main__":

- parser = argparse.ArgumentParser()

- parser.add_argument(

- "pretrained",

- help="Path to pretrained model. Can be a local path or a model name from the HuggingFace model hub.",

- )

- parser.add_argument(

- "--quant",

- choices=["8bit", "4bit"],

- default=None,

- help="Quantization mode. Default: None (no quantization, fp16).",

- )

- parser.add_argument(

- "--gptq_checkpoint",

- default=None,

- help="Path to GPTQ checkpoint. This is only useful when quantization mode is 4bit. Default: None.",

- )

- parser.add_argument(

- "--gptq_group_size",

- type=int,

- default=128,

- help="Group size for GPTQ. This is only useful when quantization mode is 4bit. Default: 128.",

- )

- args = parser.parse_args()

-

- if args.quant == "4bit":

- assert args.gptq_checkpoint is not None, "Please specify a GPTQ checkpoint."

-

- tokenizer = AutoTokenizer.from_pretrained(args.pretrained)

-

- if args.quant == "4bit":

- with low_resource_init():

- config = LlamaConfig.from_pretrained(args.pretrained)

- model = LlamaForCausalLM(config)

- model = llama_load_quant(model, args.gptq_checkpoint, 4, args.gptq_group_size)

- model.cuda()

- else:

- model = LlamaForCausalLM.from_pretrained(

- args.pretrained,

- load_in_8bit=(args.quant == "8bit"),

- torch_dtype=torch.float16,

- device_map="auto",

- )

- if args.quant != "8bit":

- model.half() # seems to fix bugs for some users.

- model.eval()

-

- total_tokens = 0

- start = time()

- for instruction in instructions:

- print(f"Instruction: {instruction}")

- resp, tokens = evaluate(model, tokenizer, instruction, temperature=0.2, num_beams=1)

- total_tokens += tokens

- print(f"Response: {resp}")

- print("\n----------------------------\n")

- duration = time() - start

- print(f"Total time: {duration:.3f} s, {total_tokens/duration:.3f} tokens/s")

- print(f"Peak CUDA mem: {torch.cuda.max_memory_allocated()/1024**3:.3f} GB")

diff --git a/applications/Chat/inference/tests/test_chat_prompt.py b/applications/Chat/inference/tests/test_chat_prompt.py

deleted file mode 100644

index 9835e71894c6..000000000000

--- a/applications/Chat/inference/tests/test_chat_prompt.py

+++ /dev/null

@@ -1,61 +0,0 @@

-import os

-

-from transformers import AutoTokenizer

-from utils import ChatPromptProcessor, Dialogue

-

-CONTEXT = "Below is an instruction that describes a task. Write a response that appropriately completes the request. Do not generate new instructions."

-tokenizer = AutoTokenizer.from_pretrained(os.environ["PRETRAINED_PATH"])

-

-samples = [

- (

- [

- Dialogue(

- instruction="Who is the best player in the history of NBA?",

- response="The best player in the history of the NBA is widely considered to be Michael Jordan. He is one of the most successful players in the league, having won 6 NBA championships with the Chicago Bulls and 5 more with the Washington Wizards. He is a 5-time MVP, 1",

- ),

- Dialogue(instruction="continue this talk", response=""),

- ],

- 128,

- "Below is an instruction that describes a task. Write a response that appropriately completes the request. Do not generate new instructions.\n\n### Instruction:\nWho is the best player in the history of NBA?\n\n### Response:\nThe best player in the history of the NBA is widely considered to be Michael Jordan. He is one of the most successful players in the league, having won 6 NBA championships with the Chicago Bulls and 5 more with the Washington Wizards. He is a 5-time MVP, 1\n\n### Instruction:\ncontinue this talk\n\n### Response:\n",

- ),

- (

- [

- Dialogue(

- instruction="Who is the best player in the history of NBA?",

- response="The best player in the history of the NBA is widely considered to be Michael Jordan. He is one of the most successful players in the league, having won 6 NBA championships with the Chicago Bulls and 5 more with the Washington Wizards. He is a 5-time MVP, 1",

- ),

- Dialogue(instruction="continue this talk", response=""),

- ],

- 200,

- "Below is an instruction that describes a task. Write a response that appropriately completes the request. Do not generate new instructions.\n\n### Instruction:\ncontinue this talk\n\n### Response:\n",

- ),

- (

- [

- Dialogue(

- instruction="Who is the best player in the history of NBA?",

- response="The best player in the history of the NBA is widely considered to be Michael Jordan. He is one of the most successful players in the league, having won 6 NBA championships with the Chicago Bulls and 5 more with the Washington Wizards. He is a 5-time MVP, 1",

- ),

- Dialogue(instruction="continue this talk", response=""),

- ],

- 211,

- "Below is an instruction that describes a task. Write a response that appropriately completes the request. Do not generate new instructions.\n\n### Instruction:\ncontinue this\n\n### Response:\n",

- ),

- (

- [

- Dialogue(instruction="Who is the best player in the history of NBA?", response=""),

- ],

- 128,

- "Below is an instruction that describes a task. Write a response that appropriately completes the request. Do not generate new instructions.\n\n### Instruction:\nWho is the best player in the history of NBA?\n\n### Response:\n",

- ),

-]

-

-

-def test_chat_prompt_processor():

- processor = ChatPromptProcessor(tokenizer, CONTEXT, 256)

- for history, max_new_tokens, result in samples:

- prompt = processor.preprocess_prompt(history, max_new_tokens)

- assert prompt == result

-

-

-if __name__ == "__main__":

- test_chat_prompt_processor()

diff --git a/applications/Chat/inference/utils.py b/applications/Chat/inference/utils.py

deleted file mode 100644

index af018adf6e9d..000000000000

--- a/applications/Chat/inference/utils.py

+++ /dev/null

@@ -1,209 +0,0 @@

-import json

-import re

-from threading import Lock

-from typing import Any, Callable, Generator, List, Optional

-

-import jieba

-import torch

-import torch.distributed as dist

-import torch.nn as nn

-from pydantic import BaseModel, Field

-

-try:

- from transformers.generation_logits_process import (

- LogitsProcessorList,

- TemperatureLogitsWarper,

- TopKLogitsWarper,

- TopPLogitsWarper,

- )

-except ImportError:

- from transformers.generation import LogitsProcessorList, TemperatureLogitsWarper, TopKLogitsWarper, TopPLogitsWarper

-

-

-def prepare_logits_processor(

- top_k: Optional[int] = None, top_p: Optional[float] = None, temperature: Optional[float] = None

-) -> LogitsProcessorList:

- processor_list = LogitsProcessorList()

- if temperature is not None and temperature != 1.0:

- processor_list.append(TemperatureLogitsWarper(temperature))

- if top_k is not None and top_k != 0:

- processor_list.append(TopKLogitsWarper(top_k))

- if top_p is not None and top_p < 1.0:

- processor_list.append(TopPLogitsWarper(top_p))

- return processor_list

-

-

-def _is_sequence_finished(unfinished_sequences: torch.Tensor) -> bool:

- if dist.is_initialized() and dist.get_world_size() > 1:

- # consider DP

- unfinished_sequences = unfinished_sequences.clone()

- dist.all_reduce(unfinished_sequences)

- return unfinished_sequences.max() == 0

-

-

-def sample_streamingly(

- model: nn.Module,

- input_ids: torch.Tensor,

- max_generate_tokens: int,

- early_stopping: bool = False,

- eos_token_id: Optional[int] = None,

- pad_token_id: Optional[int] = None,

- top_k: Optional[int] = None,

- top_p: Optional[float] = None,

- temperature: Optional[float] = None,

- prepare_inputs_fn: Optional[Callable[[torch.Tensor, Any], dict]] = None,

- update_model_kwargs_fn: Optional[Callable[[dict, Any], dict]] = None,

- **model_kwargs,

-) -> Generator:

- logits_processor = prepare_logits_processor(top_k, top_p, temperature)

- unfinished_sequences = input_ids.new(input_ids.shape[0]).fill_(1)

-

- for _ in range(max_generate_tokens):

- model_inputs = (

- prepare_inputs_fn(input_ids, **model_kwargs) if prepare_inputs_fn is not None else {"input_ids": input_ids}

- )

- outputs = model(**model_inputs)

-

- next_token_logits = outputs["logits"][:, -1, :]

- # pre-process distribution

- next_token_logits = logits_processor(input_ids, next_token_logits)

- # sample

- probs = torch.softmax(next_token_logits, dim=-1, dtype=torch.float)

- next_tokens = torch.multinomial(probs, num_samples=1).squeeze(1)

-

- # finished sentences should have their next token be a padding token

- if eos_token_id is not None:

- if pad_token_id is None:

- raise ValueError("If `eos_token_id` is defined, make sure that `pad_token_id` is defined.")

- next_tokens = next_tokens * unfinished_sequences + pad_token_id * (1 - unfinished_sequences)

-

- yield next_tokens

-

- # update generated ids, model inputs for next step

- input_ids = torch.cat([input_ids, next_tokens[:, None]], dim=-1)

- if update_model_kwargs_fn is not None:

- model_kwargs = update_model_kwargs_fn(outputs, **model_kwargs)

-

- # if eos_token was found in one sentence, set sentence to finished

- if eos_token_id is not None:

- unfinished_sequences = unfinished_sequences.mul((next_tokens != eos_token_id).long())

-

- # stop when each sentence is finished if early_stopping=True

- if early_stopping and _is_sequence_finished(unfinished_sequences):

- break

-

-

-def update_model_kwargs_fn(outputs: dict, **model_kwargs) -> dict:

- if "past_key_values" in outputs:

- model_kwargs["past"] = outputs["past_key_values"]

- else:

- model_kwargs["past"] = None

-

- # update token_type_ids with last value

- if "token_type_ids" in model_kwargs:

- token_type_ids = model_kwargs["token_type_ids"]

- model_kwargs["token_type_ids"] = torch.cat([token_type_ids, token_type_ids[:, -1].unsqueeze(-1)], dim=-1)

-

- # update attention mask

- if "attention_mask" in model_kwargs:

- attention_mask = model_kwargs["attention_mask"]

- model_kwargs["attention_mask"] = torch.cat(

- [attention_mask, attention_mask.new_ones((attention_mask.shape[0], 1))], dim=-1

- )

-

- return model_kwargs

-

-

-class Dialogue(BaseModel):

- instruction: str = Field(min_length=1, example="Count up from 1 to 500.")

- response: str = Field(example="")

-

-

-def _format_dialogue(instruction: str, response: str = ""):

- return f"\n\n### Instruction:\n{instruction}\n\n### Response:\n{response}"

-

-

-STOP_PAT = re.compile(r"(###|instruction:).*", flags=(re.I | re.S))

-

-

-class ChatPromptProcessor:

- SAFE_RESPONSE = "The input/response contains inappropriate content, please rephrase your prompt."

-

- def __init__(self, tokenizer, context: str, max_len: int = 2048, censored_words: List[str] = []):

- self.tokenizer = tokenizer

- self.context = context

- self.max_len = max_len

- self.censored_words = set([word.lower() for word in censored_words])

- # These will be initialized after the first call of preprocess_prompt()

- self.context_len: Optional[int] = None

- self.dialogue_placeholder_len: Optional[int] = None

-

- def preprocess_prompt(self, history: List[Dialogue], max_new_tokens: int) -> str:

- if self.context_len is None:

- self.context_len = len(self.tokenizer(self.context)["input_ids"])

- if self.dialogue_placeholder_len is None:

- self.dialogue_placeholder_len = len(

- self.tokenizer(_format_dialogue(""), add_special_tokens=False)["input_ids"]

- )

- prompt = self.context

- # the last dialogue must be in the prompt

- last_dialogue = history.pop()

- # the response of the last dialogue is empty

- assert last_dialogue.response == ""

- if (

- len(self.tokenizer(_format_dialogue(last_dialogue.instruction), add_special_tokens=False)["input_ids"])

- + max_new_tokens

- + self.context_len

- >= self.max_len

- ):

- # to avoid truncate placeholder, apply truncate to the original instruction

- instruction_truncated = self.tokenizer(

- last_dialogue.instruction,

- add_special_tokens=False,

- truncation=True,

- max_length=(self.max_len - max_new_tokens - self.context_len - self.dialogue_placeholder_len),

- )["input_ids"]

- instruction_truncated = self.tokenizer.decode(instruction_truncated).lstrip()

- prompt += _format_dialogue(instruction_truncated)

- return prompt

-

- res_len = self.max_len - max_new_tokens - len(self.tokenizer(prompt)["input_ids"])

-

- rows = []

- for dialogue in history[::-1]:

- text = _format_dialogue(dialogue.instruction, dialogue.response)

- cur_len = len(self.tokenizer(text, add_special_tokens=False)["input_ids"])

- if res_len - cur_len < 0:

- break

- res_len -= cur_len

- rows.insert(0, text)

- prompt += "".join(rows) + _format_dialogue(last_dialogue.instruction)

- return prompt

-

- def postprocess_output(self, output: str) -> str:

- output = STOP_PAT.sub("", output)

- return output.strip()

-

- def has_censored_words(self, text: str) -> bool:

- if len(self.censored_words) == 0:

- return False

- intersection = set(jieba.cut(text.lower())) & self.censored_words

- return len(intersection) > 0

-

-

-class LockedIterator:

- def __init__(self, it, lock: Lock) -> None:

- self.lock = lock

- self.it = iter(it)

-

- def __iter__(self):

- return self

-

- def __next__(self):

- with self.lock:

- return next(self.it)

-

-

-def load_json(path: str):

- with open(path) as f:

- return json.load(f)

diff --git a/applications/Chat/requirements-test.txt b/applications/Chat/requirements-test.txt

deleted file mode 100644

index 93d48bcb6f79..000000000000

--- a/applications/Chat/requirements-test.txt

+++ /dev/null

@@ -1,2 +0,0 @@

-pytest

-colossalai==0.3.3

diff --git a/applications/Chat/requirements.txt b/applications/Chat/requirements.txt

deleted file mode 100644

index e56aaca0e7cb..000000000000

--- a/applications/Chat/requirements.txt

+++ /dev/null

@@ -1,14 +0,0 @@

-transformers>=4.20.1

-tqdm

-datasets

-loralib

-colossalai==0.3.3

-torch<2.0.0, >=1.12.1

-langchain

-tokenizers

-fastapi

-sse_starlette

-wandb

-sentencepiece

-gpustat

-tensorboard

diff --git a/applications/Chat/tests/test_benchmarks.sh b/applications/Chat/tests/test_benchmarks.sh

deleted file mode 100755

index 3fdb25181342..000000000000

--- a/applications/Chat/tests/test_benchmarks.sh

+++ /dev/null

@@ -1,33 +0,0 @@

-#!/bin/bash

-

-set -xue

-

-echo "Hint: You can run this script with 'verbose' as the first argument to run all strategies."

-

-if [[ $# -ne 0 && "$1" == "verbose" ]]; then

- STRATEGIES=(

- 'ddp'

- 'colossalai_gemini'

- 'colossalai_gemini_cpu'

- 'colossalai_zero2'

- 'colossalai_zero2_cpu'

- 'colossalai_zero1'

- 'colossalai_zero1_cpu'

- )

-else

- STRATEGIES=(

- 'colossalai_zero2'

- )

-fi

-

-BASE_DIR=$(dirname $(dirname $(realpath $BASH_SOURCE)))

-BENCHMARKS_DIR=$BASE_DIR/benchmarks

-

-echo "[Test]: testing benchmarks ..."

-

-for strategy in ${STRATEGIES[@]}; do

- torchrun --standalone --nproc_per_node 1 $BENCHMARKS_DIR/benchmark_opt_lora_dummy.py \

- --model 125m --critic_model 125m --strategy ${strategy} --lora_rank 4 \

- --num_episodes 2 --num_collect_steps 4 --num_update_steps 2 \

- --train_batch_size 2 --experience_batch_size 4

-done

diff --git a/applications/Chat/tests/test_checkpoint.py b/applications/Chat/tests/test_checkpoint.py

deleted file mode 100644

index 9c08aa36c9b4..000000000000

--- a/applications/Chat/tests/test_checkpoint.py

+++ /dev/null

@@ -1,91 +0,0 @@

-import os

-import tempfile

-from contextlib import nullcontext

-

-import pytest

-import torch

-import torch.distributed as dist

-from coati.models.gpt import GPTActor

-from coati.models.utils import calc_action_log_probs

-from coati.trainer.strategies import DDPStrategy, GeminiStrategy, LowLevelZeroStrategy, Strategy

-from transformers.models.gpt2.configuration_gpt2 import GPT2Config

-

-from colossalai.nn.optimizer import HybridAdam

-from colossalai.testing import rerun_if_address_is_in_use, spawn

-

-GPT_CONFIG = GPT2Config(n_embd=128, n_layer=4, n_head=4)

-

-

-def get_data(batch_size: int, seq_len: int = 10) -> dict:

- input_ids = torch.randint(0, 50257, (batch_size, seq_len), device="cuda")

- attention_mask = torch.ones_like(input_ids)

- return dict(input_ids=input_ids, attention_mask=attention_mask)

-

-

-def train_step(strategy: Strategy, actor: GPTActor, actor_optim: HybridAdam, batch_size: int = 8):

- data = get_data(batch_size)

- action_mask = torch.ones_like(data["attention_mask"], dtype=torch.bool)

- actor_logits = actor(data["input_ids"], data["attention_mask"])["logits"]

- action_log_probs = calc_action_log_probs(actor_logits, data["input_ids"], action_mask.size(1))

- loss = action_log_probs.sum()

- strategy.backward(loss, actor, actor_optim)

- strategy.optimizer_step(actor_optim)

-

-

-def run_test_checkpoint(strategy_name: str, shard: bool):

- if strategy_name == "ddp":

- strategy = DDPStrategy()

- elif strategy_name == "colossalai_gemini":

- strategy = GeminiStrategy(placement_policy="auto", initial_scale=2**5)

- elif strategy_name == "colossalai_zero2":

- strategy = LowLevelZeroStrategy(stage=2, placement_policy="cuda")

- else:

- raise ValueError(f"Unsupported strategy '{strategy_name}'")

-

- with strategy.model_init_context():

- actor = GPTActor(config=GPT_CONFIG).cuda()

- actor_optim = HybridAdam(actor.parameters())

- actor, actor_optim = strategy.prepare((actor, actor_optim))

-

- train_step(strategy, actor, actor_optim)

-

- ctx = tempfile.TemporaryDirectory() if dist.get_rank() == 0 else nullcontext()

-

- with ctx as dirname: