This repository was archived by the owner on Jun 4, 2025. It is now read-only.

Override LRScheduler when using LRModifiers #11

Merged


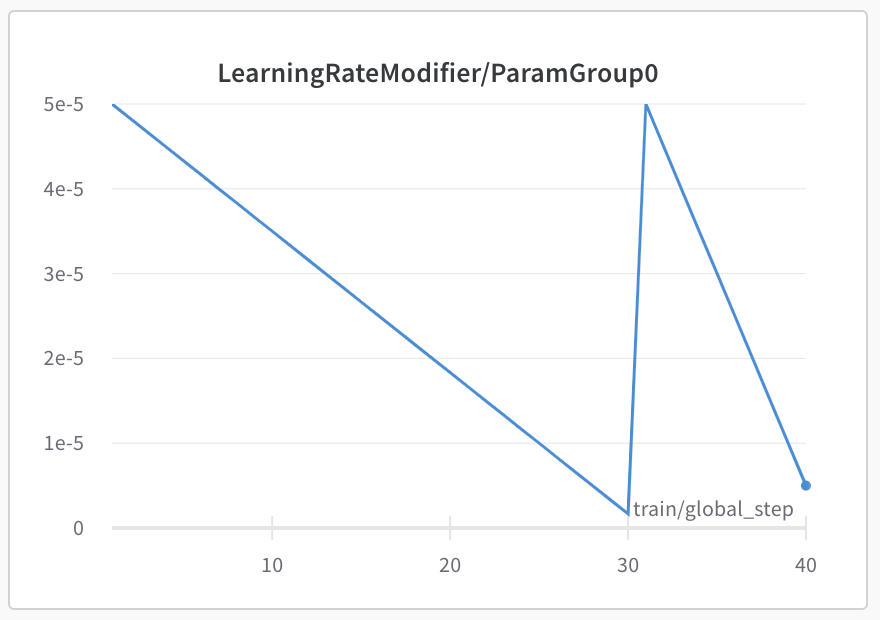

To run pruning and QAT phases in the same recipe, it is useful to control the learning rate (LR) schedule from within the recipe. Currently, the HF LR scheduler is stepped after the SparseML step, which overrides any LR set by LR modifiers. This PR detects whether a Trainer's recipe contains any LR modifiers and, if so, replaces the HF LR scheduler with a dummy one.
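A minimal, self-contained sketch of the idea follows. It assumes PyTorch-style `param_groups` and a training loop that applies the recipe's LR before stepping the HF scheduler. `Modifier`, `is_lr_modifier`, `DecayScheduler`, `DummyScheduler`, `create_scheduler`, and `train` are hypothetical stand-ins, not the SparseML or Transformers APIs. A real PyTorch version could instead subclass the scheduler base class and have `get_lr()` return the optimizer's current LRs.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence


@dataclass
class Modifier:
    """Hypothetical stand-in for a recipe modifier (not SparseML's class)."""
    name: str
    is_lr_modifier: bool = False


class Optimizer:
    """Minimal stand-in exposing PyTorch-style ``param_groups``."""

    def __init__(self, lr: float) -> None:
        self.param_groups: List[Dict[str, float]] = [{"lr": lr}]


class DecayScheduler:
    """Stand-in for a default HF-style scheduler that writes its own LR each step."""

    def __init__(self, optimizer: Optimizer, base_lr: float, gamma: float = 0.5) -> None:
        self.optimizer, self.base_lr, self.gamma, self.steps = optimizer, base_lr, gamma, 0

    def step(self) -> None:
        self.steps += 1
        for group in self.optimizer.param_groups:
            group["lr"] = self.base_lr * self.gamma ** self.steps


class DummyScheduler:
    """Dummy scheduler: step() leaves whatever LR the recipe set untouched."""

    def __init__(self, optimizer: Optimizer) -> None:
        self.optimizer = optimizer

    def step(self) -> None:
        pass

    def get_last_lr(self) -> List[float]:
        return [group["lr"] for group in self.optimizer.param_groups]


def recipe_has_lr_modifiers(modifiers: Sequence[Modifier]) -> bool:
    return any(m.is_lr_modifier for m in modifiers)


def create_scheduler(
    modifiers: Sequence[Modifier],
    optimizer: Optimizer,
    default_factory: Callable[[Optimizer], object],
) -> object:
    """Swap in the dummy scheduler whenever the recipe controls the LR."""
    if recipe_has_lr_modifiers(modifiers):
        return DummyScheduler(optimizer)
    return default_factory(optimizer)


def train(
    modifiers: Sequence[Modifier],
    recipe_lrs: Sequence[float],
    scheduler_factory: Callable[[Sequence[Modifier], Optimizer], object],
) -> List[float]:
    """Simulate the step order: recipe (SparseML) step first, then scheduler.step()."""
    optimizer = Optimizer(lr=0.1)
    scheduler = scheduler_factory(modifiers, optimizer)
    history = []
    for lr in recipe_lrs:
        if recipe_has_lr_modifiers(modifiers):
            for group in optimizer.param_groups:
                group["lr"] = lr  # the LR modifier sets the recipe's LR for this step
        scheduler.step()  # the HF scheduler runs after the recipe step
        history.append(optimizer.param_groups[0]["lr"])
    return history


def default_scheduler(optimizer: Optimizer) -> DecayScheduler:
    return DecayScheduler(optimizer, base_lr=0.1)


if __name__ == "__main__":
    recipe = [Modifier("pruning"), Modifier("lr_schedule", is_lr_modifier=True)]
    recipe_lrs = [0.05, 0.01, 0.001]
    # Without the override, the default scheduler overwrites the recipe's LR.
    print(train(recipe, recipe_lrs, lambda mods, opt: default_scheduler(opt)))
    # With the override, the recipe's LR schedule is preserved.
    print(train(recipe, recipe_lrs, lambda mods, opt: create_scheduler(mods, opt, default_scheduler)))
```

With the override, the recorded LRs follow the recipe (0.05, 0.01, 0.001). Without it, the default decay overwrites them after every step.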

Example LR schedule recipe for pruning + QAT:

Resulting LR schedule, as logged in W&B:

W&B: https://wandb.ai/neuralmagic/huggingface/runs/40ycsv8g?workspace=user-neuralmagic
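The following hypothetical two-phase LR function illustrates the kind of schedule a pruning + QAT recipe might express: a linear decay while pruning, then a restart at a higher LR followed by a second decay for QAT. The epoch boundaries, LR values, and linear shape are made-up assumptions and are not taken from the PR's recipe. The phase boundary is tied to the recipe's own epochs, which is why it is convenient to define the schedule in the recipe.

```python
from typing import Tuple


def pruning_qat_lr(
    epoch: float,
    prune_end: float = 10.0,
    qat_end: float = 14.0,
    prune_lrs: Tuple[float, float] = (1e-4, 1e-6),
    qat_lrs: Tuple[float, float] = (1e-5, 1e-6),
) -> float:
    """Hypothetical schedule: linear decay while pruning, then a second
    linear decay (restarted at qat_lrs[0]) during QAT."""
    if epoch < prune_end:
        start, end = prune_lrs
        frac = epoch / prune_end
    elif epoch < qat_end:
        start, end = qat_lrs
        frac = (epoch - prune_end) / (qat_end - prune_end)
    else:
        return qat_lrs[1]  # hold the final LR once the QAT phase ends
    return start + frac * (end - start)


if __name__ == "__main__":
    for epoch in range(0, 16, 2):
        print(f"epoch {epoch:2d}: lr={pruning_qat_lr(epoch):.2e}")
```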