-

-

Notifications

You must be signed in to change notification settings - Fork 5.7k

=== Optimization: Defer recursion into pointees

#43658

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

jl_egal Optimization: Defer recursion into pointees=== Optimization: Defer recursion into pointees

|

@nanosoldier |

1 similar comment

|

@nanosoldier |

|

Good idea, I like it! This sort of feels like the right way to do it to me, even if it's a tad slower in some cases. I wonder if it would help to ignore pointer fields completely in the first pass, instead of doing the |

|

Something went wrong when running your job: Unfortunately, the logs could not be uploaded. |

|

Yay, thanks! 🎉

Yeah, i had that idea too, and I could go either way. I chose to do it this way, because it's possible the NULL/NULL check could also allow us to return early: If a struct has 2 pointer fields and the second one is NULL in So it's another instance of front-loading checking the bits in the struct before recursing to pointees. So i think probably better to do it this way, since it's making the same tradeoff as the rest of the PR. Does that make sense to you, too? |

|

Anyone know why Nanosolider failed to upload the benchmark results? Is it because the PR is a draft or something? I'll mark it ready for review now. |

The size has just gotten too big and no one has fixed it. |

|

NULL fields are rare, so I think not worth special-casing. Actual timing is what matters though. |

|

I get these results for your last benchmark (where the PR is slower): before PR: PR: PR + ignore pointer fields on the first pass: So there is a slight improvement (and looks like I need a new laptop again 😂 ) |

|

Okay cool, makes sense! I think the "NULL fields are rare, so I think not worth special-casing." argument is strong here, so that makes sense. Thanks! 👍 👍 I can make that change. But first: So is it possible to see the results of the nanosoldier run? Or are they lost to the mysteries of the universe? It would be nice to see if this proves to be good or bad, and I'd like to be able to have these numbers before making any changes so we can compare? Thanks! |

|

@vtjnash has to manually retrieve them. |

|

Oops, sorry, I am behind on email. Here you go: |

|

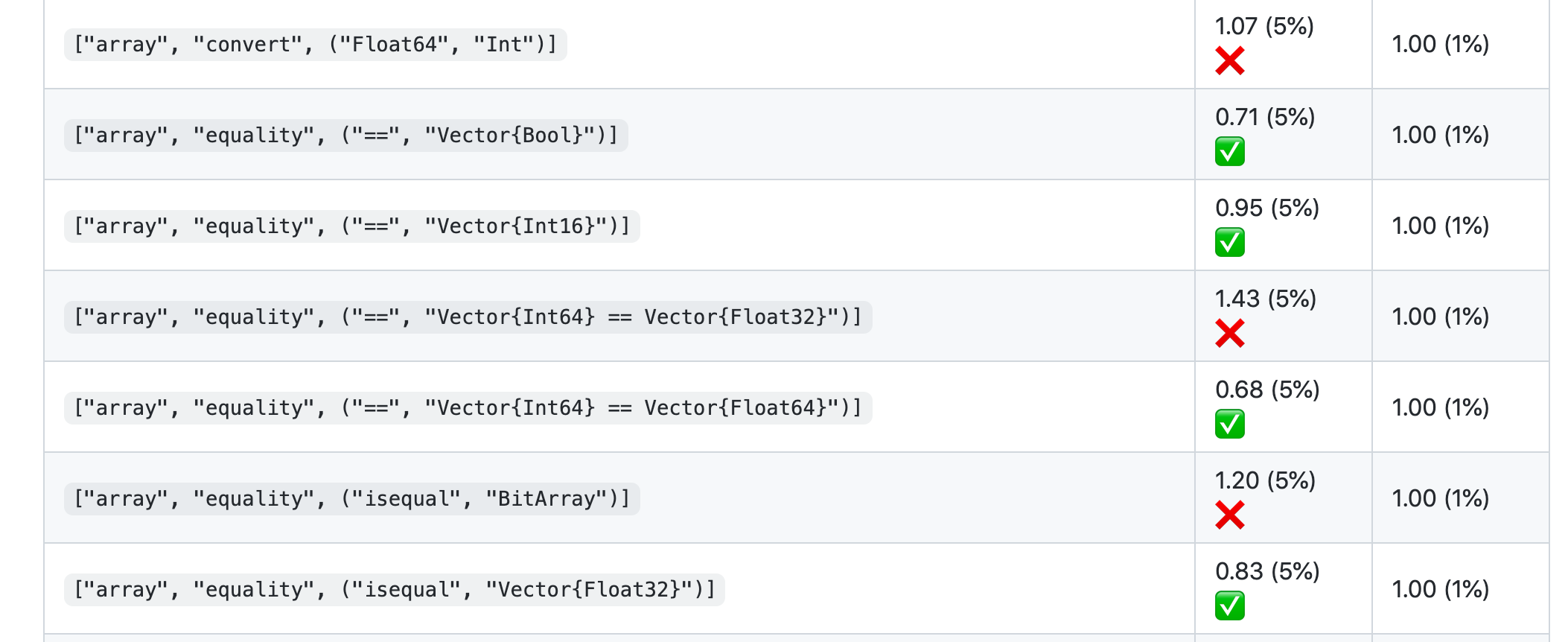

Thanks @vtjnash! What do the percentages mean? It looks like some got quite a bit better and some quite a bit worse, but in ways that don't exactly make sense to me... So I feel like probably this is mostly just noisy? Can any of you with more experience reading these weigh in? I'll give your suggestion a shot now, @JeffBezanson. |

|

@nanosoldier runbenchmarks(ALL, vs="@5449d1bfabdaeeb321c179a8344dc2852a989764") |

|

Nanosoldier (especially recently) is pretty noisy. |

|

@NHDaly, you need to code quote the part after the nanosoldier invocation. |

|

@nanosoldier |

|

Something went wrong when running your job: Unfortunately, the logs could not be uploaded. |

|

Oops, thanks @KristofferC. I did that right the first time (but also it didn't work then either.. 🤔 maybe i don't have permissions or something). Thanks @oscardssmith and @KristofferC |

|

it did work, it's just that there's a nanosoldier bug that means @vtjnash needs to post the log manually. |

|

I don't know if this is the comparison you wanted, since there are a lot of unrelated commits in that command, but here you go: https://github.com/JuliaCI/NanosoldierReports/blob/master/benchmark/by_hash/18eef47_vs_5449d1b/report.md |

|

@nanosoldier |

|

For more impact, we may want to update codegen.cpp to do the same ordering change as here also for |

|

Something went wrong when running your job: Unfortunately, the logs could not be uploaded. |

|

Still not what I mean to run though: @nanosoldier |

|

Your benchmark job has completed - possible performance regressions were detected. A full report can be found here. |

Oh, huh, maybe I don't exactly undrestand how Nanosoldier works. I think i was trying to compare the latest commit on this branch against the previous commit. Did that not do that? |

Yeah, makes sense. Good idea! I don't really feel like I have the chops to do that.. should it be done in a separate PR? Or could someone help with that here? Also, i really don't know what to make of these Nanosoldier results.. Some things appear to get quite a bit better, and some quite a bit worse, but it's hard to tell what's just noise? :( |

|

@NHDaly Can you rebase on the latest master? That should fix the |

Immutable struct comparisons with `===` can be arbitrarily expensive for

deeply recursive but (almost) equal objects. Whenever possible, it's

valuable to defer the potentially expensive recursion by first comparing

the struct fields for bitwise equality.

Before this commit, two structs are compare elementwise, in the order of

the struct definition, recursing when pointer fields are encountered.

This commit defers the recursion into pointed-to fields until after all

other non-pointer fields of the struct are compared.

This has two advantages:

1. It defers the expensive part of === comparison as long as possible,

in the hopes that we can exit early from dissimilarities discovered

elsewhere in the struct instances.

2. It improves cache-locality by scanning the whole struct before

jumping into any new places in memory (and reducing comparisons

needed on the current cache line after returning from the recursive

call).

The drawback is that you'll have to scan the pointer fields again, which

means potentially more cache misses if the struct is very large.

The best way to tell if this is helpful or harmful is benchmarking.

Here is the motivating benchmark, which indeed improves by 10x with this

commit, compared to master:

```julia

julia> using BenchmarkTools

julia> struct VN

val::Float32

next::Union{VN, Array} # put a mutable thing at the end, to prevent runtime from sharing instances

end

julia> struct NV

next::Union{NV, Array} # put a mutable thing at the end, to prevent runtime from sharing instances

val::Float32

end

julia> function make_chain_vn(n, sentinal)

head = VN(1, sentinal)

for i in 2:n

head = VN(rand(Int), head)

end

return head

end

make_chain_vn (generic function with 1 method)

julia> function make_chain_nv(n, sentinal)

head = NV(sentinal, 1)

for i in 2:n

head = NV(head, rand(Int))

end

return head

end

make_chain_nv (generic function with 1 method)

julia> vn1, vn2 = make_chain_vn(10000, []), make_chain_vn(10000, []);

julia> nv1, nv2 = make_chain_nv(10000, []), make_chain_nv(10000, []);

```

Master:

```

julia> @Btime $vn1 === $vn2

7.562 ns (0 allocations: 0 bytes)

false

julia> @Btime $nv1 === $nv2 # slower, since it recurses pointers unnecessarily

76.952 μs (0 allocations: 0 bytes)

false

```

After this commit:

```

julia> @Btime $vn1 === $vn2

8.597 ns (0 allocations: 0 bytes)

false

julia> @Btime $nv1 === $nv2 # We get to skip the recursion and exit early. :)

10.280 ns (0 allocations: 0 bytes)

false

```

However, I think that there are probably other benchmarks where it

harms performance, so we'll have to see...

For example, here's one: In the exact opposite case as above, if the two

objects _are_ (almost) equal, necessitating checking every object, the

`NV` comparisons could have exited after all the recursive pointer

checks, and never compare the fields, whereas now the fields are checked

first, so this gets slower.

I'm not exactly sure why the `VN` comparisons get somewhat slower too,

but it's maybe because of the second scan mentioned above.

```julia

julia> function make_chain_nv(n, sentinal)

head = NV(sentinal, 1)

for i in 2:n

head = NV(head, i)

end

return head

end

make_chain_nv (generic function with 1 method)

julia> function make_chain_vn(n, sentinal)

head = VN(1, sentinal)

for i in 2:n

head = VN(i, head)

end

return head

end

make_chain_vn (generic function with 1 method)

julia> vn1, vn2 = make_chain_vn(10000, []), make_chain_vn(10000, []);

julia> nv1, nv2 = make_chain_nv(10000, []), make_chain_nv(10000, []);

```

Master:

```

julia> @Btime $vn1 === $vn2

95.996 μs (0 allocations: 0 bytes)

false

julia> @Btime $nv1 === $nv2

82.192 μs (0 allocations: 0 bytes)

false

```

This commit:

```

julia> @Btime $vn1 === $vn2

127.512 μs (0 allocations: 0 bytes)

false

julia> @Btime $nv1 === $nv2

126.837 μs (0 allocations: 0 bytes)

```

Delay the nullptr checks until all non-ptr fields have been compared, since we have to go back to those anyways to follow the pointers. Co-authored-by:Jeff Bezanson <[email protected]>

7d8c933 to

ba718e8

Compare

|

Done, thanks |

* jl_egal Optimization: Defer recursion into pointees

Immutable struct comparisons with `===` can be arbitrarily expensive for

deeply recursive but (almost) equal objects. Whenever possible, it's

valuable to defer the potentially expensive recursion by first comparing

the struct fields for bitwise equality.

Before this commit, two structs are compare elementwise, in the order of

the struct definition, recursing when pointer fields are encountered.

This commit defers the recursion into pointed-to fields until after all

other non-pointer fields of the struct are compared.

This has two advantages:

1. It defers the expensive part of === comparison as long as possible,

in the hopes that we can exit early from dissimilarities discovered

elsewhere in the struct instances.

2. It improves cache-locality by scanning the whole struct before

jumping into any new places in memory (and reducing comparisons

needed on the current cache line after returning from the recursive

call).

The drawback is that you'll have to scan the pointer fields again, which

means potentially more cache misses if the struct is very large.

The best way to tell if this is helpful or harmful is benchmarking.

Here is the motivating benchmark, which indeed improves by 10x with this

commit, compared to master:

```julia

julia> using BenchmarkTools

julia> struct VN

val::Float32

next::Union{VN, Array} # put a mutable thing at the end, to prevent runtime from sharing instances

end

julia> struct NV

next::Union{NV, Array} # put a mutable thing at the end, to prevent runtime from sharing instances

val::Float32

end

julia> function make_chain_vn(n, sentinal)

head = VN(1, sentinal)

for i in 2:n

head = VN(rand(Int), head)

end

return head

end

make_chain_vn (generic function with 1 method)

julia> function make_chain_nv(n, sentinal)

head = NV(sentinal, 1)

for i in 2:n

head = NV(head, rand(Int))

end

return head

end

make_chain_nv (generic function with 1 method)

julia> vn1, vn2 = make_chain_vn(10000, []), make_chain_vn(10000, []);

julia> nv1, nv2 = make_chain_nv(10000, []), make_chain_nv(10000, []);

```

Master:

```

julia> @Btime $vn1 === $vn2

7.562 ns (0 allocations: 0 bytes)

false

julia> @Btime $nv1 === $nv2 # slower, since it recurses pointers unnecessarily

76.952 μs (0 allocations: 0 bytes)

false

```

After this commit:

```

julia> @Btime $vn1 === $vn2

8.597 ns (0 allocations: 0 bytes)

false

julia> @Btime $nv1 === $nv2 # We get to skip the recursion and exit early. :)

10.280 ns (0 allocations: 0 bytes)

false

```

However, I think that there are probably other benchmarks where it

harms performance, so we'll have to see...

For example, here's one: In the exact opposite case as above, if the two

objects _are_ (almost) equal, necessitating checking every object, the

`NV` comparisons could have exited after all the recursive pointer

checks, and never compare the fields, whereas now the fields are checked

first, so this gets slower.

I'm not exactly sure why the `VN` comparisons get somewhat slower too,

but it's maybe because of the second scan mentioned above.

```julia

julia> function make_chain_nv(n, sentinal)

head = NV(sentinal, 1)

for i in 2:n

head = NV(head, i)

end

return head

end

make_chain_nv (generic function with 1 method)

julia> function make_chain_vn(n, sentinal)

head = VN(1, sentinal)

for i in 2:n

head = VN(i, head)

end

return head

end

make_chain_vn (generic function with 1 method)

julia> vn1, vn2 = make_chain_vn(10000, []), make_chain_vn(10000, []);

julia> nv1, nv2 = make_chain_nv(10000, []), make_chain_nv(10000, []);

```

Master:

```

julia> @Btime $vn1 === $vn2

95.996 μs (0 allocations: 0 bytes)

false

julia> @Btime $nv1 === $nv2

82.192 μs (0 allocations: 0 bytes)

false

```

This commit:

```

julia> @Btime $vn1 === $vn2

127.512 μs (0 allocations: 0 bytes)

false

julia> @Btime $nv1 === $nv2

126.837 μs (0 allocations: 0 bytes)

```

* Ignore pointer fields completely in the first pass of ===

Delay the nullptr checks until all non-ptr fields have been compared,

since we have to go back to those anyways to follow the pointers.

Co-authored-by:Jeff Bezanson <[email protected]>

* jl_egal Optimization: Defer recursion into pointees

Immutable struct comparisons with `===` can be arbitrarily expensive for

deeply recursive but (almost) equal objects. Whenever possible, it's

valuable to defer the potentially expensive recursion by first comparing

the struct fields for bitwise equality.

Before this commit, two structs are compare elementwise, in the order of

the struct definition, recursing when pointer fields are encountered.

This commit defers the recursion into pointed-to fields until after all

other non-pointer fields of the struct are compared.

This has two advantages:

1. It defers the expensive part of === comparison as long as possible,

in the hopes that we can exit early from dissimilarities discovered

elsewhere in the struct instances.

2. It improves cache-locality by scanning the whole struct before

jumping into any new places in memory (and reducing comparisons

needed on the current cache line after returning from the recursive

call).

The drawback is that you'll have to scan the pointer fields again, which

means potentially more cache misses if the struct is very large.

The best way to tell if this is helpful or harmful is benchmarking.

Here is the motivating benchmark, which indeed improves by 10x with this

commit, compared to master:

```julia

julia> using BenchmarkTools

julia> struct VN

val::Float32

next::Union{VN, Array} # put a mutable thing at the end, to prevent runtime from sharing instances

end

julia> struct NV

next::Union{NV, Array} # put a mutable thing at the end, to prevent runtime from sharing instances

val::Float32

end

julia> function make_chain_vn(n, sentinal)

head = VN(1, sentinal)

for i in 2:n

head = VN(rand(Int), head)

end

return head

end

make_chain_vn (generic function with 1 method)

julia> function make_chain_nv(n, sentinal)

head = NV(sentinal, 1)

for i in 2:n

head = NV(head, rand(Int))

end

return head

end

make_chain_nv (generic function with 1 method)

julia> vn1, vn2 = make_chain_vn(10000, []), make_chain_vn(10000, []);

julia> nv1, nv2 = make_chain_nv(10000, []), make_chain_nv(10000, []);

```

Master:

```

julia> @Btime $vn1 === $vn2

7.562 ns (0 allocations: 0 bytes)

false

julia> @Btime $nv1 === $nv2 # slower, since it recurses pointers unnecessarily

76.952 μs (0 allocations: 0 bytes)

false

```

After this commit:

```

julia> @Btime $vn1 === $vn2

8.597 ns (0 allocations: 0 bytes)

false

julia> @Btime $nv1 === $nv2 # We get to skip the recursion and exit early. :)

10.280 ns (0 allocations: 0 bytes)

false

```

However, I think that there are probably other benchmarks where it

harms performance, so we'll have to see...

For example, here's one: In the exact opposite case as above, if the two

objects _are_ (almost) equal, necessitating checking every object, the

`NV` comparisons could have exited after all the recursive pointer

checks, and never compare the fields, whereas now the fields are checked

first, so this gets slower.

I'm not exactly sure why the `VN` comparisons get somewhat slower too,

but it's maybe because of the second scan mentioned above.

```julia

julia> function make_chain_nv(n, sentinal)

head = NV(sentinal, 1)

for i in 2:n

head = NV(head, i)

end

return head

end

make_chain_nv (generic function with 1 method)

julia> function make_chain_vn(n, sentinal)

head = VN(1, sentinal)

for i in 2:n

head = VN(i, head)

end

return head

end

make_chain_vn (generic function with 1 method)

julia> vn1, vn2 = make_chain_vn(10000, []), make_chain_vn(10000, []);

julia> nv1, nv2 = make_chain_nv(10000, []), make_chain_nv(10000, []);

```

Master:

```

julia> @Btime $vn1 === $vn2

95.996 μs (0 allocations: 0 bytes)

false

julia> @Btime $nv1 === $nv2

82.192 μs (0 allocations: 0 bytes)

false

```

This commit:

```

julia> @Btime $vn1 === $vn2

127.512 μs (0 allocations: 0 bytes)

false

julia> @Btime $nv1 === $nv2

126.837 μs (0 allocations: 0 bytes)

```

* Ignore pointer fields completely in the first pass of ===

Delay the nullptr checks until all non-ptr fields have been compared,

since we have to go back to those anyways to follow the pointers.

Co-authored-by:Jeff Bezanson <[email protected]>

* jl_egal Optimization: Defer recursion into pointees

Immutable struct comparisons with `===` can be arbitrarily expensive for

deeply recursive but (almost) equal objects. Whenever possible, it's

valuable to defer the potentially expensive recursion by first comparing

the struct fields for bitwise equality.

Before this commit, two structs are compare elementwise, in the order of

the struct definition, recursing when pointer fields are encountered.

This commit defers the recursion into pointed-to fields until after all

other non-pointer fields of the struct are compared.

This has two advantages:

1. It defers the expensive part of === comparison as long as possible,

in the hopes that we can exit early from dissimilarities discovered

elsewhere in the struct instances.

2. It improves cache-locality by scanning the whole struct before

jumping into any new places in memory (and reducing comparisons

needed on the current cache line after returning from the recursive

call).

The drawback is that you'll have to scan the pointer fields again, which

means potentially more cache misses if the struct is very large.

The best way to tell if this is helpful or harmful is benchmarking.

Here is the motivating benchmark, which indeed improves by 10x with this

commit, compared to master:

```julia

julia> using BenchmarkTools

julia> struct VN

val::Float32

next::Union{VN, Array} # put a mutable thing at the end, to prevent runtime from sharing instances

end

julia> struct NV

next::Union{NV, Array} # put a mutable thing at the end, to prevent runtime from sharing instances

val::Float32

end

julia> function make_chain_vn(n, sentinal)

head = VN(1, sentinal)

for i in 2:n

head = VN(rand(Int), head)

end

return head

end

make_chain_vn (generic function with 1 method)

julia> function make_chain_nv(n, sentinal)

head = NV(sentinal, 1)

for i in 2:n

head = NV(head, rand(Int))

end

return head

end

make_chain_nv (generic function with 1 method)

julia> vn1, vn2 = make_chain_vn(10000, []), make_chain_vn(10000, []);

julia> nv1, nv2 = make_chain_nv(10000, []), make_chain_nv(10000, []);

```

Master:

```

julia> @Btime $vn1 === $vn2

7.562 ns (0 allocations: 0 bytes)

false

julia> @Btime $nv1 === $nv2 # slower, since it recurses pointers unnecessarily

76.952 μs (0 allocations: 0 bytes)

false

```

After this commit:

```

julia> @Btime $vn1 === $vn2

8.597 ns (0 allocations: 0 bytes)

false

julia> @Btime $nv1 === $nv2 # We get to skip the recursion and exit early. :)

10.280 ns (0 allocations: 0 bytes)

false

```

However, I think that there are probably other benchmarks where it

harms performance, so we'll have to see...

For example, here's one: In the exact opposite case as above, if the two

objects _are_ (almost) equal, necessitating checking every object, the

`NV` comparisons could have exited after all the recursive pointer

checks, and never compare the fields, whereas now the fields are checked

first, so this gets slower.

I'm not exactly sure why the `VN` comparisons get somewhat slower too,

but it's maybe because of the second scan mentioned above.

```julia

julia> function make_chain_nv(n, sentinal)

head = NV(sentinal, 1)

for i in 2:n

head = NV(head, i)

end

return head

end

make_chain_nv (generic function with 1 method)

julia> function make_chain_vn(n, sentinal)

head = VN(1, sentinal)

for i in 2:n

head = VN(i, head)

end

return head

end

make_chain_vn (generic function with 1 method)

julia> vn1, vn2 = make_chain_vn(10000, []), make_chain_vn(10000, []);

julia> nv1, nv2 = make_chain_nv(10000, []), make_chain_nv(10000, []);

```

Master:

```

julia> @Btime $vn1 === $vn2

95.996 μs (0 allocations: 0 bytes)

false

julia> @Btime $nv1 === $nv2

82.192 μs (0 allocations: 0 bytes)

false

```

This commit:

```

julia> @Btime $vn1 === $vn2

127.512 μs (0 allocations: 0 bytes)

false

julia> @Btime $nv1 === $nv2

126.837 μs (0 allocations: 0 bytes)

```

* Ignore pointer fields completely in the first pass of ===

Delay the nullptr checks until all non-ptr fields have been compared,

since we have to go back to those anyways to follow the pointers.

Co-authored-by:Jeff Bezanson <[email protected]>

|

Explicitly referencing #44712 from here. |

Immutable struct comparisons with

===can be arbitrarily expensive fordeeply recursive but (almost) equal objects. Whenever possible, it's

valuable to defer the potentially expensive recursion by first comparing

the struct fields for bitwise equality.

Before this commit, two structs are compare elementwise, in the order of

the struct definition, recursing when pointer fields are encountered.

This commit defers the recursion into pointed-to fields until after all

other non-pointer fields of the struct are compared.

This has two advantages:

in the hopes that we can exit early from dissimilarities discovered

elsewhere in the struct instances.

jumping into any new places in memory (and reducing comparisons

needed on the current cache line after returning from the recursive

call).

The drawback is that you'll have to scan the pointer fields again, which

means potentially more cache misses if the struct is very large.

The best way to tell if this is helpful or harmful is benchmarking.

Here is the motivating benchmark, which indeed improves by 10x with this

commit, compared to master:

Master:

After this commit:

However, I think that there are probably other benchmarks where it

harms performance, so we'll have to see...

For example, here's one: In the exact opposite case as above, if the two

objects are (almost) equal, necessitating checking every object, the

NVcomparisons could have exited after all the recursive pointerchecks, and never compare the fields, whereas now the fields are checked

first, so this gets slower.

I'm not exactly sure why the

VNcomparisons get somewhat slower too,but it's maybe because of the second scan mentioned above.

Master:

This commit:

We stumbled across this potential optimization while reading through the code for

compare_fields(). Hopefully it's beneficial, but we'll see! :)Co-Authored-By: @nystrom