-

Notifications

You must be signed in to change notification settings - Fork 1.2k

Update VM priority (cpu_shares) when live scaling it #6031

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

GutoVeronezi

left a comment

GutoVeronezi

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

CLGTM, tested manually.

I've pointed just some writing fixes.

...ypervisors/kvm/src/main/java/com/cloud/hypervisor/kvm/resource/LibvirtComputingResource.java

Outdated

Show resolved

Hide resolved

...vm/src/main/java/com/cloud/hypervisor/kvm/resource/wrapper/LibvirtScaleVmCommandWrapper.java

Outdated

Show resolved

Hide resolved

DaanHoogland

left a comment

DaanHoogland

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

cltgm but some remarks and questions.

...ypervisors/kvm/src/main/java/com/cloud/hypervisor/kvm/resource/LibvirtComputingResource.java

Outdated

Show resolved

Hide resolved

...vm/src/main/java/com/cloud/hypervisor/kvm/resource/wrapper/LibvirtScaleVmCommandWrapper.java

Outdated

Show resolved

Hide resolved

| protected void updateCpuShares(Domain dm, int newCpuShares) throws LibvirtException { | ||

| int oldCpuShares = LibvirtComputingResource.getCpuShares(dm); | ||

|

|

||

| if (oldCpuShares < newCpuShares) { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

only up, never down? is this like nice for users or can the root user force something?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

In ACS, the live scale process does not allow to reduce the resource of a running VM. Therefore, it can only occur upwards and the cpu_shares is calculated as follow:

cpu_shares = number of vCPUs X vCPU frequency in Mhz.

This if (the conditional) is to check if the vCPUs or CPU speed is higher than the current compute offering, as it makes no sense to decrease the priority of a VM that is having its resources increased. In other words, this if is to check if it is a memory scale only, which should not have its priority changed in the host.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

it looks the new speed >= current speed, new cpu core >= current cpu core

so new cpu shares is always equal or bigger than old cpu shares.

the check may be unnecessary but looks ok.

Co-authored-by: dahn <[email protected]> Co-authored-by: Daniel Augusto Veronezi Salvador <[email protected]>

...vm/src/main/java/com/cloud/hypervisor/kvm/resource/wrapper/LibvirtScaleVmCommandWrapper.java

Show resolved

Hide resolved

sureshanaparti

left a comment

sureshanaparti

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

code LGTM

|

@blueorangutan package |

|

@DaanHoogland a Jenkins job has been kicked to build packages. I'll keep you posted as I make progress. |

|

Packaging result: ✔️ el7 ✖️ el8 ✔️ debian ✔️ suse15. SL-JID 2714 |

|

@blueorangutan test |

|

@DaanHoogland a Trillian-Jenkins test job (centos7 mgmt + kvm-centos7) has been kicked to run smoke tests |

weizhouapache

left a comment

weizhouapache

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

code lgtm

|

Trillian test result (tid-3440)

|

|

@blueorangutan package |

|

@BryanMLima a Jenkins job has been kicked to build packages. I'll keep you posted as I make progress. |

|

Packaging result: ✔️ el7 ✔️ el8 ✔️ debian ✔️ suse15. SL-JID 2730 |

|

@blueorangutan package |

|

@weizhouapache a Jenkins job has been kicked to build packages. I'll keep you posted as I make progress. |

|

Packaging result: ✔️ el7 ✔️ el8 ✔️ debian ✔️ suse15. SL-JID 2743 |

|

@blueorangutan test |

|

@blueorangutan test ubuntu20 kvm-ubuntu20 |

|

@weizhouapache a Trillian-Jenkins test job (ubuntu20 mgmt + kvm-ubuntu20) has been kicked to run smoke tests |

|

@blueorangutan test |

|

@weizhouapache a Trillian-Jenkins test job (centos7 mgmt + kvm-centos7) has been kicked to run smoke tests |

|

Trillian test result (tid-3456)

|

|

Trillian test result (tid-3452)

|

|

@blueorangutan test ubuntu20 kvm-ubuntu20 |

|

@weizhouapache a Trillian-Jenkins test job (ubuntu20 mgmt + kvm-ubuntu20) has been kicked to run smoke tests |

|

Trillian test result (tid-3466)

|

|

@weizhouapache Could you run the tests again, since I am not in the whitelist? I verified the errors and, apparently, they have no relation to the code I wrote. |

|

@blueorangutan test ubuntu20 kvm-ubuntu20 |

|

@weizhouapache a Trillian-Jenkins test job (ubuntu20 mgmt + kvm-ubuntu20) has been kicked to run smoke tests |

|

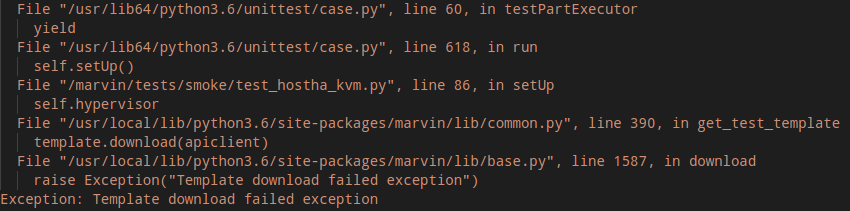

Trillian Build Failed (tid-3519) |

|

@blueorangutan test ubuntu20 kvm-ubuntu20 |

|

@weizhouapache a Trillian-Jenkins test job (ubuntu20 mgmt + kvm-ubuntu20) has been kicked to run smoke tests |

|

Trillian test result (tid-3520)

|

|

@blueorangutan package |

|

@weizhouapache a Jenkins job has been kicked to build packages. I'll keep you posted as I make progress. |

|

Packaging result: ✔️ el7 ✔️ el8 ✔️ debian ✔️ suse15. SL-JID 2828 |

|

@blueorangutan test ubuntu20 kvm-ubuntu20 |

|

@weizhouapache a Trillian-Jenkins test job (ubuntu20 mgmt + kvm-ubuntu20) has been kicked to run smoke tests |

|

Trillian test result (tid-3559)

|

|

The recurrent errors |

|

Ran the above failed tests on a 4.16.1 env - and the same behavior was observed. Test failures as mentioned aren't related to this PR - the specific issue is: |

|

thanks @Pearl1594 for investigation. let's merge this PR @nvazquez |

|

I have created an issue for tracking. #6114 |

|

Merging based on approvals and tests results - failing tests are only for ubuntu 20 which are being tracked on #6114 |

Description

This PR addresses an issue when live scaling a VM with the KVM hypervisor. When the VM has the CPU cores or CPU speed increased, the cpu_shares (priority) of the VM is not updated accordingly. To overcome that, it was needed to manually restart the VM or using the command:

virsh schedinfo --domain <vm_internal_name> --live cpu_shares=<cpu_shares_value>. This PR changes this to automatically update the cpu_shares when the CPU cores or speed is increased. It is worth mention that the current behavior caused steal time when VMs would compete for the CPU after a dynamic scale process.Steps to reproduce:

Host

Custom service offering

VM (deploy config)

The cpu_shares of a VM is calculated as follow:

cpu_shares = number of vCPUs X vCPU frequency in MhzUsing the command

virsh schedinfo --domain <internal_vm_name>the cpu_shares displayed was 2350, which is expected. However, when live scaling the VM to 4 vCPUs, which should return 9400 shares, it was the initial 2350 shares. If the VM is restarted, however, the 9400 shares is used, as it should without the need to restart the VM.Types of changes

Feature/Enhancement Scale or Bug Severity

Feature/Enhancement Scale

Bug Severity

How Has This Been Tested?

Using the same configuration and steps I used to describe the bug. Then, when live scaling a VM, it returns the correct cpu_shares without the need to restart it. Therefore, when the VM with the 2350 shares is live scaled to 4 vCPUs, the cpu_shares when using the command mentioned above, returned the correct value of 9400.

Moreover, I created unit tests for the affected methods.