-

Notifications

You must be signed in to change notification settings - Fork 227

Update YourKit usage in developer tools #65

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

| - Untar this file somewhere (in `/root` in our case): `tar xvjf yjp-12.0.5-linux.tar.bz2` | ||

| - Copy the expanded YourKit files to each node using copy-dir: `~/spark-ec2/copy-dir /root/yjp-12.0.5` | ||

| - Unzip this file somewhere (in `/root` in our case): `unzip YourKit-JavaProfiler-2017.02-b66.zip` | ||

| - Copy the expanded YourKit files to each node using copy-dir: `~/spark-ec2/copy-dir /root/YourKit-JavaProfiler-2017.02` |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Could we still use spark-ec2/copy-dir? (not sure to keep spark-ec2 updated)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hi @shivaram, if I understood correctly, amplab/spark-ec2 is managed by you. Would you mind if I ask whether we should keep this here? The change itself looks fine if I haven't missed something but I am less sure if we should keep this here or not.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think its probably good to add some wording on top saying These instructions apply to when Spark is run using the spark-ec2[link to amplab/spark-ec2] scripts

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Since this yourkit usage is not only for spark-ec2, I think the instruction might make others misunderstood. WDYT? @HyukjinKwon

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Actually, that's what I was thinking too. But I would rather avoid to fix it here now as I guess it maybe needs to wait for some more opinions / discussion (e.g., other profiles tools, description for copying it to other nodes, checking duplicated documentation and etc.). I am fine with it if any committer strongly prefers (I am +0).

Otherwise, let's push what we are sure of first as the current changes look obviously something to be fixed. I don't want to make this PR complicated for now and the original intention looks describing yourkit with ec2 anyway.

|

@srowen could you check? thanks! |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Look fine to me except a question. Would you mind regenerating HTML by jekyll build and include the changes too? This will make some diff, for example in site/developer-tools.html.

developer-tools.md

Outdated

| and adding the lines | ||

| ``` | ||

| SPARK_DAEMON_JAVA_OPTS+=" -agentpath:/root/yjp-12.0.5/bin/linux-x86-64/libyjpagent.so=sampling" | ||

| SPARK_DAEMON_JAVA_OPTS+=" -agentpath:/root/YourKit-JavaProfiler-2017.02/bin/linux-x86-64/libyjpagent.so=sampling,port=10001" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I thought port in the agent can actually omitted because it actually allows omitting the port (detecting the ports 10001-10010) when YourKit in the desktop connects to the remote applications in the agents.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

yea, I a little misunderstood this. I'll update soon.

|

@HyukjinKwon Thanks! yea, sure. I'll add gen'd html, too. |

|

@HyukjinKwon I only added diffs in |

|

Ah, sure. That's the same case to me as well. I am not aware of why in details but I just think different versions might affect how to generate HTMLs and/or some custom changes were made to the HTMLs. |

|

oh, yea. ok. Even in this pr, is it okay to filter out unnecessary diffs manually? |

|

Yes, I think that should be safer. |

|

ok, I'll update. |

|

ok, updated |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I at least manually tested and verified YourKit (not ec2 but it looks correct). It looks fine to me except the minor comment.

| <li>Copy the expanded YourKit files to each node using copy-dir: <code>~/spark-ec2/copy-dir /root/YourKit-JavaProfiler-2017.02</code></li> | ||

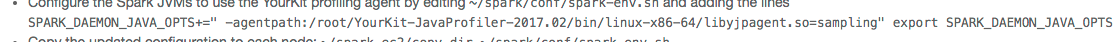

| <li>Configure the Spark JVMs to use the YourKit profiling agent by editing <code>~/spark/conf/spark-env.sh</code> | ||

| and adding the lines | ||

| <pre><code>SPARK_DAEMON_JAVA_OPTS+=" -agentpath:/root/yjp-12.0.5/bin/linux-x86-64/libyjpagent.so=sampling" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@maropu, it looks we should keep <pre>. I just double checked the format is broken:

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

ok, will recheck

|

I also checked if this worked well in ec2. |

| SPARK_DAEMON_JAVA_OPTS+=" -agentpath:/root/YourKit-JavaProfiler-2017.02/bin/linux-x86-64/libyjpagent.so=sampling" | ||

| export SPARK_DAEMON_JAVA_OPTS | ||

| SPARK_JAVA_OPTS+=" -agentpath:/root/yjp-12.0.5/bin/linux-x86-64/libyjpagent.so=sampling" | ||

| export SPARK_JAVA_OPTS |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Wait .. is it okay to remove this out without setting other alternatives (e.g., in cluster mode)?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I see and I didn't check. probably, is it better that we use spark.executor.extraJavaOptions and spark.driver.extraJavaOptions for this example? I think these options seem to work even in a cluster mode?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I checked the previous pr (apache/spark#17212) to remove SPARK_JAVA_OPTS and the removed warning messages suggested we should use instead:

|SPARK_JAVA_OPTS was detected (set to '$value').

|This is deprecated in Spark 1.0+.

|

|Please instead use:

| - ./spark-submit with conf/spark-defaults.conf to set defaults for an application

| - ./spark-submit with --driver-java-options to set -X options for a driver

| - spark.executor.extraJavaOptions to set -X options for executors

| - SPARK_DAEMON_JAVA_OPTS to set java options for standalone daemons (master or worker)

From this message, SPARK_DAEMON_JAVA_OPTS seems to work in standalone only... I need to check more..

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, I think this isn't my area .. (I am less sure if it'd work in Yarn and etc.). Probably the safe choice should be to set both ..

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

yea, ok. It's okay that we just wait for other qualified developer's comments :)

|

I checked the related code and I found Btw, I noticed that this option is not described in conf/spark-env.sh.template... |

|

Pushed to the site, @maropu . You'll have to close this manually as I squashed the commits |

|

ok, thanks, Sean! |

No description provided.