-

Notifications

You must be signed in to change notification settings - Fork 28.9k

[SPARK-22753][SQL] Get rid of dataSource.writeAndRead #19941

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

Test build #84707 has finished for PR 19941 at commit

|

|

retest this please |

|

Test build #84730 has finished for PR 19941 at commit

|

|

Maybe something wrong with the Jenkins env? I found the build from 84707 to 84733 failed in same reason: |

|

|

||

| providingClass.newInstance() match { | ||

| case dataSource: CreatableRelationProvider => | ||

| case dataSource: RelationProvider => |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

To the reviewers, current implementation here made datasource should both inherit RelationProvider and CreatableRelationProvider(like the UT), because here just want get the relation by using RelationProvider.createRelation but not save a DF to a destination by using CreatableRelationProvider.createRelation. I need more advise to do better.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

They are different public traits. We are unable to change this.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

If here use CreatableRelationProvider.createRelation, will it write to destination one more time?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, it will write to the destination, but InsertIntoDataSourceDirCommand only supports FileFormat

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ah, I see.

|

retest this please |

|

Test build #84754 has finished for PR 19941 at commit

|

|

retest this please |

|

Test build #84759 has finished for PR 19941 at commit

|

| val saveMode = if (overwrite) SaveMode.Overwrite else SaveMode.ErrorIfExists | ||

| try { | ||

| sparkSession.sessionState.executePlan(dataSource.planForWriting(saveMode, query)) | ||

| dataSource.writeAndRead(saveMode, query) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

How about changing it to

sparkSession.sessionState.executePlan(dataSource.planForWriting(saveMode, query)).toRddThere was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I implemented like this at first, but after checking this patch (https://github.com/apache/spark/pull/18064/files), I changed to current implementation, is the wrapping of execution id unnecessary here?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think it is not needed. You can try it.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

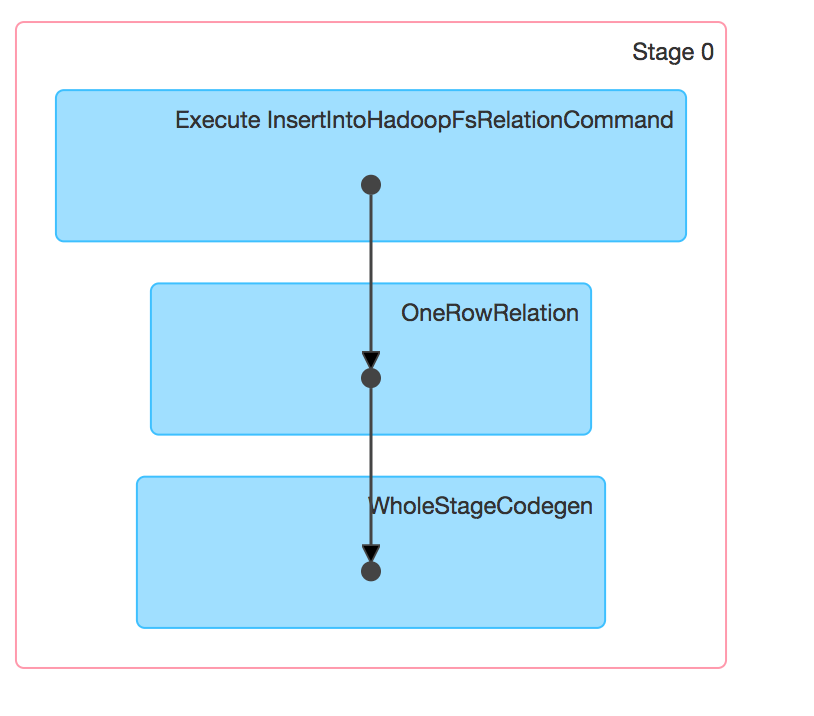

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Could we revert all the changes except this?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Revert done.

Sorry, maybe I misunderstand your words of 'get rid of dataSource.writeAndRead'. Like you and Wenchen's discussion in #16481, shouldn't we make writeAndRead just return a BaseRelation without write to the destination? Thank you for your patient reply.

|

Test build #84916 has finished for PR 19941 at commit

|

|

LGTM pending Jenkins |

|

Test build #84939 has finished for PR 19941 at commit

|

|

Thanks! Merged to master. |

What changes were proposed in this pull request?

As the discussion in #16481 and #18975 (comment)

Currently the BaseRelation returned by

dataSource.writeAndReadonly used inCreateDataSourceTableAsSelect, planForWriting and writeAndRead has some common code paths.In this patch I removed the writeAndRead function and added the getRelation function which only use in

CreateDataSourceTableAsSelectCommandwhile saving data to non-existing table.How was this patch tested?

Existing UT