[SPARK-23499][MESOS] Support for priority queues in Mesos scheduler #20665

|

@pgillet since you are modifying the ui could you add a screenshot in the description? |

|

@skonto I attached a screenshot in the JIRA. |

|

@pgillet why not here it makes more sense. |

|

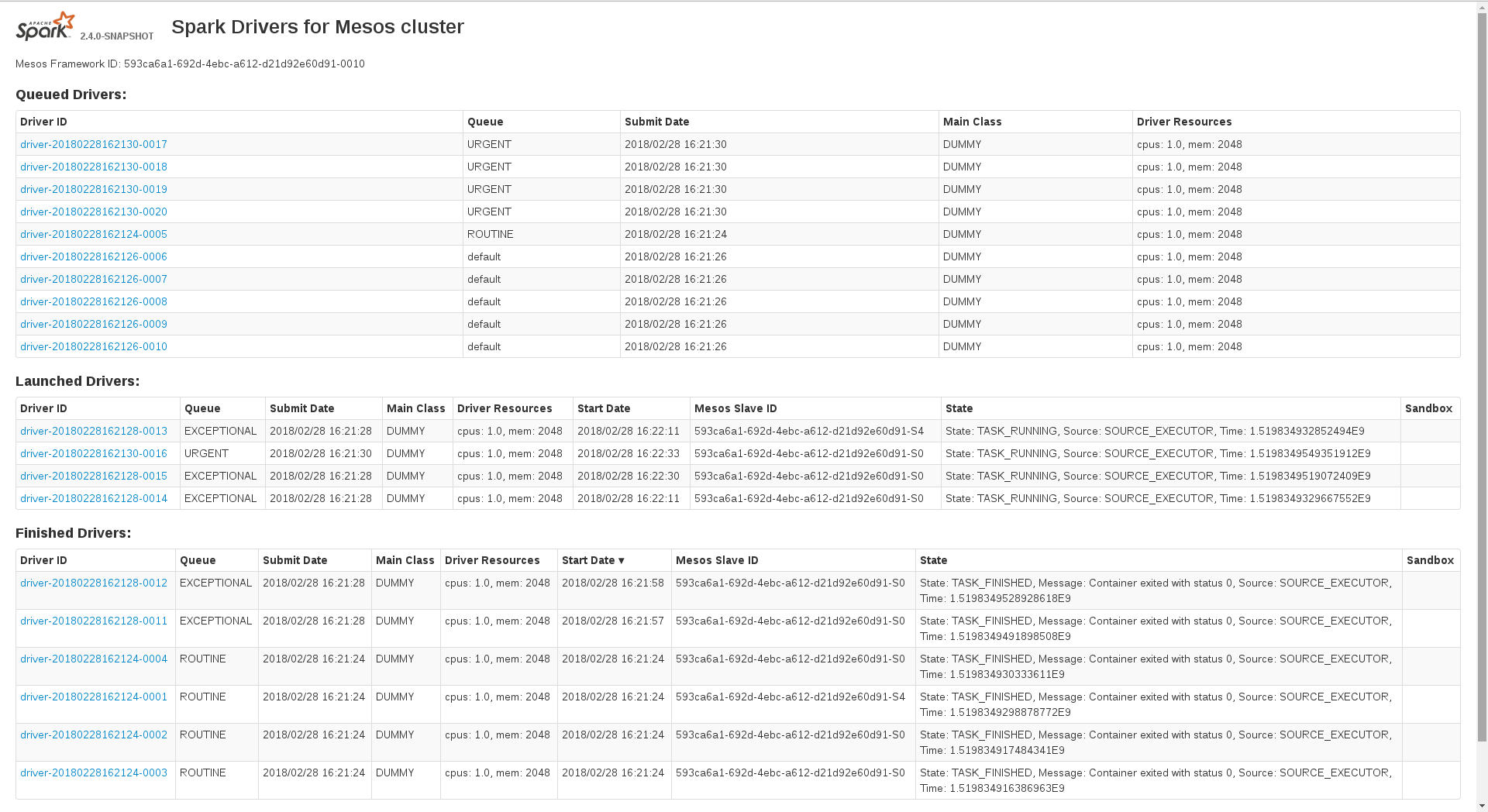

@skonto Below a screenshot of the MesosClusterDispatcher UI showing Spark jobs along with the queue to which they are submitted: |

|

any thought on this? |

|

Can one of the admins verify this patch? |

|

We're closing this PR because it hasn't been updated in a while. This isn't a judgement on the merit of the PR in any way. It's just a way of keeping the PR queue manageable. |

### What changes were proposed in this pull request?

I am pushing this PR because I could not reopen the stale one, #20665.

As with YARN or Kubernetes, Mesos users should be able to specify priority queues to define a workload management policy for queued drivers in the Mesos Cluster Dispatcher. This would ensure a scheduling order when enqueuing Spark applications for a Mesos cluster.

### Why are the changes needed?

Currently, submitted drivers are kept in order of submission: the first driver added to the queue is the first one to be executed (FIFO), regardless of its priority. See https://issues.apache.org/jira/projects/SPARK/issues/SPARK-23499 for more details.

### Does this PR introduce _any_ user-facing change?

The MesosClusterDispatcher UI now shows Spark jobs along with the queue to which they are submitted.

### How was this patch tested?

Unit tests. Also, this feature has been in production for 3 years, as we have been running a modified Spark 2.4.0 since then.

Closes #30352 from pgillet/mesos-scheduler-priority-queue.

Lead-authored-by: Pascal Gillet <[email protected]>
Co-authored-by: pgillet <[email protected]>
Signed-off-by: Dongjoon Hyun <[email protected]>
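
To illustrate the difference between plain FIFO ordering and priority queues for waiting drivers, here is a minimal Python sketch. It is not the dispatcher's actual code. The queue names, the priority values, the `DriverQueue` class, and the rules that a higher number means higher priority and that drivers in the same queue stay in submission order are all assumptions made for this illustration.

```python
import heapq
import itertools

# Hypothetical queue names and priorities (assumption: higher number = scheduled first).
QUEUE_PRIORITIES = {"default": 0.0, "routine": 1.0, "urgent": 2.0}


class DriverQueue:
    """Illustrative waiting list: orders drivers by queue priority, then by submission order."""

    def __init__(self, priorities):
        self._priorities = priorities
        self._heap = []
        # Monotonic counter used as a tie-breaker so equal priorities stay FIFO.
        self._counter = itertools.count()

    def submit(self, submission_id, queue="default"):
        priority = self._priorities[queue]
        # heapq is a min-heap, so negate the priority to pop the highest first.
        heapq.heappush(self._heap, (-priority, next(self._counter), submission_id))

    def next_driver(self):
        _, _, submission_id = heapq.heappop(self._heap)
        return submission_id

    def __len__(self):
        return len(self._heap)


def drain(driver_queue):
    """Pop every waiting driver in scheduling order."""
    return [driver_queue.next_driver() for _ in range(len(driver_queue))]


if __name__ == "__main__":
    q = DriverQueue(QUEUE_PRIORITIES)
    q.submit("driver-1", "default")
    q.submit("driver-2", "urgent")
    q.submit("driver-3", "default")
    q.submit("driver-4", "urgent")
    print(drain(q))
```

With a pure FIFO list the drivers would be launched in the order 1, 2, 3, 4. In this sketch the two drivers in the hypothetical `urgent` queue are launched first, and drivers within the same queue keep their submission order.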

As with YARN, Mesos users should be able to specify priority queues to define a workload management policy for queued drivers in the Mesos Cluster Dispatcher.

What changes were proposed in this pull request?

This commit introduces the necessary changes to manage such priority queues.

How was this patch tested?

Unit tests.