
[SPARK-31474][SQL] Consistency between dayofweek/dow in extract expression and dayofweek function #28248



cc @cloud-fan @dongjoon-hyun @HyukjinKwon thanks.

Hi, @yaooqinn. I know the discussion history on the old PRs, but could you add a link to the relevant ANSI SQL reference in the PR description, if possible? cc @gatorsmile

      case "DAY" | "D" | "DAYS" => DayOfMonth(source)
    - case "DAYOFWEEK" => DayOfWeek(source)
    - case "DOW" => Subtract(DayOfWeek(source), Literal(1))
    + case "DOW" | "DAYOFWEEK" => DayOfWeek(source)

It seems to be different from @cloud-fan's request.

@cloud-fan, could you confirm this?


Hi, @dongjoon-hyun, the ANSI SQL reference does not seem to define the meaning of …

Thanks. Then, did you see the following?

Hi, @dongjoon-hyun, thanks for adding that reference. Before starting this work, I reached out to @cloud-fan and discussed this issue offline. He agreed with the changes made in this PR, and I hope other members of the Spark community can weigh in on whether this is a proper change.

Test build #121440 has finished for PR 28248 at commit

Ya. Got it. Let's see the review comment~

Retest this please.

Test build #121448 has finished for PR 28248 at commit

Hi, @yaooqinn.

The primary target of the expression description is `DESC FUNCTION EXTENDED` in the SQL environment. The last commit produces the following output. Please check the result and revert the HTML tags.

spark-sql> DESC FUNCTION EXTENDED date_part;

Function: date_part

Class: org.apache.spark.sql.catalyst.expressions.DatePart

Usage: date_part(field, source) - Extracts a part of the date/timestamp or interval source.

Extended Usage:

Arguments:

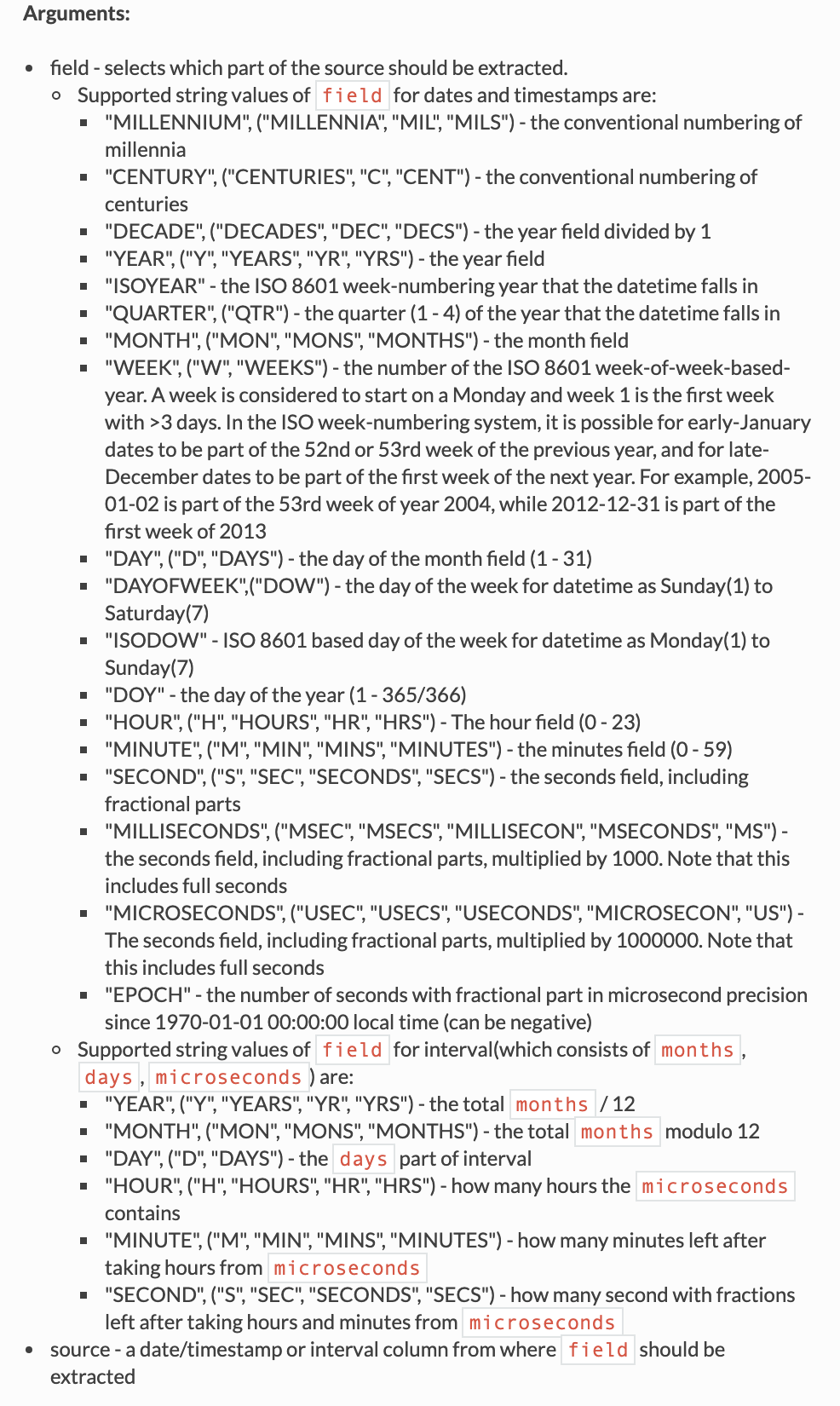

* field - selects which part of the source should be extracted.

<ul>

<b> Supported string values of `field` for dates and timestamps are: </b>

<li> "MILLENNIUM", ("MILLENNIA", "MIL", "MILS") - the conventional numbering of millennia </li>

<li> "CENTURY", ("CENTURIES", "C", "CENT") - the conventional numbering of centuries </li>

<li> "DECADE", ("DECADES", "DEC", "DECS") - the year field divided by 10 </li>

<li> "YEAR", ("Y", "YEARS", "YR", "YRS") - the year field </li>

Test build #121478 has finished for PR 28248 at commit

@dongjoon-hyun thanks for checking the … It seems that … Please check the result below to see whether you are satisfied with it.

+-- !query

+DESC FUNCTION EXTENDED date_part

+-- !query schema

+struct<function_desc:string>

+-- !query output

+Class: org.apache.spark.sql.catalyst.expressions.DatePart

+Extended Usage:

+ Arguments:

+ * field - selects which part of the source should be extracted.

+ - Supported string values of `field` for dates and timestamps are:

+ - "MILLENNIUM", ("MILLENNIA", "MIL", "MILS") - the conventional numbering of millennia

+ - "CENTURY", ("CENTURIES", "C", "CENT") - the conventional numbering of centuries

+ - "DECADE", ("DECADES", "DEC", "DECS") - the year field divided by 10

+ - "YEAR", ("Y", "YEARS", "YR", "YRS") - the year field

+ - "ISOYEAR" - the ISO 8601 week-numbering year that the datetime falls in

+ - "QUARTER", ("QTR") - the quarter (1 - 4) of the year that the datetime falls in

+ - "MONTH", ("MON", "MONS", "MONTHS") - the month field

+ - "WEEK", ("W", "WEEKS") - the number of the ISO 8601 week-of-week-based-year. A week is considered to start on a Monday and week 1 is the first week with >3 days. In the ISO week-numbering system, it is possible for early-January dates to be part of the 52nd or 53rd week of the previous year, and for late-December dates to be part of the first week of the next year. For example, 2005-01-02 is part of the 53rd week of year 2004, while 2012-12-31 is part of the first week of 2013

+ - "DAY", ("D", "DAYS") - the day of the month field (1 - 31)

+ - "DAYOFWEEK",("DOW") - the day of the week for datetime as Sunday(1) to Saturday(7)

+ - "ISODOW" - ISO 8601 based day of the week for datetime as Monday(1) to Sunday(7)

+ - "DOY" - the day of the year (1 - 365/366)

+ - "HOUR", ("H", "HOURS", "HR", "HRS") - The hour field (0 - 23)

+ - "MINUTE", ("M", "MIN", "MINS", "MINUTES") - the minutes field (0 - 59)

+ - "SECOND", ("S", "SEC", "SECONDS", "SECS") - the seconds field, including fractional parts

+ - "MILLISECONDS", ("MSEC", "MSECS", "MILLISECON", "MSECONDS", "MS") - the seconds field, including fractional parts, multiplied by 1000. Note that this includes full seconds

+ - "MICROSECONDS", ("USEC", "USECS", "USECONDS", "MICROSECON", "US") - The seconds field, including fractional parts, multiplied by 1000000. Note that this includes full seconds

+ - "EPOCH" - the number of seconds with fractional part in microsecond precision since 1970-01-01 00:00:00 local time (can be negative)

+ - Supported string values of `field` for interval(which consists of `months`, `days`, `microseconds`) are:

+ - "YEAR", ("Y", "YEARS", "YR", "YRS") - the total `months` / 12

+ - "MONTH", ("MON", "MONS", "MONTHS") - the total `months` modulo 12

+ - "DAY", ("D", "DAYS") - the `days` part of interval

+ - "HOUR", ("H", "HOURS", "HR", "HRS") - how many hours the `microseconds` contains

+ - "MINUTE", ("M", "MIN", "MINS", "MINUTES") - how many minutes left after taking hours from `microseconds`

+ - "SECOND", ("S", "SEC", "SECONDS", "SECS") - how many second with fractions left after taking hours and minutes from `microseconds`

+ * source - a date/timestamp or interval column from where `field` should be extracted

+

+ Examples:

+ > SELECT date_part('YEAR', TIMESTAMP '2019-08-12 01:00:00.123456');

+ 2019

+ > SELECT date_part('week', timestamp'2019-08-12 01:00:00.123456');

+ 33

+ > SELECT date_part('doy', DATE'2019-08-12');

+ 224

+ > SELECT date_part('SECONDS', timestamp'2019-10-01 00:00:01.000001');

+ 1.000001

+ > SELECT date_part('days', interval 1 year 10 months 5 days);

+ 5

+ > SELECT date_part('seconds', interval 5 hours 30 seconds 1 milliseconds 1 microseconds);

+ 30.001001

+

+ Note:

+ The date_part function is equivalent to the SQL-standard function `extract`

+

+ Since: 3.0.0

+

+Function: date_part

+Usage: date_part(field, source) - Extracts a part of the date/timestamp or interval source.
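For reference, the ISO 8601 week examples quoted in the `WEEK` description above can be reproduced outside Spark. The sketch below uses Python's standard `datetime` module rather than Spark's implementation, so it only illustrates the numbering rule; the helper name `iso_year_and_week` is made up for this example.

```python
from datetime import date


def iso_year_and_week(d: date) -> tuple:
    """Return (ISO week-numbering year, ISO week number) for a date."""
    iso = d.isocalendar()  # (ISO year, ISO week, ISO weekday)
    return (iso[0], iso[1])


# Early-January date that still belongs to the last week of the previous year.
print(iso_year_and_week(date(2005, 1, 2)))    # (2004, 53)
# Late-December date that already belongs to week 1 of the next year.
print(iso_year_and_week(date(2012, 12, 31)))  # (2013, 1)
# The date used in the date_part('week', ...) example.
print(iso_year_and_week(date(2019, 8, 12)))   # (2019, 33)
```

The first element of the tuple corresponds to what the description calls `ISOYEAR`, and the second to `WEEK`.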

      case "DAY" | "D" | "DAYS" => DayOfMonth(source)
    - case "DAYOFWEEK" => DayOfWeek(source)
    - case "DOW" => Subtract(DayOfWeek(source), Literal(1))
    + case "DAYOFWEEK" | "DOW" => DayOfWeek(source)

I said that the DOW behavior looks more reasonable, but unfortunately, we already have DAYOFWEEK in Spark 2.4 and we can't change that. It's more important to keep internal consistency.
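To make the effect of the change concrete, here is a minimal sketch of the field dispatch after this PR, written in plain Python rather than Catalyst. The function `extract_field` and its error handling are hypothetical and cover only three of the fields discussed here; the point is that `DOW` and `DAYOFWEEK` now share one branch.

```python
from datetime import date


def extract_field(field: str, d: date) -> int:
    """Hypothetical stand-in for the date branch of extract/date_part."""
    name = field.upper()
    if name in ("DAY", "D", "DAYS"):
        return d.day                   # day of the month, 1 - 31
    if name in ("DAYOFWEEK", "DOW"):
        # isoweekday() is Monday=1 ... Sunday=7; shift to Sunday=1 ... Saturday=7.
        return d.isoweekday() % 7 + 1
    if name == "ISODOW":
        return d.isoweekday()          # Monday=1 ... Sunday=7
    raise ValueError(f"unsupported field: {field}")


sunday = date(2009, 7, 26)
print(extract_field("dow", sunday))        # 1
print(extract_field("dayofweek", sunday))  # 1
print(extract_field("isodow", sunday))     # 7
```

Before the change, the `DOW` branch would have returned one less than `DAYOFWEEK` (0 for this Sunday).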

    - "YEAR", ("Y", "YEARS", "YR", "YRS") - the year field
    - "ISOYEAR" - the ISO 8601 week-numbering year that the datetime falls in
    - "QUARTER", ("QTR") - the quarter (1 - 4) of the year that the datetime falls in
    - "MONTH", ("MON", "MONS", "MONTHS") - the month field

the month field (1 - 12)

    - "EPOCH" - the number of seconds with fractional part in microsecond precision since 1970-01-01 00:00:00 local time (can be negative)
    - Supported string values of `field` for interval(which consists of `months`, `days`, `microseconds`) are:
    - "YEAR", ("Y", "YEARS", "YR", "YRS") - the total `months` / 12
    - "MONTH", ("MON", "MONS", "MONTHS") - the total `months` modulo 12

the total `months` % 12

to be consistent with

the total `months` / 12
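As a worked example of the two interval descriptions being compared, the sketch below splits the `months` part of an interval into its `YEAR` and `MONTH` fields. It is plain Python, not Spark code; the helper name `interval_year_month` is hypothetical, and it assumes a non-negative month count (Python's `//` and `%` round differently from JVM integer arithmetic for negative values).

```python
def interval_year_month(total_months: int) -> tuple:
    """Split an interval's total months into (YEAR, MONTH) fields.

    Assumes total_months >= 0.
    """
    years = total_months // 12   # "the total `months` / 12"
    months = total_months % 12   # "the total `months` % 12"
    return (years, months)


# interval 1 year 10 months is stored as 22 months in total.
print(interval_year_month(1 * 12 + 10))  # (1, 10)
```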

    30.001001
    """,
    note = """
    The _FUNC_ function is equivalent to the SQL-standard function `extract`

EXTRACT(field FROM source)

    30.001001
    """,
    note = """
    The _FUNC_ function is equivalent to `date_part`.

date_part(field, source)


BTW is EXTRACT more widely used? If yes then we should put the document in Extract.


sgtm

Test build #121494 has finished for PR 28248 at commit

Test build #121507 has finished for PR 28248 at commit

retest this please

Test build #121512 has finished for PR 28248 at commit

Test build #121565 has finished for PR 28248 at commit

retest this please

Test build #121573 has finished for PR 28248 at commit

thanks, merging to master/3.0!

…ession and dayofweek function

### What changes were proposed in this pull request?

```sql

spark-sql> SELECT extract(dayofweek from '2009-07-26');

1

spark-sql> SELECT extract(dow from '2009-07-26');

0

spark-sql> SELECT extract(isodow from '2009-07-26');

7

spark-sql> SELECT dayofweek('2009-07-26');

1

spark-sql> SELECT weekday('2009-07-26');

6

```

Currently, there are 4 types of day-of-week range:

1. the `dayofweek` function (2.3.0) and extracting `dayofweek` (2.4.0) return Sunday(1) to Saturday(7)

2. extracting `dow` (3.0.0) returns Sunday(0) to Saturday(6)

3. extracting `isodow` (3.0.0) returns Monday(1) to Sunday(7)

4. the `weekday` function (2.4.0) returns Monday(0) to Sunday(6)
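The four ranges differ only in which day comes first and whether counting starts at 0 or 1. As a rough illustration outside Spark, the sketch below derives all four from Python's `date.isoweekday()`; the function name `day_of_week_variants` is hypothetical, and the `dow` entry shows the pre-PR (0-based) meaning.

```python
from datetime import date


def day_of_week_variants(d: date) -> dict:
    """Compute the four day-of-week numberings listed above for one date."""
    iso = d.isoweekday()  # Monday=1 ... Sunday=7
    return {
        "dayofweek": iso % 7 + 1,  # Sunday=1 ... Saturday=7
        "dow": iso % 7,            # Sunday=0 ... Saturday=6 (before this PR)
        "isodow": iso,             # Monday=1 ... Sunday=7
        "weekday": iso - 1,        # Monday=0 ... Sunday=6
    }


# 2009-07-26 is a Sunday, matching the spark-sql session above.
print(day_of_week_variants(date(2009, 7, 26)))
# {'dayofweek': 1, 'dow': 0, 'isodow': 7, 'weekday': 6}
```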

Actually, extracting `dayofweek` and `dow` are both derived from PostgreSQL but have different meanings.

https://issues.apache.org/jira/browse/SPARK-23903

https://issues.apache.org/jira/browse/SPARK-28623

In this PR, we make extracting `dow` behave the same as extracting `dayofweek` and the `dayofweek` function, for historical reasons and to avoid breaking existing behavior.

Also, add more documentation to the extract function to make the extract fields easier to understand.

### Why are the changes needed?

To ensure consistency.

### Does this PR introduce any user-facing change?

Yes. The docs are updated, and extracting `dow` now returns the same result as `dayofweek`.

### How was this patch tested?

1. modified unit tests

2. local SQL doc verification

#### before

#### after

Closes #28248 from yaooqinn/SPARK-31474.

Authored-by: Kent Yao <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

(cherry picked from commit 1985437)

Signed-off-by: Wenchen Fan <[email protected]>

…name in the note field of expression info

### What changes were proposed in this pull request?

`_FUNC_` has been used in `note()` of `ExpressionDescription` since #28248, and more cases may follow, so we should replace it with the function name in the documentation.

### Why are the changes needed?

doc fix

### Does this PR introduce any user-facing change?

no

### How was this patch tested?

pass Jenkins, and verify locally with Jekyll serve

Closes #28305 from yaooqinn/SPARK-31474-F.

Authored-by: Kent Yao <[email protected]>

Signed-off-by: HyukjinKwon <[email protected]>

(cherry picked from commit 3b57921)

Signed-off-by: HyukjinKwon <[email protected]>
