-

Couldn't load subscription status.

- Fork 28.9k

[SPARK-37535][CORE] Update default spark.io.compression.codec to zstd #34798

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

cc @dongjoon-hyun FYI |

|

Kubernetes integration test starting |

|

Kubernetes integration test status failure |

|

Test build #145898 has finished for PR 34798 at commit

|

|

Just for my context - is this default change to match other default changes we already made? |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

- Could you keep the original JIRA ID instead of filing a new one, @wangyum ?

- #32286 [SPARK-35181][CORE] Use zstd for spark.io.compression.codec by default

- Also, we need a test case if this claims for a bug fix.

|

Thank you all. The root cause may be a hardware issue. |

|

It turns out that |

|

Thank you for the info, @wangyum . |

|

@wangyum @dongjoon-hyun Why root cause may be a hardware issue, could you provide more information about this. Finally, how to fix |

|

@sleep1661 You can get more information at #32385 (comment), and #32385 (comment) may be a direction to fix it |

What changes were proposed in this pull request?

This pr update default

spark.io.compression.codecto zstd.Why are the changes needed?

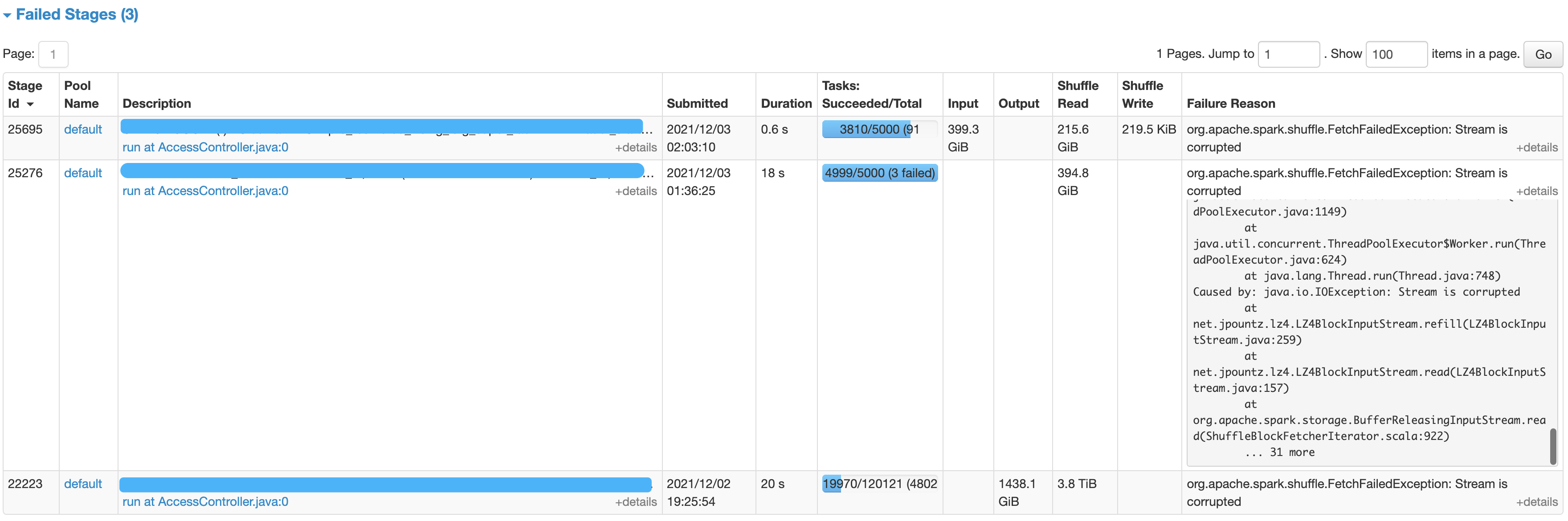

To workaround

Stream is corruptedissue:Please see SPARK-18105 for more details.

Does this PR introduce any user-facing change?

No.

How was this patch tested?

Existing unit tests.