
[SPARK-40705][SQL] Handle case of using mutable array when converting Row to JSON for Scala 2.13 #38154



Can one of the admins verify this patch?


StructField("col1", StringType) ::
StructField("col2", StringType) ::
StructField("col3", IntegerType) :: Nil)
StructField("col2", StringType) ::


Scalafmt reformatted the file. Should I revert the formatting-only changes?


Yeah, revert all the formatting-only changes please.


I pushed a new commit that reverts those changes.

srowen

left a comment


Looks OK otherwise

… Row to JSON for Scala 2.13

### What changes were proposed in this pull request?

I encountered an issue using Spark while reading JSON files based on a schema it throws every time an exception related to conversion of types.

>Note: This issue can be reproduced only with Scala `2.13`, I'm not having this issue with `2.12`

````
Failed to convert value ArraySeq(1, 2, 3) (class of class scala.collection.mutable.ArraySeq$ofRef}) with the type of ArrayType(StringType,true) to JSON.
java.lang.IllegalArgumentException: Failed to convert value ArraySeq(1, 2, 3) (class of class scala.collection.mutable.ArraySeq$ofRef}) with the type of ArrayType(StringType,true) to JSON.
````

If I add ArraySeq to the matching cases, the test that I added passed successfully

With the current code source, the test fails and we have this following error

### Why are the changes needed?

If the person is using Scala 2.13, they can't parse an array. Which means they need to fallback to 2.12 to keep the project functioning

### How was this patch tested?

I added a sample unit test for the case, but I can add more if you want to.

Closes #38154 from Amraneze/fix/spark_40705.

Authored-by: Ait Zeouay Amrane <[email protected]>
Signed-off-by: Sean Owen <[email protected]>
(cherry picked from commit 9a97f8c)
Signed-off-by: Sean Owen <[email protected]>

Merged to master/3.3

What changes were proposed in this pull request?

I ran into an issue with Spark when reading JSON files against a schema: every time, it throws an exception related to type conversion. The issue can be reproduced only with Scala 2.13; I don't see it with 2.12.

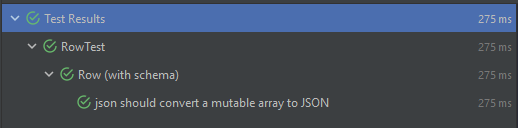

If I add ArraySeq to the match cases, the test that I added passes.

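With the current source code, the test fails with the following error: `java.lang.IllegalArgumentException: Failed to convert value ArraySeq(1, 2, 3) (class of class scala.collection.mutable.ArraySeq$ofRef}) with the type of ArrayType(StringType,true) to JSON.`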
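The mismatch can be reproduced without Spark. The following is a minimal standalone sketch, not the code in this patch: `describeBefore` and `describeAfter` are hypothetical stand-ins for a converter's pattern match. It relies on the fact that unqualified `Seq` means `scala.collection.immutable.Seq` in Scala 2.13, so a `mutable.ArraySeq` falls through a `case s: Seq[_]` branch unless it gets a case of its own.

```scala
import scala.collection.mutable
import scala.util.Try

object ArraySeqMatchSketch {
  // Hypothetical converter without a case for mutable.ArraySeq.
  // On Scala 2.13, `Seq` is immutable.Seq, so a mutable.ArraySeq
  // reaches the fallback branch and an exception is thrown.
  def describeBefore(value: Any): String = value match {
    case a: Array[_] => a.mkString("[", ",", "]")
    case s: Seq[_]   => s.mkString("[", ",", "]")
    case other =>
      throw new IllegalArgumentException(s"Failed to convert value $other")
  }

  // Same converter with an explicit case for mutable.ArraySeq,
  // mirroring the idea of "adding ArraySeq to the match cases".
  def describeAfter(value: Any): String = value match {
    case a: Array[_]            => a.mkString("[", ",", "]")
    case a: mutable.ArraySeq[_] => a.mkString("[", ",", "]")
    case s: Seq[_]              => s.mkString("[", ",", "]")
    case other =>
      throw new IllegalArgumentException(s"Failed to convert value $other")
  }

  def main(args: Array[String]): Unit = {
    val values = mutable.ArraySeq(1, 2, 3)
    // Whether the first call succeeds depends on the Scala version in use.
    println(s"before: ${Try(describeBefore(values))}")
    println(s"after:  ${Try(describeAfter(values))}")
  }
}
```

On Scala 2.12, `Seq` aliases `scala.collection.Seq`, so both functions accept the mutable `ArraySeq`, which is consistent with the report that only 2.13 is affected.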

Why are the changes needed?

Users on Scala 2.13 can't parse an array in this case, which means they have to fall back to Scala 2.12 to keep their project working.

How was this patch tested?

I added a sample unit test for the case, but I can add more if you want to.