RF-DETR is a real-time, transformer-based object detection model architecture developed by Roboflow and released under the Apache 2.0 license.

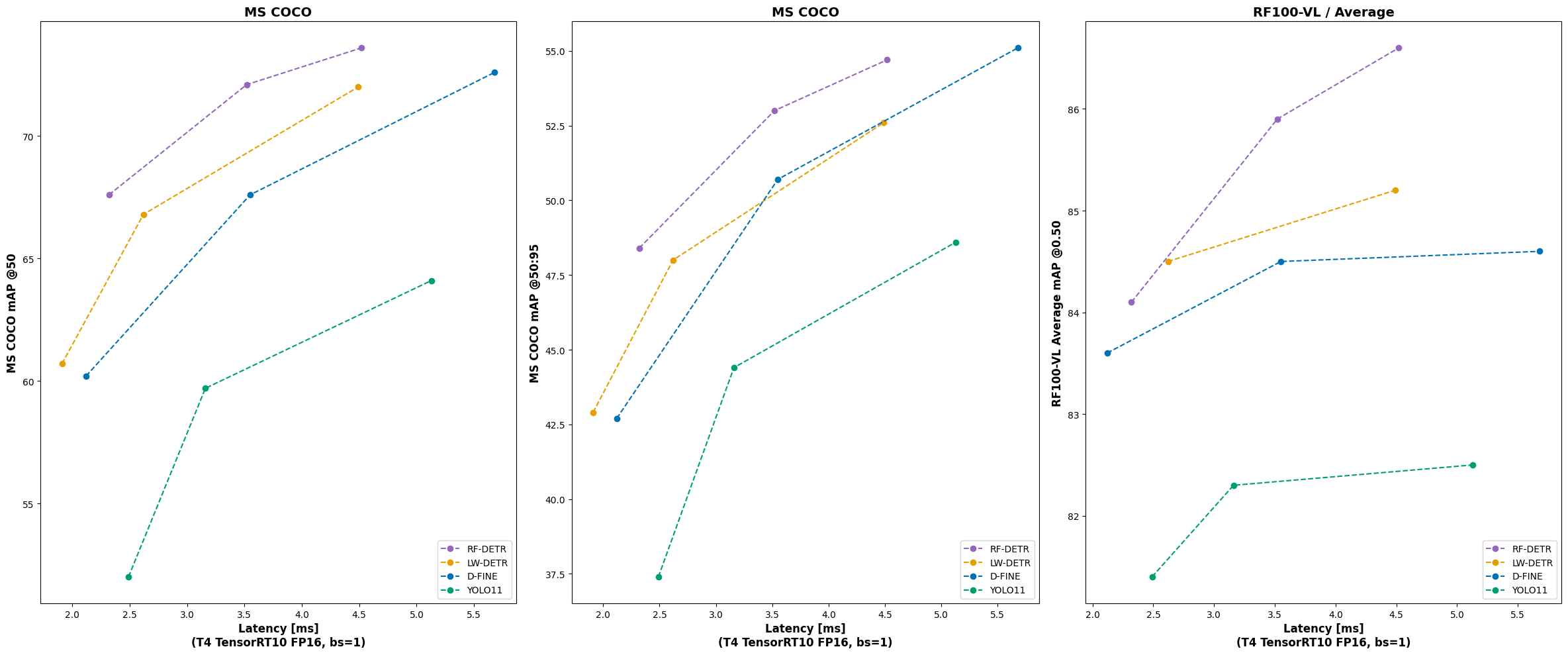

RF-DETR is the first real-time model to exceed 60 AP on the Microsoft COCO benchmark, while also delivering competitive performance at base sizes. It also achieves state-of-the-art performance on RF100-VL, an object detection benchmark that measures how well a model adapts to real-world domains. Compared with current real-time object detection models, RF-DETR is the fastest and most accurate for its size.

RF-DETR is small enough to run on the edge using Inference, making it an ideal model for deployments that need both strong accuracy and real-time performance.

Read the documentation to get started with training.

- 2025/07/23: We release three new checkpoints for RF-DETR: Nano, Small, and Medium.
  - RF-DETR Base is now deprecated. We recommend using RF-DETR Medium, which offers substantially better accuracy at comparable latency.
- 2025/03/20: We release the RF-DETR real-time object detection model. Code and checkpoints for RF-DETR Large and RF-DETR Base are available.
- 2025/04/03: We add support for early stopping, gradient checkpointing, metrics saving, training resumption, and TensorBoard and W&B logging.
- 2025/05/16: We release an `optimize_for_inference` method that speeds up native PyTorch inference by up to 2x, depending on the platform.

RF-DETR achieves state-of-the-art performance on both the Microsoft COCO and the RF100-VL benchmarks.

The table below compares RF-DETR Nano, Small, and Medium with other real-time detectors of similar size:

| Family | Size | COCO mAP50 | COCO mAP50:95 | RF100-VL mAP50 | RF100-VL mAP50:95 | Latency (ms) |
|---|---|---|---|---|---|---|
| RF-DETR | Nano | 67.6 | 48.4 | 84.1 | 57.1 | 2.32 |
| RF-DETR | Small | 72.1 | 53.0 | 85.9 | 59.6 | 3.52 |
| RF-DETR | Medium | 73.6 | 54.7 | 86.6 | 60.6 | 4.52 |
| YOLO11 | n | 52.0 | 37.4 | 81.4 | 55.3 | 2.49 |
| YOLO11 | s | 59.7 | 44.4 | 82.3 | 56.2 | 3.16 |
| YOLO11 | m | 64.1 | 48.6 | 82.5 | 56.5 | 5.13 |
| YOLO11 | l | 65.3 | 50.2 | x | x | 6.65 |
| YOLO11 | x | 66.5 | 51.2 | x | x | 11.92 |
| LW-DETR | Tiny | 60.7 | 42.9 | x | x | 1.91 |
| LW-DETR | Small | 66.8 | 48.0 | 84.5 | 58.0 | 2.62 |
| LW-DETR | Medium | 72.0 | 52.6 | 85.2 | 59.4 | 4.49 |
| D-FINE | Nano | 60.2 | 42.7 | 83.6 | 57.7 | 2.12 |
| D-FINE | Small | 67.6 | 50.7 | 84.5 | 59.9 | 3.55 |
| D-FINE | Medium | 72.6 | 55.1 | 84.6 | 60.2 | 5.68 |

See our benchmark notes in the RF-DETR documentation. An `x` marks a result we have not benchmarked.

We are actively working on RF-DETR Large and X-Large models, using the same techniques that give RF-DETR Medium its strong accuracy. This is why RF-DETR Large and X-Large are not yet reported on our Pareto charts, and why we haven't benchmarked other models at similar sizes. Check back in the next few weeks for the launch of the new RF-DETR Large and X-Large models.
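Pareto charts plot accuracy against latency and highlight the models that no other model beats on both axes at once. As a rough illustration, the sketch below computes that frontier from the COCO mAP50:95 and latency columns of the table above. It is our own minimal sketch, not the script behind the official charts, and it ignores the RF100-VL columns; the `Result` type and `pareto_frontier` function are hypothetical names.

```python
from typing import NamedTuple


class Result(NamedTuple):
    family: str
    size: str
    coco_map50_95: float
    latency_ms: float


# COCO mAP50:95 and latency values copied from the benchmark table.
RESULTS = [
    Result("RF-DETR", "Nano", 48.4, 2.32),
    Result("RF-DETR", "Small", 53.0, 3.52),
    Result("RF-DETR", "Medium", 54.7, 4.52),
    Result("YOLO11", "n", 37.4, 2.49),
    Result("YOLO11", "s", 44.4, 3.16),
    Result("YOLO11", "m", 48.6, 5.13),
    Result("YOLO11", "l", 50.2, 6.65),
    Result("YOLO11", "x", 51.2, 11.92),
    Result("LW-DETR", "Tiny", 42.9, 1.91),
    Result("LW-DETR", "Small", 48.0, 2.62),
    Result("LW-DETR", "Medium", 52.6, 4.49),
    Result("D-FINE", "Nano", 42.7, 2.12),
    Result("D-FINE", "Small", 50.7, 3.55),
    Result("D-FINE", "Medium", 55.1, 5.68),
]


def pareto_frontier(results: list[Result]) -> list[Result]:
    """Return the results that no other result beats on both latency and mAP."""
    # Sort by latency ascending; break ties by accuracy descending so the
    # most accurate model at a given latency is visited first.
    ordered = sorted(results, key=lambda r: (r.latency_ms, -r.coco_map50_95))
    frontier = []
    best_map = float("-inf")
    for result in ordered:
        # Keep a model only if it is more accurate than every faster model seen so far.
        if result.coco_map50_95 > best_map:
            frontier.append(result)
            best_map = result.coco_map50_95
    return frontier


for r in pareto_frontier(RESULTS):
    print(f"{r.family:8} {r.size:7} {r.coco_map50_95:5.1f} AP  {r.latency_ms:5.2f} ms")
```

With the table's numbers, this keeps LW-DETR Tiny, RF-DETR Nano, Small, and Medium, and D-FINE Medium; every other model is both slower and less accurate than at least one model on that list. Sorting once by latency makes the scan linear after the sort, which is the usual way to extract a two-objective frontier.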

To install RF-DETR, install the rfdetr package in a Python>=3.9 environment with pip:

```bash
pip install rfdetr
```

Install from source

By installing RF-DETR from source, you can explore the most recent features and enhancements that have not yet been officially released. Please note that these updates are still in development and may not be as stable as the latest published release.

```bash
pip install git+https://github.com/roboflow/rf-detr.git
```

The easiest path to deployment is using Roboflow's Inference package.

The code below lets you run rfdetr-base on an image:

```python
from io import BytesIO

import requests
import supervision as sv
from inference import get_model
from PIL import Image

url = "https://media.roboflow.com/dog.jpeg"
image = Image.open(BytesIO(requests.get(url).content))

model = get_model("rfdetr-base")

# Run inference, filtering out low-confidence predictions (threshold 0.5).
predictions = model.infer(image, confidence=0.5)[0]

# Convert the Inference response into a supervision Detections object.
detections = sv.Detections.from_inference(predictions)
labels = [prediction.class_name for prediction in predictions.predictions]

# Draw boxes and class labels on a copy of the input image.
annotated_image = image.copy()
annotated_image = sv.BoxAnnotator(color=sv.ColorPalette.ROBOFLOW).annotate(annotated_image, detections)
annotated_image = sv.LabelAnnotator(color=sv.ColorPalette.ROBOFLOW).annotate(annotated_image, detections, labels)

sv.plot_image(annotated_image)
```

You can also use the `.predict()` method to perform inference during local development. `.predict()` accepts file paths, PIL images, NumPy arrays, and torch tensors. Inputs must use RGB channel order. `torch.Tensor` inputs must have shape `(3, H, W)` with values normalized to the `[0, 1]` range. If you don't plan to change the image size or batch size at runtime, you can also call `.optimize_for_inference()` for up to a 2x end-to-end speedup, depending on the platform.
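Images loaded with OpenCV, or decoded into raw `uint8` arrays, usually need converting before they meet the tensor requirements above. The sketch below shows one way to turn an `H x W x 3` `uint8` array into an RGB `(3, H, W)` float tensor, scaling by 255 as torchvision's `to_tensor` does. `to_rfdetr_tensor` is a hypothetical helper, not part of the `rfdetr` package.

```python
import numpy as np
import torch


def to_rfdetr_tensor(image: np.ndarray, bgr: bool = False) -> torch.Tensor:
    """Convert an H x W x 3 uint8 image into a (3, H, W) float32 RGB tensor in [0, 1]."""
    if image.ndim != 3 or image.shape[2] != 3:
        raise ValueError("expected an H x W x 3 image")
    if image.dtype != np.uint8:
        raise ValueError("expected uint8 pixel values")
    if bgr:
        # OpenCV returns BGR; reversing the channel axis gives RGB.
        image = image[..., ::-1]
    # torch.from_numpy cannot wrap arrays with negative strides, so copy first.
    array = np.ascontiguousarray(image)
    # HWC -> CHW, then scale 0..255 to 0.0..1.0.
    return torch.from_numpy(array).permute(2, 0, 1).float() / 255.0
```

For example, with OpenCV (an assumption; any `uint8` array works) you could call `model.predict(to_rfdetr_tensor(cv2.imread("dog.jpg"), bgr=True), threshold=0.5)`. If you already have an RGB NumPy array, passing it to `.predict()` directly is simpler, since NumPy inputs are accepted as-is.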

```python
import io

import requests
import supervision as sv
from PIL import Image
from rfdetr import RFDETRBase
from rfdetr.util.coco_classes import COCO_CLASSES

model = RFDETRBase()

# Optimizes the model in place; keep the image size and batch size fixed afterwards.
model.optimize_for_inference()

url = "https://media.roboflow.com/notebooks/examples/dog-2.jpeg"
image = Image.open(io.BytesIO(requests.get(url).content))

# Filter out low-confidence detections (threshold 0.5).
detections = model.predict(image, threshold=0.5)

# Build "<class name> <confidence>" labels from the COCO class IDs.
labels = [
    f"{COCO_CLASSES[class_id]} {confidence:.2f}"
    for class_id, confidence
    in zip(detections.class_id, detections.confidence)
]

annotated_image = image.copy()
annotated_image = sv.BoxAnnotator().annotate(annotated_image, detections)
annotated_image = sv.LabelAnnotator().annotate(annotated_image, detections, labels)

sv.plot_image(annotated_image)
```

You can fine-tune an RF-DETR Nano, Small, Medium, or Base model on a custom dataset using the `rfdetr` Python package.

Read our training tutorial to get started.
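Before fine-tuning, it can help to sanity-check the dataset directory. The sketch below assumes a COCO-format dataset laid out as `train/`, `valid/`, and `test/` folders that each contain an `_annotations.coco.json` file, which is the layout of a Roboflow COCO export; treat that layout as an assumption and confirm it against the training tutorial. `check_coco_dataset` is a hypothetical helper, not part of `rfdetr`.

```python
import json
from pathlib import Path

# Assumed layout: one folder per split, each with its images and a COCO annotation file.
SPLITS = ("train", "valid", "test")
ANNOTATION_FILE = "_annotations.coco.json"


def check_coco_dataset(dataset_dir: str) -> dict[str, int]:
    """Return the number of images listed per split, or raise if the layout looks wrong."""
    root = Path(dataset_dir)
    counts: dict[str, int] = {}
    for split in SPLITS:
        annotation_path = root / split / ANNOTATION_FILE
        if not annotation_path.is_file():
            raise FileNotFoundError(f"missing {annotation_path}")
        with annotation_path.open() as f:
            coco = json.load(f)
        # A COCO detection file defines images, annotations, and categories.
        for key in ("images", "annotations", "categories"):
            if key not in coco:
                raise ValueError(f"{annotation_path} has no '{key}' field")
        counts[split] = len(coco["images"])
    return counts
```

For example, `check_coco_dataset("path/to/dataset")` returns the image count for each split; the same directory is what you would then point the training code at (see the training tutorial for the exact parameters).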

Visit our documentation website to learn more about how to use RF-DETR.

Both the code and the weights pretrained on the COCO dataset are released under the Apache 2.0 license.

Our work is built upon LW-DETR, DINOv2, and Deformable DETR. Thanks to their authors for their excellent work!

If you find our work helpful for your research, please consider citing the following BibTeX entry.

```bibtex
@software{rf-detr,
  author = {Robinson, Isaac and Robicheaux, Peter and Popov, Matvei},
  license = {Apache-2.0},
  title = {RF-DETR},
  howpublished = {\url{https://github.com/roboflow/rf-detr}},
  year = {2025},
  note = {SOTA Real-Time Object Detection Model}
}
```

We welcome and appreciate all contributions! If you notice any issues or bugs, have questions, or would like to suggest new features, please open an issue or pull request. By sharing your ideas and improvements, you help make RF-DETR better for everyone.