I want to fully grasp 3D Gaussian Splatting. The original code was tough to follow without a computer graphics or CUDA background, especially the tile-based rendering. So I'm implementing it from scratch with NumPy and PyTorch, starting with rendering. Simplicity is prioritized over performance.

# tokei camera.py gaussian.py render.py utils.py

├── 📄 camera.py # Camera model and view transformation utilities

├── 📄 gaussian.py # 3D Gaussian representation and operations

├── 📄 render.py # Rendering pipeline and projection functions

└── 📄 utils.py # Helper functions and common utilities

===============================================================================

Language Files Lines Code Comments Blanks

===============================================================================

Python 4 662 529 44 89

===============================================================================

- Clone the repository:

git clone https://github.com/suous/learn-gaussian-splatting.git

cd learn-gaussian-splatting

- Install the required dependencies:

uv sync

- Activate the environment:

source .venv/bin/activate

Download the COLMAP and PLY data from Release v1.0.0:

wget -qO- https://github.com/suous/learn-gaussian-splatting/releases/download/v1.0.0/data.tgz | tar xz -C .

This will create a data directory with the following structure:

data

├── colmap

│ ├── images

│ │ ├── 000000.png

│ │ ├── ..........

│ │ └── 000024.png

│ └── sparse

│ └── 0

│ ├── cameras.bin

│ ├── images.bin

│ ├── points3D.bin

│ └── points3D.ply

└── point_cloud.ply

# python gaussian.py

==================================================

Gaussian Splatting Statistics

==================================================

🎯 Positions : (37942, 3)

🔄 Rotations : (37942, 4)

📏 Scalings : (37942, 3)

💫 Opacities : (37942, 1)

🎨 Features : (37942, 3, 16)

📊 Covariances: (37942, 3, 3)

--------------------------------------------------

Total Gaussians: 37,942

SH Degree : 3

==================================================

- Render an image:

python main.py --image_number 0

This will display the original image and the rendered image using matplotlib.

- Generate an animation:

python main.py --generate_animation

ffmpeg -framerate 8 -pattern_type glob -i "*.png" -vf "fps=10,scale=640:-1,split[s0][s1];[s0]palettegen[p];[s1][p]paletteuse" -loop 0 animation.gif

3D Gaussian Splatting (3DGS) is a method for representing and rendering 3D scenes, introduced in the paper "3D Gaussian Splatting for Real-Time Radiance Field Rendering" by Kerbl et al. (2023). It has gained significant attention as an alternative to Neural Radiance Fields (NeRFs) because it renders in real time while keeping high visual quality.

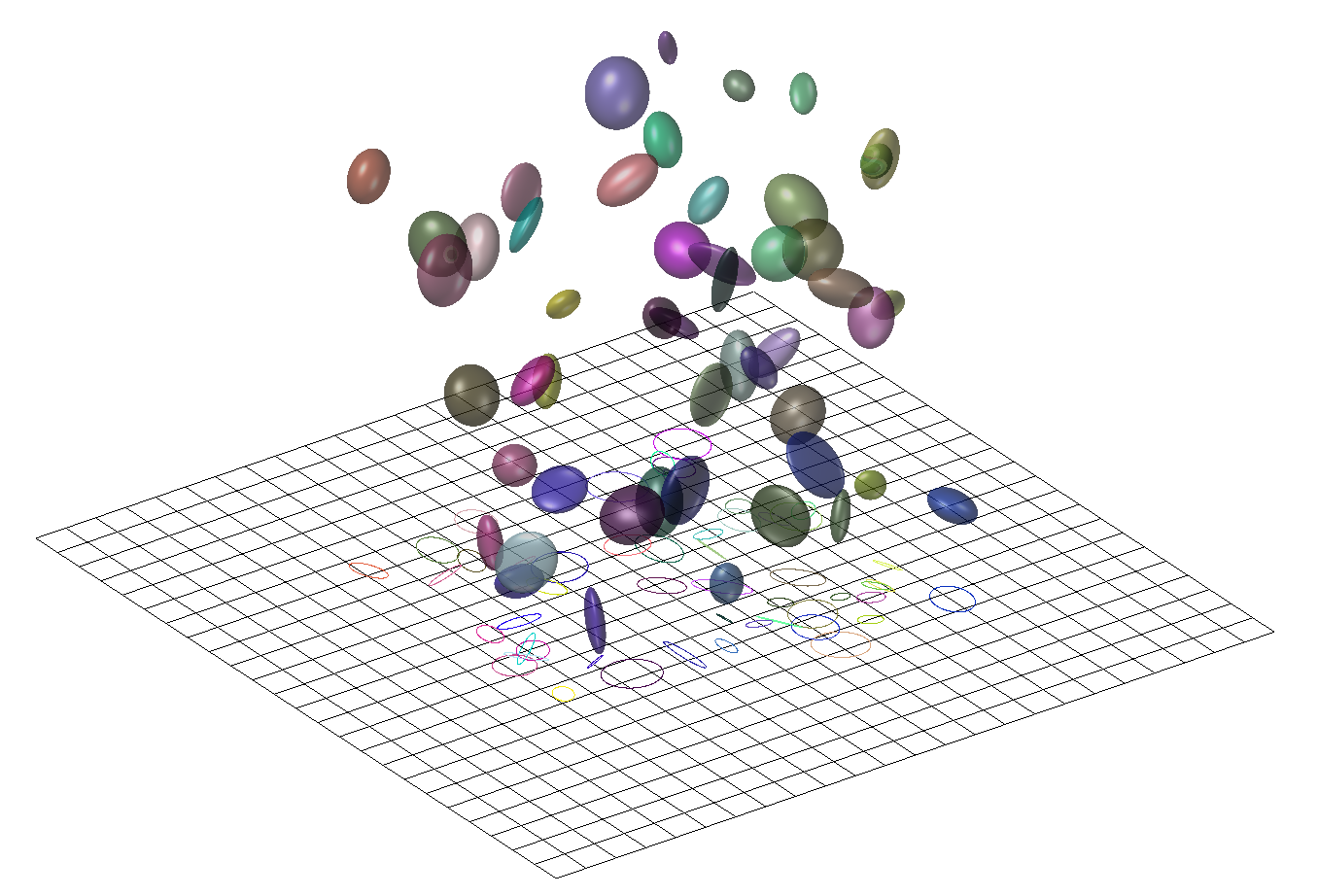

At its core, 3DGS represents a 3D scene as a collection of 3D Gaussians, often millions of them for a detailed scene. Each Gaussian is a point in space with parameters that define its shape and appearance.

image from https://3dgstutorial.github.io/3dv_part2.pdf

Each 3D Gaussian is defined by the following parameters:

- Position (Mean $\mu$): A 3D vector representing the center of the Gaussian: $[x, y, z]$.
- Covariance ($\Sigma$): A $3\times3$ matrix that defines the shape, size, and orientation of the Gaussian. To ensure that the covariance matrix remains physically meaningful (positive semi-definite) during optimization, it is decomposed into:
    - Scaling ($S$): A 3D vector representing the scale of the Gaussian along its local axes: $[S_x, S_y, S_z]$.
    - Rotation ($R$): A quaternion representing the orientation of the Gaussian in space: $q=[r, x, y, z]$.
- Color ($c$): The color of the Gaussian, which can be represented as RGB values or, more commonly, as coefficients of Spherical Harmonics (SH) to model view-dependent effects like reflections.
- Opacity ($\alpha$): A value between 0 and 1 that controls the transparency of the Gaussian.
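The decomposition above can be sketched in NumPy. This is a minimal illustration, not the repository's code: it assumes the standard construction $\Sigma = R S S^T R^T$ from the 3DGS paper, a quaternion in $[r, x, y, z]$ order, and scale values that are already in linear space (the helper names `quaternion_to_rotation` and `build_covariance` are made up for this example).

```python
import numpy as np

def quaternion_to_rotation(q):
    """Convert a quaternion [r, x, y, z] into a 3x3 rotation matrix."""
    q = np.asarray(q, dtype=np.float64)
    r, x, y, z = q / np.linalg.norm(q)  # normalize so the result is a valid rotation
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - r * z), 2 * (x * z + r * y)],
        [2 * (x * y + r * z), 1 - 2 * (x * x + z * z), 2 * (y * z - r * x)],
        [2 * (x * z - r * y), 2 * (y * z + r * x), 1 - 2 * (x * x + y * y)],
    ])

def build_covariance(scale, q):
    """Build Sigma = R S S^T R^T from a scale vector and a quaternion."""
    R = quaternion_to_rotation(q)
    S = np.diag(np.asarray(scale, dtype=np.float64))
    M = R @ S
    return M @ M.T  # symmetric and positive semi-definite by construction

# Identity rotation: the covariance is just the squared scales on the diagonal.
cov_identity = build_covariance([0.5, 1.0, 2.0], [1.0, 0.0, 0.0, 0.0])

# 90-degree rotation about z: the x and y variances swap places.
half = np.sqrt(0.5)
cov_rotated = build_covariance([0.5, 1.0, 2.0], [half, 0.0, 0.0, half])
```

Because $\Sigma$ is always formed as $M M^T$, any values of the scale and quaternion parameters yield a valid covariance, which is why the optimizer works on $S$ and $q$ instead of on $\Sigma$ directly.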

The rendering process in 3DGS is a form of rasterization. For a given camera viewpoint, the 3D Gaussians are projected onto the 2D image plane. These 2D projections are then sorted by depth and blended together, front-to-back, to produce the final color for each pixel.
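The depth-sorting step can be sketched as follows. This is a simplified illustration under stated assumptions: `R_wc` and `t_wc` are a hypothetical world-to-camera rotation and translation, the camera looks along $+z$, and the `near` cutoff is an arbitrary value chosen for the example.

```python
import numpy as np

def sort_by_depth(means_world, R_wc, t_wc, near=0.01):
    """Return camera-space means and the indices of visible Gaussians, nearest first."""
    means_cam = means_world @ R_wc.T + t_wc  # world -> camera coordinates
    depths = means_cam[:, 2]                 # assumes +z points into the scene
    order = np.argsort(depths)               # front-to-back ordering
    order = order[depths[order] > near]      # drop Gaussians behind the camera
    return means_cam, order

means = np.array([
    [0.0, 0.0, 5.0],
    [0.0, 0.0, 2.0],
    [1.0, 0.0, 3.0],
    [0.0, 0.0, -1.0],  # behind the camera, should be culled
])
means_cam, order = sort_by_depth(means, np.eye(3), np.zeros(3))
```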

The influence of a 3D Gaussian at a point $x$ is given by:

$$G(x) = \exp\left(-\frac{1}{2}(x - \mu)^T \Sigma^{-1} (x - \mu)\right)$$

Where:

- $\mu$ is the mean (center) of the Gaussian.
- $\Sigma$ is the covariance matrix.
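As a small worked example, the influence can be evaluated directly in NumPy. This sketch assumes the unnormalized form $\exp\left(-\frac{1}{2}(x-\mu)^T\Sigma^{-1}(x-\mu)\right)$ used in the 3DGS paper; `gaussian_influence` is a name invented for the illustration.

```python
import numpy as np

def gaussian_influence(x, mu, cov):
    """Evaluate the unnormalized Gaussian exp(-0.5 * d^T cov^-1 d) with d = x - mu."""
    d = np.asarray(x, dtype=np.float64) - np.asarray(mu, dtype=np.float64)
    # Solve cov @ y = d instead of forming the inverse explicitly.
    return float(np.exp(-0.5 * d @ np.linalg.solve(cov, d)))

mu = np.array([1.0, 2.0, 3.0])
cov = np.eye(3)

at_center = gaussian_influence(mu, mu, cov)                 # peak value at the mean
one_unit_away = gaussian_influence(mu + [1, 0, 0], mu, cov)  # one standard deviation away
```

The value is 1 at the center and decays with the Mahalanobis distance from it, so a Gaussian with a larger covariance influences a larger region.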

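During the 3D-to-2D projection process, the Jacobian matrix $J$ of the projection gives a local affine approximation of the perspective transformation. With $W$ denoting the rotation part of the world-to-camera transformation, the projected 2D covariance is:

$$\Sigma^{\prime} = J W \Sigma W^T J^T$$

3D Gaussian Splatting (3DGS) specifically uses perspective projection. According to the perspective projection formula, a point $(x, y, z)$ in camera coordinates maps to pixel coordinates $(u, v)$, where $f_x, f_y$ are the focal lengths and $c_x, c_y$ is the principal point:

$$u = f_x \frac{x}{z} + c_x, \quad v = f_y \frac{y}{z} + c_y$$

The relationship between $(u, v)$ and $(x, y, z)$ is nonlinear because of the division by $z$, so it is linearized around the mean of each Gaussian.

The Jacobian matrix $J$ of this mapping, evaluated at the mean in camera coordinates, is:

$$J = \begin{bmatrix} \dfrac{f_x}{z} & 0 & -\dfrac{f_x x}{z^2} \\ 0 & \dfrac{f_y}{z} & -\dfrac{f_y y}{z^2} \end{bmatrix}$$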
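A minimal NumPy sketch of this projection step follows. It assumes the formulation from the 3DGS paper, $\Sigma^{\prime} = J W \Sigma W^T J^T$, where $W$ is the rotation part of the world-to-camera transform and $J$ is the $2\times3$ Jacobian of the pinhole projection $u = f_x x / z + c_x$, $v = f_y y / z + c_y$. The function names and the focal lengths `fx`, `fy` are illustrative, not taken from the repository.

```python
import numpy as np

def projection_jacobian(mean_cam, fx, fy):
    """Jacobian of the perspective projection, evaluated at a camera-space mean."""
    x, y, z = mean_cam
    return np.array([
        [fx / z, 0.0, -fx * x / z**2],
        [0.0, fy / z, -fy * y / z**2],
    ])

def project_covariance(cov_world, mean_cam, W, fx, fy):
    """Project a 3x3 world-space covariance to a 2x2 image-space covariance."""
    J = projection_jacobian(mean_cam, fx, fy)
    T = J @ W                     # combined linear map: world -> camera -> image
    return T @ cov_world @ T.T    # Sigma' = J W Sigma W^T J^T

# A unit sphere on the optical axis, 2 units in front of the camera.
cov2d = project_covariance(
    cov_world=np.eye(3),
    mean_cam=np.array([0.0, 0.0, 2.0]),
    W=np.eye(3),
    fx=100.0,
    fy=100.0,
)
```

In this example the Gaussian sits on the optical axis, so $J$ reduces to a uniform scaling by $f/z = 50$ and the projected covariance is an isotropic $2500\,I$, that is, a circular splat with a standard deviation of 50 pixels.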

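The final color $C$ of a pixel is computed by alpha-blending the depth-sorted Gaussians from front to back:

$$C = \sum_{i \in N} c_i \alpha_i \prod_{j=1}^{i-1} (1 - \alpha_j)$$

Where:

- $N$ is the set of Gaussians overlapping the pixel, sorted by depth.
- $c_i$ is the color of the i-th Gaussian.
- $\alpha_i$ is the final opacity of the i-th Gaussian, which is calculated from its 2D projection:

$$\alpha_i = \alpha^{\prime}_i \exp\left(-\frac{1}{2}(p - \mu^{\prime}_i)^T {\Sigma^{\prime}_i}^{-1} (p - \mu^{\prime}_i)\right)$$

Here $p$ is the pixel position, $\mu^{\prime}_i$ is the projected 2D mean of the i-th Gaussian, and:

- $\alpha^\prime$ is the learned opacity.
- $\Sigma^{\prime}$ is the 2D projection of the 3D covariance.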
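Front-to-back blending for a single pixel can be sketched as a short loop. This assumes the standard compositing rule $C = \sum_i c_i \alpha_i \prod_{j<i}(1-\alpha_j)$ and takes the per-Gaussian $\alpha_i$ values as already computed; the early-exit threshold `min_transmittance` is an illustrative choice, and `composite_pixel` is a hypothetical helper name.

```python
import numpy as np

def composite_pixel(colors, alphas, min_transmittance=1e-4):
    """Blend depth-sorted Gaussians front to back for one pixel."""
    pixel = np.zeros(3)
    transmittance = 1.0  # fraction of light not yet absorbed by closer Gaussians
    for color, alpha in zip(colors, alphas):
        pixel += transmittance * alpha * np.asarray(color, dtype=np.float64)
        transmittance *= 1.0 - alpha
        if transmittance < min_transmittance:
            break  # the pixel is effectively opaque; later Gaussians add nothing visible
    return pixel, transmittance

# A half-transparent red Gaussian in front of a half-transparent green one.
colors = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
alphas = [0.5, 0.5]
pixel, remaining = composite_pixel(colors, alphas)
```

The red Gaussian contributes $0.5$, the green one only $0.5 \times 0.5 = 0.25$ because half of the light is already blocked, and a transmittance of $0.25$ is left over for the background.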

The parameters of the Gaussians are optimized to reconstruct a scene from a set of input images with known camera poses. The training process involves:

- Initialization: The process starts with a sparse point cloud generated from the input images using Structure-from-Motion (SfM), for example, with COLMAP. These points are used to initialize the positions ($\mu$) of the Gaussians.
- Optimization: The parameters of the Gaussians (position, covariance, color, opacity) are optimized using stochastic gradient descent. The loss function is typically a combination of an L1 loss and a D-SSIM (Structural Dissimilarity Index) term, comparing the rendered images with the training images.
- Adaptive Densification: During optimization, the set of Gaussians is adaptively modified to better represent the scene. This involves:
    - Cloning: Duplicating small Gaussians in areas that are under-reconstructed.
    - Splitting: Splitting large Gaussians in areas with high variance.
    - Pruning: Removing Gaussians that are nearly transparent (very low opacity).
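The clone/split/prune decision can be sketched with boolean masks. This is a simplified illustration, not the training code: it assumes, following the original paper, that a large view-space positional gradient marks a Gaussian that needs densification and that its size decides between cloning and splitting. The function name and all threshold values are placeholders chosen for the example.

```python
import numpy as np

def densification_masks(grad_norm, max_scale, opacity,
                        grad_threshold=0.0002,  # illustrative value
                        scale_threshold=0.01,   # illustrative value
                        min_opacity=0.005):     # illustrative value
    """Return boolean masks selecting Gaussians to clone, split, and prune."""
    needs_densify = grad_norm > grad_threshold
    clone = needs_densify & (max_scale <= scale_threshold)  # small: duplicate
    split = needs_densify & (max_scale > scale_threshold)   # large: subdivide
    prune = opacity < min_opacity                           # nearly transparent
    return clone, split, prune

grad_norm = np.array([0.001, 0.001, 0.0, 0.0])
max_scale = np.array([0.005, 0.5, 0.005, 0.5])
opacity = np.array([0.9, 0.9, 0.001, 0.9])
clone, split, prune = densification_masks(grad_norm, max_scale, opacity)
```

Here the first Gaussian would be cloned, the second split, the third pruned, and the fourth left unchanged.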

- Understand and implement tile-based rendering.

- Train from scratch with PyTorch code.

- 3D Gaussian Splatting for Real-Time Radiance Field Rendering

- A Survey on 3D Gaussian Splatting

- Mip-Splatting: Alias-free 3D Gaussian Splatting

- 2D Gaussian Splatting for Geometrically Accurate Radiance Fields

- Dynamic 3D Gaussians: Tracking by Persistent Dynamic View Synthesis

- 4D Gaussian Splatting for Real-Time Dynamic Scene Rendering

- VastGaussian: Vast 3D Gaussians for Large Scene Reconstruction

- Games101

- Visualizing quaternions (4d numbers) with stereographic projection

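- [Paper Explanation] Building Radiance Fields with Point Clouds and 3D Gaussians: The New SOTA for Fast Training and Real-Time Rendering (【论文讲解】用点云结合3D高斯构建辐射场,成为快速训练、实时渲染的新SOTA!)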

- 3D Gaussian Splatting Tutorial

- A Comprehensive Overview of Gaussian Splatting

- Introduction to 3D Gaussian Splatting

- Exploring 3D Gaussian Splatting

- 3D Gaussian Splatting Introduction – Paper Explanation & Training on Custom Datasets with NeRF Studio Gsplats

- Rendering in 3D Gaussian Splatting

- 3D Gaussian Splatting

- A Detailed Explanation of the 3D Gaussian Splatting CUDA Kernel: Forward Pass (详解3D Gaussian Splatting CUDA Kernel:前向传播)

- 2DGS: 2D Gaussian Splatting for Geometrically Accurate Radiance Fields

- Understanding 3D Gaussian Splats by writing a software renderer

- [Concept summary] 3D Gaussian and 2D projection

❤️ Thanks to Cursor for generating well-formatted code comments!