forked from apache/spark


[pull] master from apache:master #38


Merged


…CY_ERROR_TEMP_2026-2050

### What changes were proposed in this pull request?

This PR proposes to migrate 25 execution errors onto temporary error classes named `_LEGACY_ERROR_TEMP_2026` through `_LEGACY_ERROR_TEMP_2050`.

The `_LEGACY_ERROR_TEMP_` prefix marks dev-facing error messages that won't be exposed to end users.

### Why are the changes needed?

To speed up the error class migration.

Migrating onto temporary error classes allows us to analyze the errors, so we can detect the most popular error classes.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

```
$ build/sbt "sql/testOnly org.apache.spark.sql.SQLQueryTestSuite"
$ build/sbt "test:testOnly *SQLQuerySuite"
```

Closes #38108 from itholic/SPARK-40540-2026-2050.

Lead-authored-by: itholic <[email protected]>

Co-authored-by: Haejoon Lee <[email protected]>

Signed-off-by: Max Gekk <[email protected]>

### What changes were proposed in this pull request?

This PR aims to upgrade the `sbt-assembly` plugin from `0.15.0` to `1.2.0`.

### Why are the changes needed?

`sbt-assembly` 1.2.0 no longer supports sbt 0.13. The release notes are as follows:

- https://github.com/sbt/sbt-assembly/releases/tag/v1.0.0
- https://github.com/sbt/sbt-assembly/releases/tag/v1.1.0
- https://github.com/sbt/sbt-assembly/releases/tag/v1.2.0

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Pass GitHub Actions

Closes #38164 from LuciferYang/SPARK-40712.

Authored-by: yangjie01 <[email protected]>

Signed-off-by: Sean Owen <[email protected]>

…cumentation

### What changes were proposed in this pull request?

This PR aims to supplement undocumented YARN configurations in the documentation.

### Why are the changes needed?

Help users confirm YARN configurations through the documentation instead of the code.

### Does this PR introduce _any_ user-facing change?

Yes, more configurations in the documentation.

### How was this patch tested?

Pass the GA.

Closes #38150 from dcoliversun/SPARK-40699.

Authored-by: Qian.Sun <[email protected]>

Signed-off-by: Sean Owen <[email protected]>

…cumentation

### What changes were proposed in this pull request?

This PR aims to supplement undocumented Avro configurations in the documentation.

### Why are the changes needed?

Help users confirm configurations through the documentation instead of the code.

### Does this PR introduce _any_ user-facing change?

Yes, more configurations in the documentation.

### How was this patch tested?

Pass the GA.

Closes #38156 from dcoliversun/SPARK-40709.

Authored-by: Qian.Sun <[email protected]>

Signed-off-by: Sean Owen <[email protected]>

… documentation

### What changes were proposed in this pull request?

This PR aims to supplement undocumented Parquet configurations in the documentation.

### Why are the changes needed?

Help users confirm configurations through the documentation instead of the code.

### Does this PR introduce _any_ user-facing change?

Yes, more configurations in the documentation.

### How was this patch tested?

Pass the GA.

Closes #38160 from dcoliversun/SPARK-40710.

Authored-by: Qian.Sun <[email protected]>

Signed-off-by: Sean Owen <[email protected]>

…configuration.md`

### What changes were proposed in this pull request?

This PR aims to add the Spark configurations from `org.apache.spark.internal.config` that are missing in `configuration.md`.

### Why are the changes needed?

Help users confirm configurations through the documentation instead of the code.

### Does this PR introduce _any_ user-facing change?

Yes, more configurations in the documentation.

### How was this patch tested?

Pass the GitHub Actions.

Closes #38131 from dcoliversun/SPARK-40675.

Authored-by: Qian.Sun <[email protected]>

Signed-off-by: Sean Owen <[email protected]>

…CY_ERROR_TEMP_2051-2075

### What changes were proposed in this pull request?

This PR proposes to migrate 25 execution errors onto temporary error classes named `_LEGACY_ERROR_TEMP_2051` through `_LEGACY_ERROR_TEMP_2075`.

The `_LEGACY_ERROR_TEMP_` prefix marks dev-facing error messages that won't be exposed to end users.

### Why are the changes needed?

To speed up the error class migration.

Migrating onto temporary error classes allows us to analyze the errors, so we can detect the most popular error classes.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

```
$ build/sbt "sql/testOnly org.apache.spark.sql.SQLQueryTestSuite"
$ build/sbt "test:testOnly *SQLQuerySuite"
```

Closes #38116 from itholic/SPARK-40540-2051-2075.

Lead-authored-by: itholic <[email protected]>

Co-authored-by: Haejoon Lee <[email protected]>

Signed-off-by: Max Gekk <[email protected]>

### What changes were proposed in this pull request?

This patch replaces the shaded version of Netty in gRPC with the unshaded one, since we're shading the result again and want to avoid double-shaded package names.

### Why are the changes needed?

To avoid unnecessary double-shaded package names.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing tests will catch any compilation break.

Closes #38179 from grundprinzip/spark-40718.

Authored-by: Martin Grund <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

…fix code style

### What changes were proposed in this pull request?

We are following the progress of Spark Connect closely, so we studied the current draft and read the preliminary implementation. Some of the code style is inappropriate or even confusing, so this PR tries to correct it.

### Why are the changes needed?

Fix code style on the Scala server side.

### Does this PR introduce _any_ user-facing change?

'No'. Spark Connect just started.

### How was this patch tested?

N/A

Closes #38061 from beliefer/SPARK-40448_followup.

Authored-by: Jiaan Geng <[email protected]>

Signed-off-by: Hyukjin Kwon <[email protected]>

### What changes were proposed in this pull request?

Simplify `corr` with the method `inline`.

### Why are the changes needed?

After `inline` was introduced into PySpark, we can use it to simplify existing implementations.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing UT

Closes #38184 from zhengruifeng/ps_corr_inline.

Authored-by: Ruifeng Zheng <[email protected]>

Signed-off-by: Ruifeng Zheng <[email protected]>

### What changes were proposed in this pull request?

Add `mypy-protobuf` to dev/requirements

### Why are the changes needed?

`connector/connect/dev/generate_protos.sh` requires this package:

```

DEBUG /buf.alpha.registry.v1alpha1.GenerateService/GeneratePlugins {"duration": "14.25µs", "http.path": "/buf.alpha.registry.v1alpha1.GenerateService/GeneratePlugins", "http.url": "https://api.buf.build/buf.alpha.registry.v1alpha1.GenerateService/GeneratePlugins", "http.host": "api.buf.build", "http.method": "POST", "http.user_agent": "connect-go/0.4.0-dev (go1.19.2)"}

DEBUG command {"duration": "9.238333ms"}

Failure: plugin mypy: could not find protoc plugin for name mypy

```

### Does this PR introduce _any_ user-facing change?

No, only for contributors

### How was this patch tested?

manually check

Closes #38186 from zhengruifeng/add_mypy-protobuf_to_requirements.

Authored-by: Ruifeng Zheng <[email protected]>

Signed-off-by: Yikun Jiang <[email protected]>

…_protos.sh`

### What changes were proposed in this pull request?

Fix the path in `connector/connect/dev/generate_protos.sh`

### Why are the changes needed?

```
(spark_dev) ➜ dev git:(master) pwd
/Users/ruifeng.zheng/Dev/spark/connector/connect/dev
(spark_dev) ➜ dev git:(master) ./generate_protos.sh
+++ dirname ./generate_protos.sh
++ cd ./../..
++ pwd
+ SPARK_HOME=/Users/ruifeng.zheng/Dev/spark/connector
+ cd /Users/ruifeng.zheng/Dev/spark/connector
+ pushd connector/connect/src/main
./generate_protos.sh: line 22: pushd: connector/connect/src/main: No such file or directory
```

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Manually checked

Closes #38187 from zhengruifeng/connect_fix_generate_protos.

Authored-by: Ruifeng Zheng <[email protected]>

Signed-off-by: Hyukjin Kwon <[email protected]>
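The trace above shows `cd ./../..` landing in `.../spark/connector` rather than in the repository root, which is why the later `pushd connector/connect/src/main` fails. A minimal sketch of that path arithmetic follows. It assumes the intended `SPARK_HOME` is three levels above the script directory; the commit message does not show the actual fix, and the helper `ancestor` is purely illustrative.

```python
from pathlib import PurePosixPath

# Directory containing generate_protos.sh, copied from the trace above.
script_dir = PurePosixPath("/Users/ruifeng.zheng/Dev/spark/connector/connect/dev")


def ancestor(path: PurePosixPath, levels: int) -> PurePosixPath:
    """Return the directory `levels` steps above `path` (like appending `/..` that many times)."""
    return path.parents[levels - 1]


# What `cd "$(dirname ...)"/../..` resolves to: two levels up.
two_up = ancestor(script_dir, 2)
# Assumption: the repository root, three levels up, is what the script needs.
three_up = ancestor(script_dir, 3)

print(two_up)                                     # the SPARK_HOME seen in the trace
print(three_up / "connector/connect/src/main")    # the directory pushd was looking for
print(two_up / "connector/connect/src/main")      # the path that does not exist
```

Resolving the root relative to the script location, rather than the caller's working directory, keeps the script runnable from any directory as long as the number of `..` steps matches the script's depth in the tree.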

…dependencies for the `connect` module

### What changes were proposed in this pull request?

After #38179, there are 2 netty versions in Spark:

- 4.1.72 for the `connect` module
- 4.1.80 for other modules

So this PR explicitly adds netty-related dependencies for the `connect` module to ensure Spark uses a unified netty version.

### Why are the changes needed?

Ensure Spark uses a unified netty version.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

- Pass GitHub Actions
- Manual test: run `mvn dependency:tree -pl connector/connect | grep netty`

Before

```
[INFO] | +- io.netty:netty-all:jar:4.1.80.Final:provided
[INFO] | | +- io.netty:netty-buffer:jar:4.1.80.Final:compile
[INFO] | | +- io.netty:netty-codec:jar:4.1.80.Final:compile
[INFO] | | +- io.netty:netty-common:jar:4.1.80.Final:compile
[INFO] | | +- io.netty:netty-handler:jar:4.1.80.Final:compile
[INFO] | | +- io.netty:netty-resolver:jar:4.1.80.Final:provided
[INFO] | | +- io.netty:netty-transport:jar:4.1.80.Final:compile
[INFO] | | +- io.netty:netty-transport-classes-epoll:jar:4.1.80.Final:provided
[INFO] | | \- io.netty:netty-transport-classes-kqueue:jar:4.1.80.Final:provided
[INFO] | +- io.netty:netty-transport-native-epoll:jar:linux-x86_64:4.1.80.Final:provided
[INFO] | +- io.netty:netty-transport-native-epoll:jar:linux-aarch_64:4.1.80.Final:provided
[INFO] | +- io.netty:netty-transport-native-kqueue:jar:osx-aarch_64:4.1.80.Final:provided
[INFO] | +- io.netty:netty-transport-native-kqueue:jar:osx-x86_64:4.1.80.Final:provided
[INFO] | +- io.netty:netty-tcnative-classes:jar:2.0.54.Final:provided
[INFO] | \- org.apache.arrow:arrow-memory-netty:jar:9.0.0:provided
[INFO] +- io.grpc:grpc-netty:jar:1.47.0:compile
[INFO] | +- io.netty:netty-codec-http2:jar:4.1.72.Final:compile
[INFO] | | \- io.netty:netty-codec-http:jar:4.1.72.Final:compile
[INFO] | +- io.netty:netty-handler-proxy:jar:4.1.72.Final:runtime
[INFO] | | \- io.netty:netty-codec-socks:jar:4.1.72.Final:runtime
[INFO] | \- io.netty:netty-transport-native-unix-common:jar:4.1.72.Final:runtime
```

After

```
[INFO] | +- io.netty:netty-all:jar:4.1.80.Final:provided
[INFO] | | +- io.netty:netty-resolver:jar:4.1.80.Final:provided
[INFO] | | +- io.netty:netty-transport-classes-epoll:jar:4.1.80.Final:provided
[INFO] | | \- io.netty:netty-transport-classes-kqueue:jar:4.1.80.Final:provided
[INFO] | +- io.netty:netty-transport-native-epoll:jar:linux-x86_64:4.1.80.Final:provided
[INFO] | +- io.netty:netty-transport-native-epoll:jar:linux-aarch_64:4.1.80.Final:provided
[INFO] | +- io.netty:netty-transport-native-kqueue:jar:osx-aarch_64:4.1.80.Final:provided
[INFO] | +- io.netty:netty-transport-native-kqueue:jar:osx-x86_64:4.1.80.Final:provided
[INFO] | +- io.netty:netty-tcnative-classes:jar:2.0.54.Final:provided
[INFO] | \- org.apache.arrow:arrow-memory-netty:jar:9.0.0:provided
[INFO] +- io.grpc:grpc-netty:jar:1.47.0:compile
[INFO] +- io.netty:netty-codec-http2:jar:4.1.80.Final:provided
[INFO] | +- io.netty:netty-common:jar:4.1.80.Final:provided
[INFO] | +- io.netty:netty-buffer:jar:4.1.80.Final:provided
[INFO] | +- io.netty:netty-transport:jar:4.1.80.Final:provided
[INFO] | +- io.netty:netty-codec:jar:4.1.80.Final:provided
[INFO] | +- io.netty:netty-handler:jar:4.1.80.Final:provided
[INFO] | \- io.netty:netty-codec-http:jar:4.1.80.Final:provided
[INFO] +- io.netty:netty-handler-proxy:jar:4.1.80.Final:provided
[INFO] | \- io.netty:netty-codec-socks:jar:4.1.80.Final:provided
[INFO] +- io.netty:netty-transport-native-unix-common:jar:4.1.80.Final:provided
```

Closes #38185 from LuciferYang/SPARK-40718-FOLLOWUP.

Authored-by: yangjie01 <[email protected]>

Signed-off-by: Hyukjin Kwon <[email protected]>
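The manual check above compares netty versions by eye. As a rough sketch, the same comparison could be scripted by grouping `io.netty` artifacts by version. The function name, the regular expression, and the decision to skip `netty-tcnative` artifacts (which follow their own 2.0.x version line) are assumptions made for illustration; they are not part of the PR. The sample lines are a subset copied from the output above.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List

# Matches Maven coordinates of the form
#   io.netty:<artifact>:jar[:<classifier>]:<version>:<scope>
NETTY_COORD = re.compile(
    r"io\.netty:(?P<artifact>[\w.-]+):jar:(?:[\w-]+:)?(?P<version>[\w.]+):(?P<scope>\w+)\s*$"
)


def netty_versions(lines: Iterable[str]) -> Dict[str, List[str]]:
    """Group io.netty artifacts found in dependency-tree lines by version."""
    versions: Dict[str, List[str]] = defaultdict(list)
    for line in lines:
        match = NETTY_COORD.search(line)
        if match is None:
            continue  # not an io.netty coordinate (e.g. grpc-netty, arrow-memory-netty)
        if match["artifact"].startswith("netty-tcnative"):
            continue  # assumption: tcnative is versioned separately, so ignore it
        versions[match["version"]].append(match["artifact"])
    return dict(versions)


before = [
    r"[INFO] | +- io.netty:netty-all:jar:4.1.80.Final:provided",
    r"[INFO] | +- io.netty:netty-transport-native-epoll:jar:linux-x86_64:4.1.80.Final:provided",
    r"[INFO] | +- io.netty:netty-tcnative-classes:jar:2.0.54.Final:provided",
    r"[INFO] +- io.grpc:grpc-netty:jar:1.47.0:compile",
    r"[INFO] | +- io.netty:netty-codec-http2:jar:4.1.72.Final:compile",
    r"[INFO] | | \- io.netty:netty-codec-http:jar:4.1.72.Final:compile",
]
after = [
    r"[INFO] | +- io.netty:netty-all:jar:4.1.80.Final:provided",
    r"[INFO] +- io.netty:netty-codec-http2:jar:4.1.80.Final:provided",
    r"[INFO] | \- io.netty:netty-codec-http:jar:4.1.80.Final:provided",
]

print(sorted(netty_versions(before)))  # more than one entry means mixed netty versions
print(sorted(netty_versions(after)))
```

A single key in the result indicates a unified netty version for the module.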

…umentation

### What changes were proposed in this pull request?

This PR aims to supplement undocumented ORC configurations in the documentation.

### Why are the changes needed?

Help users confirm configurations through the documentation instead of the code.

### Does this PR introduce _any_ user-facing change?

Yes, more configurations in the documentation.

### How was this patch tested?

Pass the GA.

Closes #38188 from dcoliversun/SPARK-40726.

Authored-by: Qian.Sun <[email protected]>

Signed-off-by: Sean Owen <[email protected]>

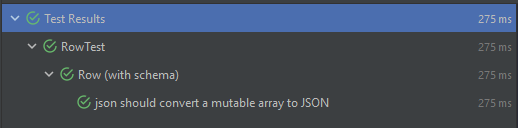

… Row to JSON for Scala 2.13

### What changes were proposed in this pull request?

While reading JSON files based on a schema with Spark, I encountered an issue: every time, it throws an exception related to type conversion.

>Note: This issue can be reproduced only with Scala `2.13`; I don't have this issue with `2.12`.

````
Failed to convert value ArraySeq(1, 2, 3) (class of class scala.collection.mutable.ArraySeq$ofRef}) with the type of ArrayType(StringType,true) to JSON.
java.lang.IllegalArgumentException: Failed to convert value ArraySeq(1, 2, 3) (class of class scala.collection.mutable.ArraySeq$ofRef}) with the type of ArrayType(StringType,true) to JSON.
````

If I add `ArraySeq` to the matching cases, the test that I added passes. With the current source code, the test fails with an error.

### Why are the changes needed?

Users on Scala 2.13 can't parse an array, which means they need to fall back to 2.12 to keep their project functioning.

### How was this patch tested?

I added a sample unit test for the case, but I can add more if you want me to.

Closes #38154 from Amraneze/fix/spark_40705.

Authored-by: Ait Zeouay Amrane <[email protected]>

Signed-off-by: Sean Owen <[email protected]>

### What changes were proposed in this pull request?

In the PR, I propose to remove `PartitionAlreadyExistsException` and use `PartitionsAlreadyExistException` in its place.

### Why are the changes needed?

1. To simplify user apps. After the changes, users don't need to catch both `PartitionsAlreadyExistException` and `PartitionAlreadyExistsException`.
2. To improve code maintenance, since we no longer need to support two almost identical pieces of code.
3. To avoid errors such as the one in PR #38152, which fixed `PartitionsAlreadyExistException` but not `PartitionAlreadyExistsException`.

### Does this PR introduce _any_ user-facing change?

Yes.

### How was this patch tested?

By running the affected test suites:

```
$ build/sbt -Phive-2.3 -Phive-thriftserver "test:testOnly *SupportsPartitionManagementSuite"
$ build/sbt -Phive-2.3 -Phive-thriftserver "test:testOnly *.AlterTableAddPartitionSuite"
```

Closes #38161 from MaxGekk/remove-PartitionAlreadyExistsException.

Authored-by: Max Gekk <[email protected]>

Signed-off-by: Max Gekk <[email protected]>

…n types

### What changes were proposed in this pull request?

1. Extend the support for Join to different join types. Before this PR, all joins were hardcoded to the `inner` type, so this PR adds support for other join types.
2. Add join to the connect DSL.
3. Update a few Join proto fields to better reflect the semantics.

### Why are the changes needed?

Extend the support for Join in connect.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

UT

Closes #38157 from amaliujia/SPARK-40534.

Authored-by: Rui Wang <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

…ndency for Spark Connect

### What changes were proposed in this pull request?

`mypy-protobuf` is only needed when the connect proto is changed and [generate_protos.sh](https://github.com/apache/spark/blob/master/connector/connect/dev/generate_protos.sh) is then used to update the generated proto files on the Python side. We should mark this dependency as optional for people who do not need it.

### Why are the changes needed?

`mypy-protobuf` can be an optional dependency for people who do not touch the connect proto files.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

N/A

Closes #38195 from amaliujia/dev_requirements.

Authored-by: Rui Wang <[email protected]>

Signed-off-by: Ruifeng Zheng <[email protected]>

…ecutorDecommissionInfo

### What changes were proposed in this pull request?

This change populates `ExecutorDecommission` with messages in `ExecutorDecommissionInfo`.

### Why are the changes needed?

Currently the message in `ExecutorDecommission` is a fixed value ("Executor decommission."), so it is the same for all cases, e.g. spot instance interruptions and auto-scaling down. With this change we can better differentiate those cases.

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Added a unit test.

Closes #38030 from bozhang2820/spark-40596.

Authored-by: Bo Zhang <[email protected]>

Signed-off-by: Yi Wu <[email protected]>

…ules

### What changes were proposed in this pull request?

The main change of this PR is to refactor the shade relocation/rename rules based on the result of `mvn dependency:tree -pl connector/connect`, to ensure that Maven and sbt produce the assembly jar according to the same rules.

The main parts of the `mvn dependency:tree -pl connector/connect` result are as follows:

```
[INFO] +- com.google.guava:guava:jar:31.0.1-jre:compile
[INFO] | +- com.google.guava:listenablefuture:jar:9999.0-empty-to-avoid-conflict-with-guava:compile
[INFO] | +- org.checkerframework:checker-qual:jar:3.12.0:compile
[INFO] | +- com.google.errorprone:error_prone_annotations:jar:2.7.1:compile
[INFO] | \- com.google.j2objc:j2objc-annotations:jar:1.3:compile
[INFO] +- com.google.guava:failureaccess:jar:1.0.1:compile
[INFO] +- com.google.protobuf:protobuf-java:jar:3.21.1:compile
[INFO] +- io.grpc:grpc-netty:jar:1.47.0:compile
[INFO] | +- io.grpc:grpc-core:jar:1.47.0:compile
[INFO] | | +- com.google.code.gson:gson:jar:2.9.0:runtime
[INFO] | | +- com.google.android:annotations:jar:4.1.1.4:runtime
[INFO] | | \- org.codehaus.mojo:animal-sniffer-annotations:jar:1.19:runtime
[INFO] | +- io.netty:netty-codec-http2:jar:4.1.72.Final:compile
[INFO] | | \- io.netty:netty-codec-http:jar:4.1.72.Final:compile
[INFO] | +- io.netty:netty-handler-proxy:jar:4.1.72.Final:runtime
[INFO] | | \- io.netty:netty-codec-socks:jar:4.1.72.Final:runtime
[INFO] | +- io.perfmark:perfmark-api:jar:0.25.0:runtime
[INFO] | \- io.netty:netty-transport-native-unix-common:jar:4.1.72.Final:runtime
[INFO] +- io.grpc:grpc-protobuf:jar:1.47.0:compile
[INFO] | +- io.grpc:grpc-api:jar:1.47.0:compile
[INFO] | | \- io.grpc:grpc-context:jar:1.47.0:compile
[INFO] | +- com.google.api.grpc:proto-google-common-protos:jar:2.0.1:compile
[INFO] | \- io.grpc:grpc-protobuf-lite:jar:1.47.0:compile
[INFO] +- io.grpc:grpc-services:jar:1.47.0:compile
[INFO] | \- com.google.protobuf:protobuf-java-util:jar:3.19.2:runtime
[INFO] +- io.grpc:grpc-stub:jar:1.47.0:compile
[INFO] +- org.spark-project.spark:unused:jar:1.0.0:compile
```

The new shade rule excludes the following jar packages:

- scala related jars
- netty related jars
- jars that only sbt included before: pmml-model-*.jar, findbugs jsr305-*.jar, spark unused-1.0.0.jar

So after this PR, maven shade will include the following jars:

```
[INFO] --- maven-shade-plugin:3.2.4:shade (default) spark-connect_2.12 ---
[INFO] Including com.google.guava:guava:jar:31.0.1-jre in the shaded jar.
[INFO] Including com.google.guava:listenablefuture:jar:9999.0-empty-to-avoid-conflict-with-guava in the shaded jar.
[INFO] Including org.checkerframework:checker-qual:jar:3.12.0 in the shaded jar.
[INFO] Including com.google.errorprone:error_prone_annotations:jar:2.7.1 in the shaded jar.
[INFO] Including com.google.j2objc:j2objc-annotations:jar:1.3 in the shaded jar.
[INFO] Including com.google.guava:failureaccess:jar:1.0.1 in the shaded jar.
[INFO] Including com.google.protobuf:protobuf-java:jar:3.21.1 in the shaded jar.
[INFO] Including io.grpc:grpc-netty:jar:1.47.0 in the shaded jar.
[INFO] Including io.grpc:grpc-core:jar:1.47.0 in the shaded jar.
[INFO] Including com.google.code.gson:gson:jar:2.9.0 in the shaded jar.
[INFO] Including com.google.android:annotations:jar:4.1.1.4 in the shaded jar.
[INFO] Including org.codehaus.mojo:animal-sniffer-annotations:jar:1.19 in the shaded jar.
[INFO] Including io.perfmark:perfmark-api:jar:0.25.0 in the shaded jar.
[INFO] Including io.grpc:grpc-protobuf:jar:1.47.0 in the shaded jar.
[INFO] Including io.grpc:grpc-api:jar:1.47.0 in the shaded jar.
[INFO] Including io.grpc:grpc-context:jar:1.47.0 in the shaded jar.
[INFO] Including com.google.api.grpc:proto-google-common-protos:jar:2.0.1 in the shaded jar.
[INFO] Including io.grpc:grpc-protobuf-lite:jar:1.47.0 in the shaded jar.
[INFO] Including io.grpc:grpc-services:jar:1.47.0 in the shaded jar.
[INFO] Including com.google.protobuf:protobuf-java-util:jar:3.19.2 in the shaded jar.
[INFO] Including io.grpc:grpc-stub:jar:1.47.0 in the shaded jar.
```

sbt assembly will include the following jars:

```
[debug] Including from cache: j2objc-annotations-1.3.jar
[debug] Including from cache: guava-31.0.1-jre.jar
[debug] Including from cache: protobuf-java-3.21.1.jar
[debug] Including from cache: grpc-services-1.47.0.jar
[debug] Including from cache: failureaccess-1.0.1.jar
[debug] Including from cache: grpc-stub-1.47.0.jar
[debug] Including from cache: perfmark-api-0.25.0.jar
[debug] Including from cache: annotations-4.1.1.4.jar
[debug] Including from cache: listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar
[debug] Including from cache: animal-sniffer-annotations-1.19.jar
[debug] Including from cache: checker-qual-3.12.0.jar
[debug] Including from cache: grpc-netty-1.47.0.jar
[debug] Including from cache: grpc-api-1.47.0.jar
[debug] Including from cache: grpc-protobuf-lite-1.47.0.jar
[debug] Including from cache: grpc-protobuf-1.47.0.jar
[debug] Including from cache: grpc-context-1.47.0.jar
[debug] Including from cache: grpc-core-1.47.0.jar
[debug] Including from cache: protobuf-java-util-3.19.2.jar
[debug] Including from cache: error_prone_annotations-2.10.0.jar
[debug] Including from cache: gson-2.9.0.jar
[debug] Including from cache: proto-google-common-protos-2.0.1.jar
```

All the dependencies mentioned above are relocated to the `org.sparkproject.connect` package according to the new rules to avoid conflicts with other third-party dependencies.

### Why are the changes needed?

Refactor the shade relocation/rename rules to ensure that Maven and sbt produce the assembly jar according to the same rules.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Pass GitHub Actions

Closes #38162 from LuciferYang/SPARK-40677-FOLLOWUP.

Lead-authored-by: yangjie01 <[email protected]>

Co-authored-by: YangJie <[email protected]>

Signed-off-by: Hyukjin Kwon <[email protected]>

…l inputs

### What changes were proposed in this pull request?

Add a dedicated expression for `product`:

1. for integral inputs, directly use `LongType` to avoid rounding errors;

2. when `ignoreNA` is true, skip the remaining values once a `zero` is met;

3. when `ignoreNA` is false, skip the remaining values once a `zero` or `null` is met.

### Why are the changes needed?

1. The existing computation logic on the PySpark side is too complex; with a dedicated expression, we can simplify the PySpark side and apply it in more cases.

2. The existing computation of `product` is likely to introduce rounding errors for integral inputs, for example `55108 x 55108 x 55108 x 55108` in the following case:

before:

```

In [14]: df = pd.DataFrame({"a": [55108, 55108, 55108, 55108], "b": [55108.0, 55108.0, 55108.0, 55108.0], "c": [1, 2, 3, 4]})

In [15]: df.a.prod()

Out[15]: 9222710978872688896

In [16]: type(df.a.prod())

Out[16]: numpy.int64

In [17]: df.b.prod()

Out[17]: 9.222710978872689e+18

In [18]: type(df.b.prod())

Out[18]: numpy.float64

In [19]:

In [19]: psdf = ps.from_pandas(df)

In [20]: psdf.a.prod()

Out[20]: 9222710978872658944

In [21]: type(psdf.a.prod())

Out[21]: int

In [22]: psdf.b.prod()

Out[22]: 9.222710978872659e+18

In [23]: type(psdf.b.prod())

Out[23]: float

In [24]: df.a.prod() - psdf.a.prod()

Out[24]: 29952

```

after:

```

In [1]: import pyspark.pandas as ps

In [2]: import pandas as pd

In [3]: df = pd.DataFrame({"a": [55108, 55108, 55108, 55108], "b": [55108.0, 55108.0, 55108.0, 55108.0], "c": [1, 2, 3, 4]})

In [4]: df.a.prod()

Out[4]: 9222710978872688896

In [5]: psdf = ps.from_pandas(df)

In [6]: psdf.a.prod()

Out[6]: 9222710978872688896

In [7]: df.a.prod() - psdf.a.prod()

Out[7]: 0

```
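To see why a floating-point route cannot reproduce the integral result above, here is a small pure-Python sketch. It assumes, for illustration only, that the product is obtained as `exp(sum(log(x)))`; the commit message does not state how the previous implementation computed it. The exact product is divisible by 2^8 but not by 2^10, while float64 values of this magnitude are spaced 1024 apart, so no float64 can represent it.

```python
import math

values = [55108, 55108, 55108, 55108]

# Exact integer product; Python ints have arbitrary precision.
exact = math.prod(values)

# Assumed floating-point route: exponentiate the sum of logarithms.
via_logs = math.exp(sum(math.log(v) for v in values))

INT64_MAX = 2**63 - 1

print(exact)                   # same value as pandas reports for df.a.prod()
print(exact <= INT64_MAX)      # the exact result still fits in a 64-bit long
print(exact % 1024)            # non-zero, so no float64 near 9.2e18 equals it
print(int(via_logs) - exact)   # non-zero rounding error on the float route
```

Accumulating in a 64-bit integer type, as the dedicated expression does with `LongType`, sidesteps this because the exact value stays within the `int64` range.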

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

existing UT & added UT

Closes #38148 from zhengruifeng/ps_new_prod.

Authored-by: Ruifeng Zheng <[email protected]>

Signed-off-by: Hyukjin Kwon <[email protected]>

…one grouping expressions

### What changes were proposed in this pull request?

1. Add `groupby` to the connect DSL and test more than one grouping expression.
2. Pass limited data types through the connect proto for LocalRelation's attributes.
3. Clean up an unused `Trait` in the testing code.

### Why are the changes needed?

Enhance connect's support for GROUP BY.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

UT

Closes #38155 from amaliujia/support_more_than_one_grouping_set.

Authored-by: Rui Wang <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

…classes

### What changes were proposed in this pull request?

In the PR, I propose to use error classes in the case of type check failure in collection expressions.

### Why are the changes needed?

Migration onto error classes unifies Spark SQL error messages.

### Does this PR introduce _any_ user-facing change?

Yes. The PR changes user-facing error messages.

### How was this patch tested?

```
build/sbt "sql/testOnly *SQLQueryTestSuite"
build/sbt "test:testOnly org.apache.spark.SparkThrowableSuite"
build/sbt "test:testOnly *ExpressionTypeCheckingSuite"
build/sbt "test:testOnly *DataFrameFunctionsSuite"
build/sbt "test:testOnly *DataFrameAggregateSuite"
build/sbt "test:testOnly *AnalysisErrorSuite"
build/sbt "test:testOnly *CollectionExpressionsSuite"
build/sbt "test:testOnly *ComplexTypeSuite"
build/sbt "test:testOnly *HigherOrderFunctionsSuite"
build/sbt "test:testOnly *PredicateSuite"
build/sbt "test:testOnly *TypeUtilsSuite"
```

Closes #38197 from lvshaokang/SPARK-40358.

Authored-by: lvshaokang <[email protected]>

Signed-off-by: Max Gekk <[email protected]>

### What changes were proposed in this pull request?

This PR cleans up the logic of `listFunctions`. Currently `listFunctions` gets all external functions and registered functions (built-in, temporary, and persistent functions with a specific database name). It is not necessary to get persistent functions that match a specific database name again, since `externalCatalog.listFunctions` already fetched them. We only need to list all built-in and temporary functions from the function registries.

### Why are the changes needed?

Code cleanup.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Existing unit tests.

Closes #38194 from allisonwang-db/spark-40740-list-functions.

Authored-by: allisonwang-db <[email protected]>

Signed-off-by: Hyukjin Kwon <[email protected]>
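A language-neutral sketch of the cleaned-up lookup described above, written in Python for illustration. The tuple representation `(database, name)`, the function `list_functions`, and the sample function names are all hypothetical; they are not Spark's actual API or the PR's code. The idea shown is only that persistent functions come from the external catalog once, and the registry contributes just its unqualified (built-in and temporary) entries.

```python
from typing import Iterable, List, Optional, Tuple

# Hypothetical identifier: (database, name); database is None for built-in/temporary functions.
FunctionId = Tuple[Optional[str], str]


def list_functions(
    db: str,
    external: Iterable[FunctionId],
    registry: Iterable[FunctionId],
) -> List[FunctionId]:
    """Combine persistent functions of `db` with built-in/temporary registry entries."""
    # Persistent functions: taken from the external catalog only.
    persistent = {f for f in external if f[0] == db}
    # Registry: keep only unqualified entries; qualified ones would repeat the catalog.
    unqualified = {f for f in registry if f[0] is None}
    return sorted(persistent | unqualified, key=lambda f: (f[0] or "", f[1]))


external_catalog = [("sales", "to_usd"), ("hr", "mask_name")]
function_registry = [(None, "abs"), (None, "my_temp_udf"), ("sales", "to_usd")]

print(list_functions("sales", external_catalog, function_registry))
```

The registry's qualified `("sales", "to_usd")` entry is ignored without changing the result, which mirrors the observation that re-reading persistent functions from the registry is redundant.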

### What changes were proposed in this pull request?

Code refactor on all File data source options:

- `TextOptions`
- `CSVOptions`
- `JSONOptions`
- `AvroOptions`
- `ParquetOptions`
- `OrcOptions`
- `FileIndex` related options

Change semantics:

- First, we introduce a new trait `DataSourceOptions`, which defines the following functions:
  - `newOption(name)`: register a new option
  - `newOption(name, alternative)`: register a new option with an alternative
  - `getAllValidOptions`: retrieve all valid options
  - `isValidOption(name)`: validate a given option name
  - `getAlternativeOption(name)`: get the alternative option name, if any
- Then, for each class above:
  - Create/update its companion object to extend the trait above and register all valid options within it.
  - Update places where name strings are used directly to fetch option values so that they use those option constants instead.
  - Add a unit test for each file data source's options.

### Why are the changes needed?

Currently, for each file data source, the options are scattered throughout the options class and there is no clear list of all supported options. As more and more options are added, readability gets worse. Thus, we want to refactor that code so that we can:

- easily get a list of supported options for each data source
- enforce better practice for adding new options going forward.

### Does this PR introduce _any_ user-facing change?

No

### How was this patch tested?

Closes #38113 from xiaonanyang-db/SPARK-40667.

Authored-by: xiaonanyang-db <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>
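The registry idea behind `DataSourceOptions` can be sketched in a few lines. The following is a Python analogue for illustration, not the Scala trait from the PR: the method names are snake_case renderings of the functions listed above, the choice to register an alternative in both directions is an assumption, and the option names `sep`, `delimiter`, and `header` are used only as sample data.

```python
from typing import Dict, Optional, Set


class DataSourceOptions:
    """Illustrative registry of valid option names and their alternatives."""

    def __init__(self) -> None:
        # Maps every valid option name to its alternative name (or None).
        self._valid: Dict[str, Optional[str]] = {}

    def new_option(self, name: str, alternative: Optional[str] = None) -> str:
        """Register an option and return its name so it can be kept as a constant."""
        self._valid[name] = alternative
        if alternative is not None:
            # Assumption: the alternative is itself valid and points back to `name`.
            self._valid[alternative] = name
        return name

    def get_all_valid_options(self) -> Set[str]:
        return set(self._valid)

    def is_valid_option(self, name: str) -> bool:
        return name in self._valid

    def get_alternative_option(self, name: str) -> Optional[str]:
        return self._valid.get(name)


# Hypothetical stand-in for a companion object that registers its options once.
csv_options = DataSourceOptions()
SEP = csv_options.new_option("sep", "delimiter")
HEADER = csv_options.new_option("header")

print(sorted(csv_options.get_all_valid_options()))
print(csv_options.get_alternative_option(SEP))
print(csv_options.is_valid_option("separator"))
```

Reading options through constants such as `SEP` instead of repeated string literals is what makes the full list of supported options discoverable in one place.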

pull bot pushed a commit that referenced this pull request May 1, 2024

… spark docker image ### What changes were proposed in this pull request? The pr aims to update the packages name removed in building the spark docker image. ### Why are the changes needed? When our default image base was switched from `ubuntu 20.04` to `ubuntu 22.04`, the unused installation package in the base image has changed, in order to eliminate some warnings in building images and free disk space more accurately, we need to correct it. Before: ``` #35 [29/31] RUN apt-get remove --purge -y '^aspnet.*' '^dotnet-.*' '^llvm-.*' 'php.*' '^mongodb-.*' snapd google-chrome-stable microsoft-edge-stable firefox azure-cli google-cloud-sdk mono-devel powershell libgl1-mesa-dri || true #35 0.489 Reading package lists... #35 0.505 Building dependency tree... #35 0.507 Reading state information... #35 0.511 E: Unable to locate package ^aspnet.* #35 0.511 E: Couldn't find any package by glob '^aspnet.*' #35 0.511 E: Couldn't find any package by regex '^aspnet.*' #35 0.511 E: Unable to locate package ^dotnet-.* #35 0.511 E: Couldn't find any package by glob '^dotnet-.*' #35 0.511 E: Couldn't find any package by regex '^dotnet-.*' #35 0.511 E: Unable to locate package ^llvm-.* #35 0.511 E: Couldn't find any package by glob '^llvm-.*' #35 0.511 E: Couldn't find any package by regex '^llvm-.*' #35 0.511 E: Unable to locate package ^mongodb-.* #35 0.511 E: Couldn't find any package by glob '^mongodb-.*' #35 0.511 EPackage 'php-crypt-gpg' is not installed, so not removed #35 0.511 Package 'php' is not installed, so not removed #35 0.511 : Couldn't find any package by regex '^mongodb-.*' #35 0.511 E: Unable to locate package snapd #35 0.511 E: Unable to locate package google-chrome-stable #35 0.511 E: Unable to locate package microsoft-edge-stable #35 0.511 E: Unable to locate package firefox #35 0.511 E: Unable to locate package azure-cli #35 0.511 E: Unable to locate package google-cloud-sdk #35 0.511 E: Unable to locate package mono-devel #35 0.511 E: Unable to locate package 
powershell #35 DONE 0.5s #36 [30/31] RUN apt-get autoremove --purge -y #36 0.063 Reading package lists... #36 0.079 Building dependency tree... #36 0.082 Reading state information... #36 0.088 0 upgraded, 0 newly installed, 0 to remove and 0 not upgraded. #36 DONE 0.4s ``` After: ``` #38 [32/36] RUN apt-get remove --purge -y 'gfortran-11' 'humanity-icon-theme' 'nodejs-doc' || true #38 0.066 Reading package lists... #38 0.087 Building dependency tree... #38 0.089 Reading state information... #38 0.094 The following packages were automatically installed and are no longer required: #38 0.094 at-spi2-core bzip2-doc dbus-user-session dconf-gsettings-backend #38 0.095 dconf-service gsettings-desktop-schemas gtk-update-icon-cache #38 0.095 hicolor-icon-theme libatk-bridge2.0-0 libatk1.0-0 libatk1.0-data #38 0.095 libatspi2.0-0 libbz2-dev libcairo-gobject2 libcolord2 libdconf1 libepoxy0 #38 0.095 libgfortran-11-dev libgtk-3-common libjs-highlight.js libllvm11 #38 0.095 libncurses-dev libncurses5-dev libphobos2-ldc-shared98 libreadline-dev #38 0.095 librsvg2-2 librsvg2-common libvte-2.91-common libwayland-client0 #38 0.095 libwayland-cursor0 libwayland-egl1 libxdamage1 libxkbcommon0 #38 0.095 session-migration tilix-common xkb-data #38 0.095 Use 'apt autoremove' to remove them. #38 0.096 The following packages will be REMOVED: #38 0.096 adwaita-icon-theme* gfortran* gfortran-11* humanity-icon-theme* libgtk-3-0* #38 0.096 libgtk-3-bin* libgtkd-3-0* libvte-2.91-0* libvted-3-0* nodejs-doc* #38 0.096 r-base-dev* tilix* ubuntu-mono* #38 0.248 0 upgraded, 0 newly installed, 13 to remove and 0 not upgraded. #38 0.248 After this operation, 99.6 MB disk space will be freed. ... (Reading database ... 70597 files and directories currently installed.) #38 0.304 Removing r-base-dev (4.1.2-1ubuntu2) ... #38 0.319 Removing gfortran (4:11.2.0-1ubuntu1) ... #38 0.340 Removing gfortran-11 (11.4.0-1ubuntu1~22.04) ... #38 0.356 Removing tilix (1.9.4-2build1) ... 
#38 0.377 Removing libvted-3-0:amd64 (3.10.0-1ubuntu1) ... #38 0.392 Removing libvte-2.91-0:amd64 (0.68.0-1) ... #38 0.407 Removing libgtk-3-bin (3.24.33-1ubuntu2) ... #38 0.422 Removing libgtkd-3-0:amd64 (3.10.0-1ubuntu1) ... #38 0.436 Removing nodejs-doc (12.22.9~dfsg-1ubuntu3.4) ... #38 0.457 Removing libgtk-3-0:amd64 (3.24.33-1ubuntu2) ... #38 0.488 Removing ubuntu-mono (20.10-0ubuntu2) ... #38 0.754 Removing humanity-icon-theme (0.6.16) ... #38 1.362 Removing adwaita-icon-theme (41.0-1ubuntu1) ... #38 1.537 Processing triggers for libc-bin (2.35-0ubuntu3.6) ... #38 1.566 Processing triggers for mailcap (3.70+nmu1ubuntu1) ... #38 1.577 Processing triggers for libglib2.0-0:amd64 (2.72.4-0ubuntu2.2) ... (Reading database ... 56946 files and directories currently installed.) #38 1.645 Purging configuration files for libgtk-3-0:amd64 (3.24.33-1ubuntu2) ... #38 1.657 Purging configuration files for ubuntu-mono (20.10-0ubuntu2) ... #38 1.670 Purging configuration files for humanity-icon-theme (0.6.16) ... #38 1.682 Purging configuration files for adwaita-icon-theme (41.0-1ubuntu1) ... #38 DONE 1.7s #39 [33/36] RUN apt-get autoremove --purge -y #39 0.061 Reading package lists... #39 0.075 Building dependency tree... #39 0.077 Reading state information... 
#39 0.083 The following packages will be REMOVED: #39 0.083 at-spi2-core* bzip2-doc* dbus-user-session* dconf-gsettings-backend* #39 0.083 dconf-service* gsettings-desktop-schemas* gtk-update-icon-cache* #39 0.083 hicolor-icon-theme* libatk-bridge2.0-0* libatk1.0-0* libatk1.0-data* #39 0.083 libatspi2.0-0* libbz2-dev* libcairo-gobject2* libcolord2* libdconf1* #39 0.083 libepoxy0* libgfortran-11-dev* libgtk-3-common* libjs-highlight.js* #39 0.083 libllvm11* libncurses-dev* libncurses5-dev* libphobos2-ldc-shared98* #39 0.083 libreadline-dev* librsvg2-2* librsvg2-common* libvte-2.91-common* #39 0.083 libwayland-client0* libwayland-cursor0* libwayland-egl1* libxdamage1* #39 0.083 libxkbcommon0* session-migration* tilix-common* xkb-data* #39 0.231 0 upgraded, 0 newly installed, 36 to remove and 0 not upgraded. #39 0.231 After this operation, 124 MB disk space will be freed. ``` ### Does this PR introduce _any_ user-facing change? No. ### How was this patch tested? - Manually test. - Pass GA. ### Was this patch authored or co-authored using generative AI tooling? No. Closes apache#46258 from panbingkun/remove_packages_on_ubuntu. Authored-by: panbingkun <[email protected]> Signed-off-by: Dongjoon Hyun <[email protected]>

See Commits and Changes for more details.

Created by pull[bot]
